GLM-4.5 is the latest series of large language models developed by Z.ai, designed specifically for agentic workflows, advanced reasoning tasks, and software development. GLM-4.5 represents a significant step forward for open-source AI, offering enterprise-level capabilities that were previously only available through proprietary models.

In this blog, we will explore five easy ways to access the GLM-4.5 models (and a couple of harder ones). You will learn how to use them through online chat applications, via the official Z.ai API, through LLM marketplaces, and finally, how to run them locally yourself.

Build with GLM-4.5: Use GLM-4.5 with agent frameworks for building autonomous AI applications or enhance it with RAG frameworks for knowledge-augmented responses.

What is GLM-4.5?

GLM-4.5 is an open-source large language model that rivals GPT-4 and Claude, offering advanced reasoning, code generation, and tool integration capabilities for free.

The series includes two versions: GLM-4.5 (355B) and GLM-4.5-Air (106B).

Both models use a Mixture-of-Experts (MoE) architecture and support hybrid reasoning with two distinct operational modes: a “thinking mode” for complex reasoning and tool use, and a “non-thinking mode” for quick, immediate responses.
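When the models are called through an API (covered later in this post), the mode is typically chosen per request. The sketch below builds the keyword arguments for a chat completion call. The `thinking` field and its `enabled`/`disabled` values are an assumption based on Z.ai's API documentation and should be checked against the current docs; the helper name `build_chat_kwargs` is hypothetical.

```python
def build_chat_kwargs(prompt: str, think: bool, model: str = "glm-4.5") -> dict:
    """Build keyword arguments for a chat completion request.

    Assumption: the API accepts a `thinking` field whose `type` is
    "enabled" or "disabled" to switch between the two modes.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "thinking": {"type": "enabled" if think else "disabled"},
    }


# Complex task: ask for step-by-step reasoning before the answer.
deep = build_chat_kwargs("Plan a database migration in five steps.", think=True)

# Simple lookup: skip the reasoning phase for a faster reply.
quick = build_chat_kwargs("What is the capital of France?", think=False)

print(deep["thinking"], quick["thinking"])
```

If the SDK forwards the field unchanged, these arguments could be passed to a client as `chat.completions.create(**deep)`.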

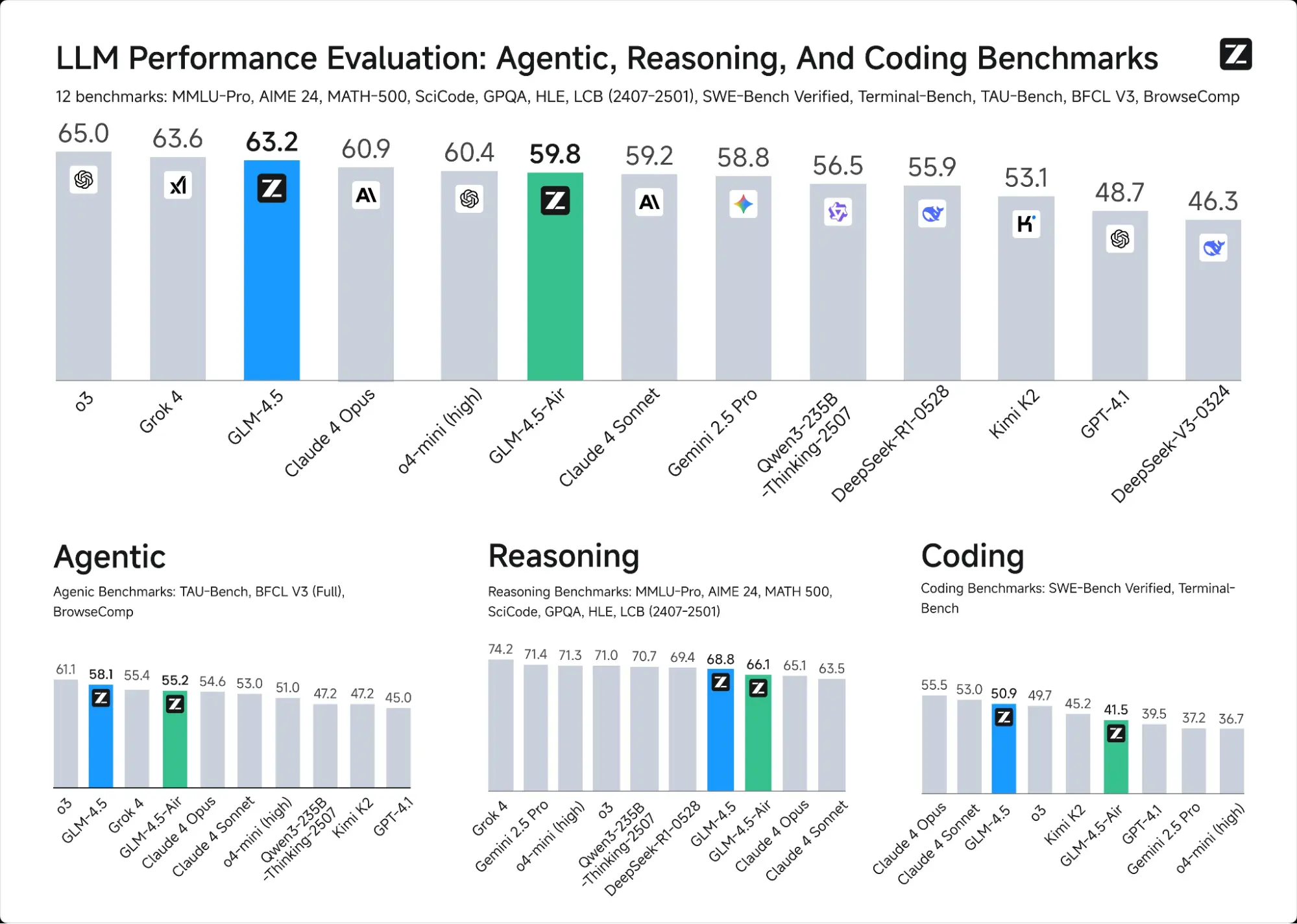

On 12 industry-standard benchmarks, GLM-4.5 achieved an impressive score of 63.2, ranking third among all proprietary and open-source models. Meanwhile, the GLM-4.5-Air model delivered a competitive score of 59.8, securing sixth place while maintaining exceptional speed and efficiency.

Free Chat Applications

Many popular AI chat applications that previously offered a mix of open-source and proprietary models are now including GLM-4.5 in their lineup. However, access to GLM-4.5 through these platforms typically requires a paid subscription.

If you want to test and experience GLM-4.5 for free, you have two great options:

- Z.ai’s Official Chatbot: It lets you interact with the model directly with code generation, testing, and deployment options.

- Hugging Face Demo: It lets you try out GLM-4.5 without any setup or cost.

1. Z.ai Chatbot

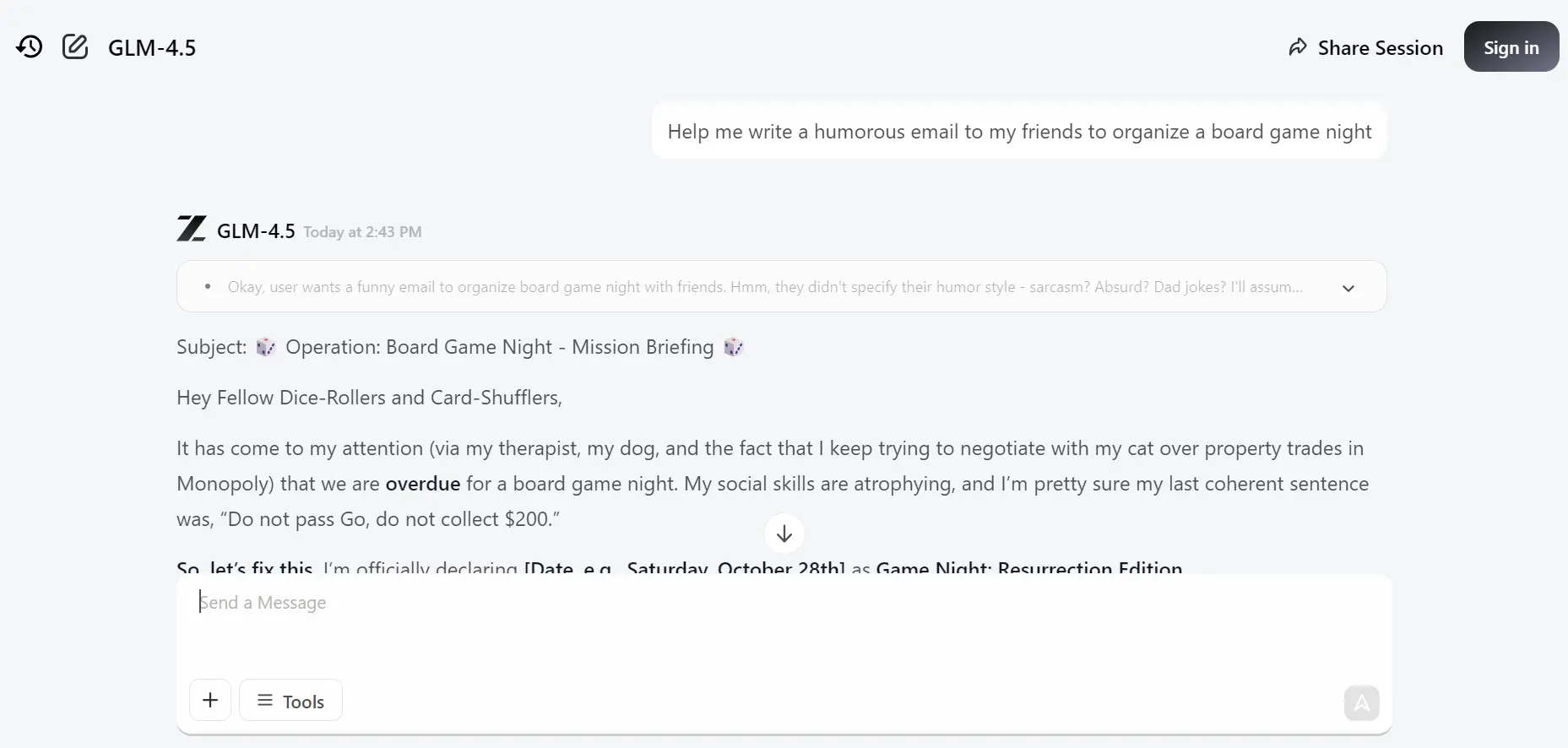

Z.ai Chatbot is a free AI assistant based on GLM-4.5, designed to help users instantly generate code, presentations, and professional writing through an easy-to-use chat interface. It has many features similar to ChatGPT, allowing you to generate code, preview applications, and even deploy them. This is the best way to test the full capabilities of the GLM-4.5 model.

To use it, go to https://chat.z.ai/. You'll likely need to create an account. Enter a prompt and you will see the model display its thinking process. After a few seconds, it will generate the actual response.

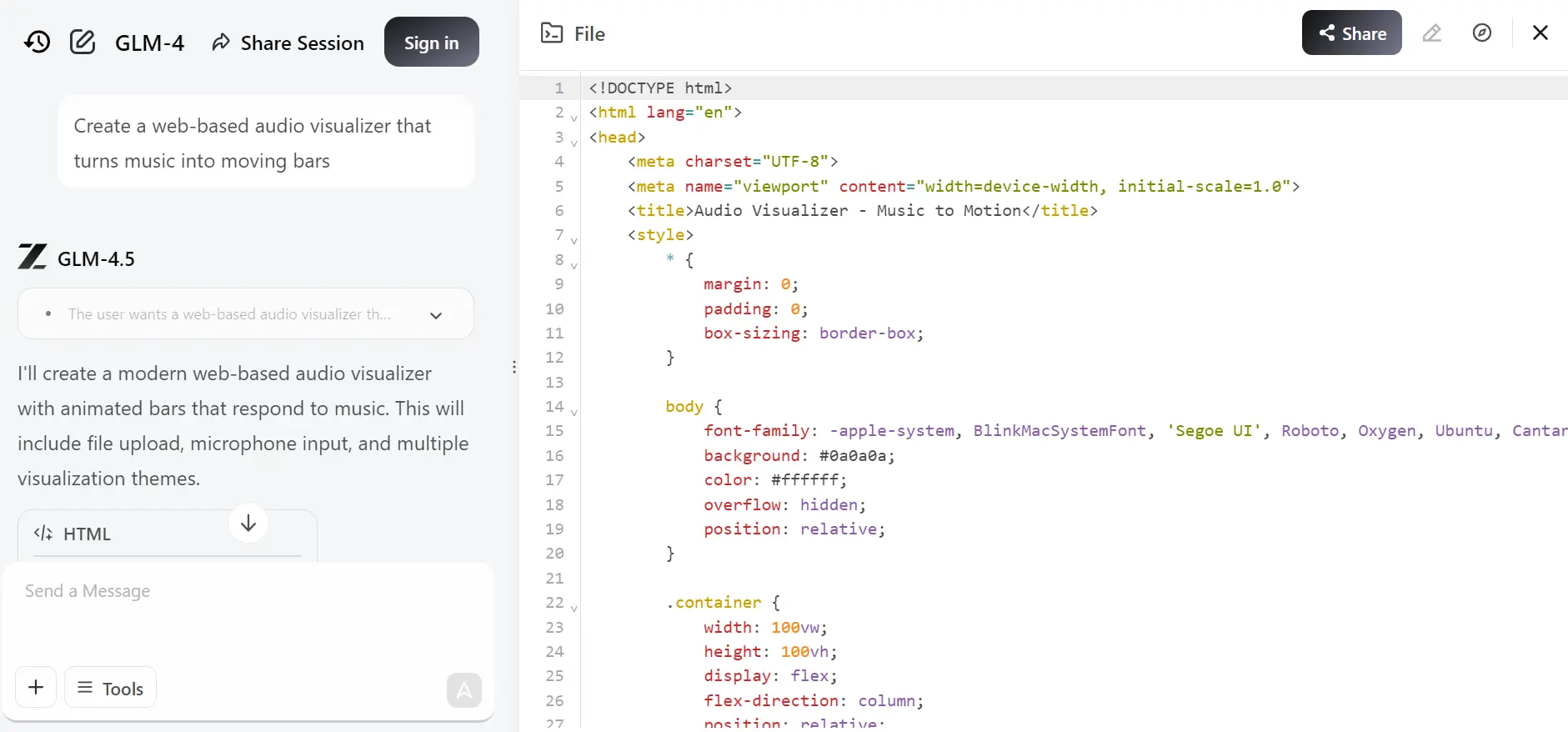

You can even start building your own web application in seconds. For example, you can send a prompt like this:

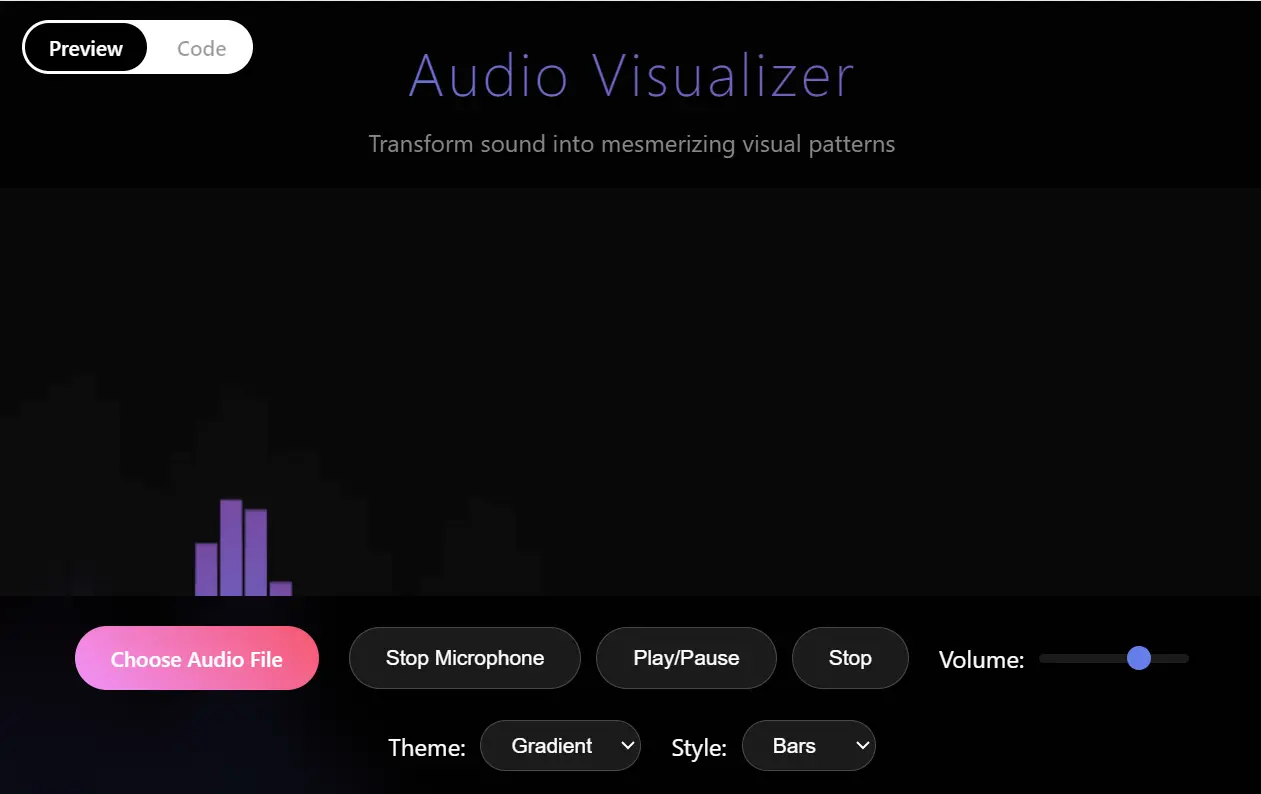

Create a web-based audio visualizer that turns music into moving bars

It will generate the HTML code for you and allow you to preview it.

Here is a preview of the application, which works right out of the box. With just one prompt, we created a working web application.

By clicking the “Open Website” button on the top right, you can also deploy this application and share it with anyone.

2. Hugging Face Demo

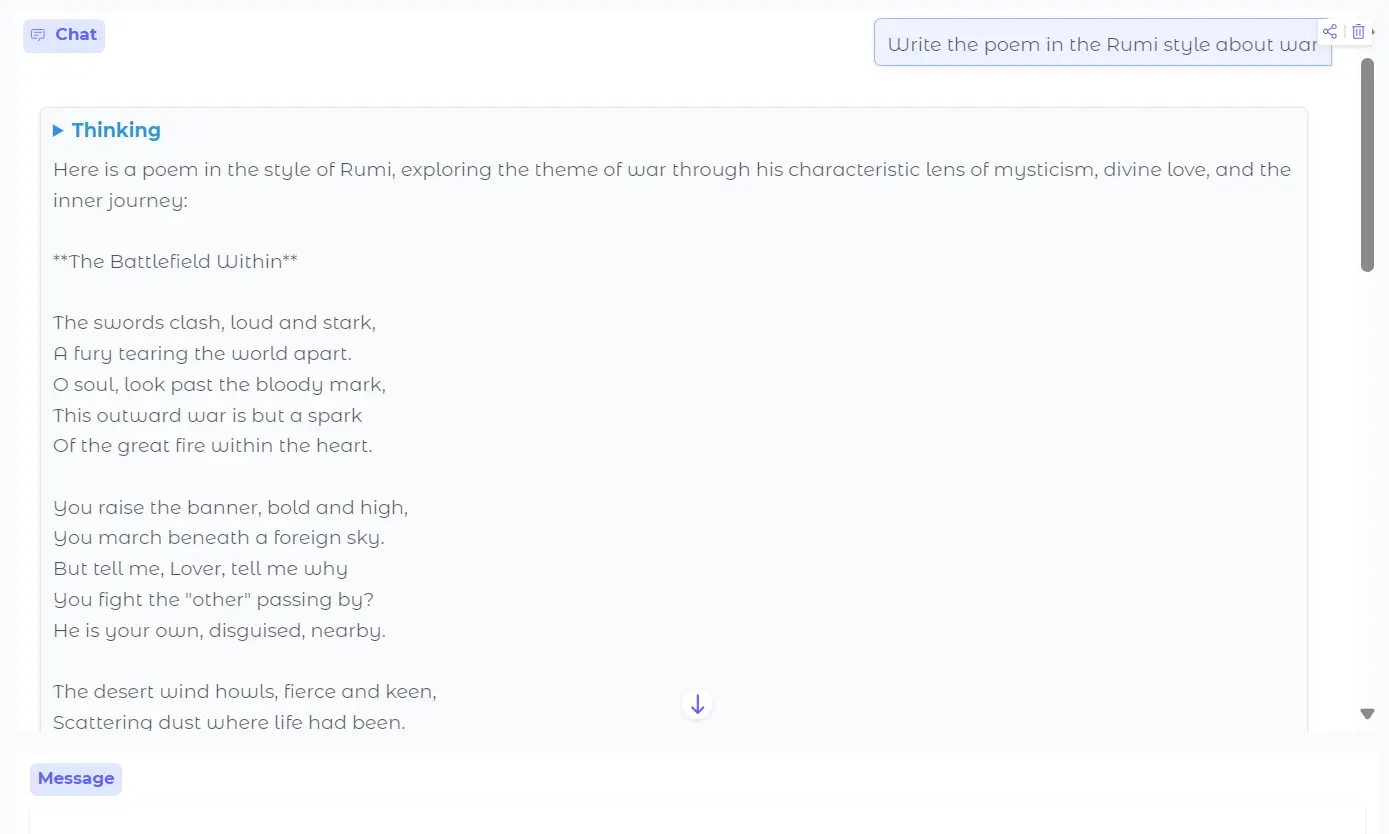

The Hugging Face Demo for GLM-4.5 allows you to interact with the model directly in your browser, providing detailed answers and step-by-step reasoning for your questions, all without the need for signups or setup.

To try it out, go to the GLM-4.5 Demo (API). There, you'll find a simple chat application where you can enter a prompt and generate responses along with the reasoning behind them. It is a fast and straightforward way to test the GLM-4.5 model. While it doesn't have the full features of the official chatbot and won't help you generate complex web applications, it's still a user-friendly tool that you can test for free as many times as you like, with no restrictions.

Official API

You can access the GLM-4.5 model through a variety of inference providers, many of which offer API endpoints for easy integration. However, if you're looking for a streamlined and official experience, Z.ai provides its own open API platform.

The official API comes with advanced features such as function calling and other core functionalities similar to those found in the OpenAI Python SDK.

3. Z.AI Open Platform

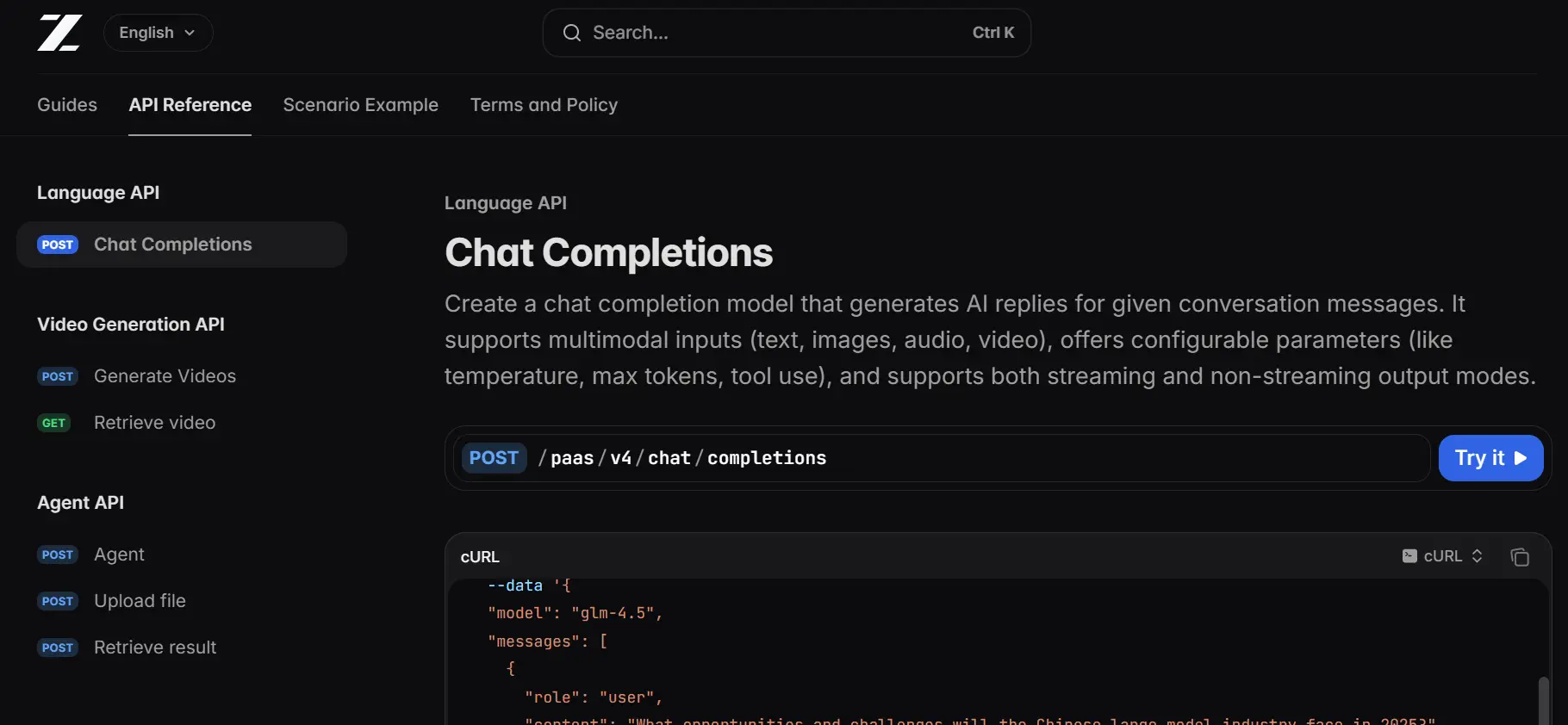

Z.AI Open Platform offers official, OpenAI-compatible API access to GLM-4.5, allowing developers to easily integrate advanced reasoning and agentic capabilities into their applications with a simple setup and robust support.

You can find the API endpoints documentation for Chat Completions on Z.AI, which includes a limited but powerful set of endpoints for LLMs, video generation, and agentic workflows.

In this example, we will use the Z.ai Python SDK to build an agentic application that employs Firecrawl for web searching and web scraping.

-

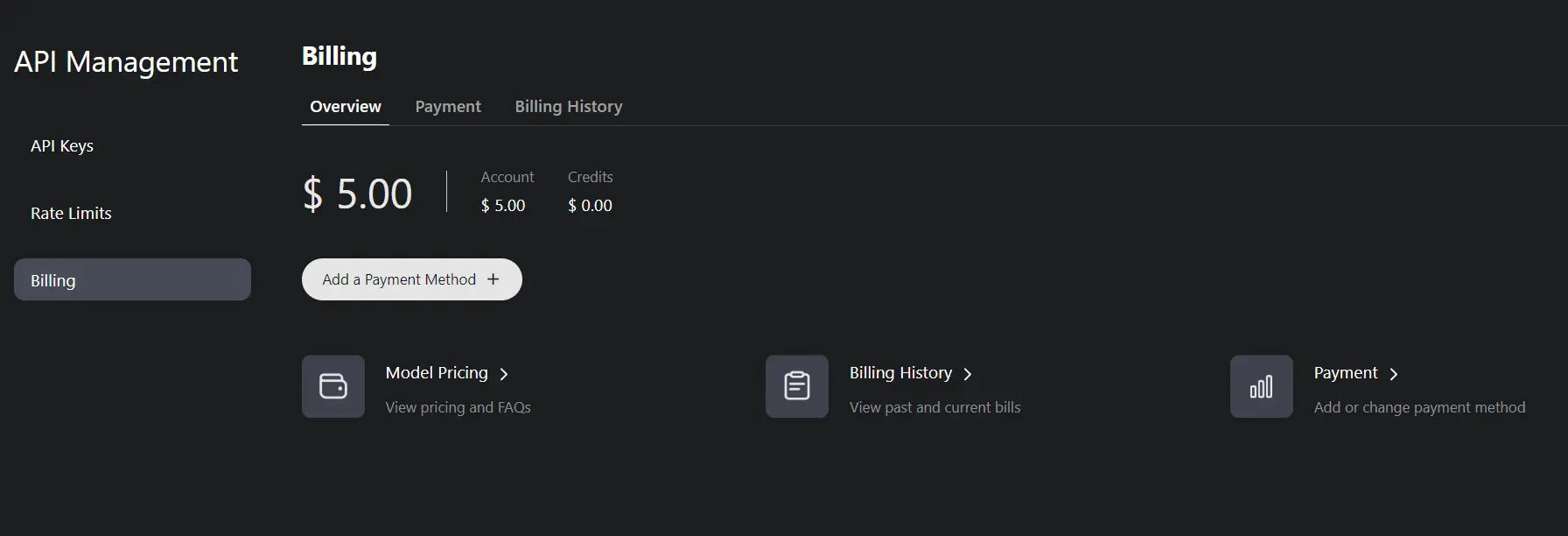

Visit the official Z.ai website and create an account (or if you made an account to test the Z.ai chatbot, use the login).

-

Navigate to the “Billing” tab and add at least $5 in credit using your credit card.

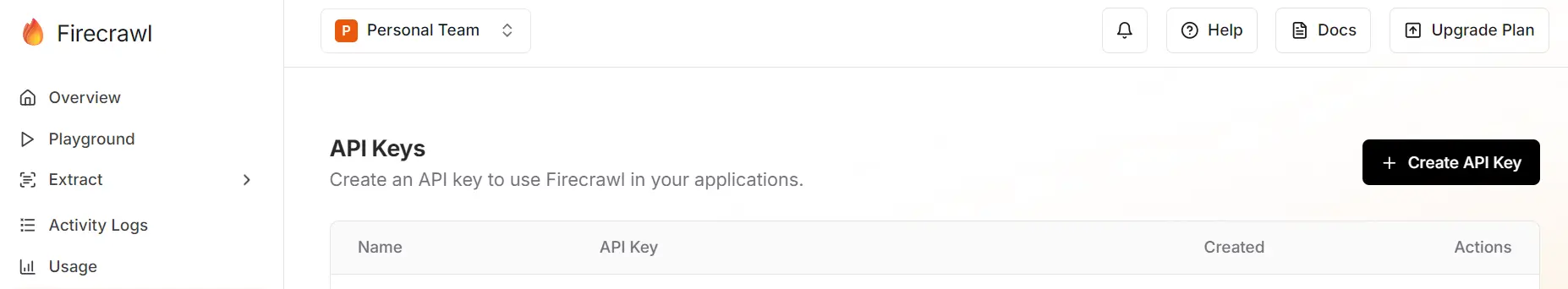

- Go to the “API Keys” tab and generate an API key. Then, set it as an environment variable in your local system.

    export ZAI_API_KEY="your-api-key-here"

- Sign up for Firecrawl to receive free credits, then generate an API key and set it as the FIRECRAWL_API_KEY environment variable in your system.

- Install two Python packages: zai-sdk (for AI model access) and firecrawl-py (for web scraping):

    pip install zai-sdk
    pip install firecrawl-py

Note: Please refer to the official Firecrawl documentation to learn more about search customization and other APIs.

- Now we'll create some Python functions with these APIs. scrape_website() uses Firecrawl to scrape a single URL and convert it to markdown format, while search_web() performs web searches using Firecrawl and formats results with titles, URLs, and descriptions.

    import os
    import json

    from zai import ZaiClient
    from firecrawl import FirecrawlApp

    # Initialize clients
    zai_client = ZaiClient(api_key=os.getenv("ZAI_API_KEY"))
    firecrawl_app = FirecrawlApp(api_key=os.getenv("FIRECRAWL_API_KEY"))


    def scrape_website(url: str) -> str:
        """Scrape a website using Firecrawl"""
        try:
            scrape_result = firecrawl_app.scrape_url(
                url,
                formats=["markdown"],  # Specify the desired formats
                maxAge=3600000,  # 1 hour in milliseconds
            )
            return scrape_result.markdown
        except Exception as e:
            return f"Error scraping: {str(e)}"


    def search_web(query: str, limit: int = 5) -> str:
        """Search the web using Firecrawl"""
        try:
            search_result = firecrawl_app.search(query, limit=limit)
            results = []
            for result in search_result.data:
                results.append(
                    f"**{result['title']}**\nURL: {result['url']}\nDescription: {result['description']}\n"
                )
            return "\n".join(results) if results else "No results found"
        except Exception as e:
            return f"Error searching: {str(e)}"

- The tool definitions specify what external actions the AI can perform. Here, we define two tools for function calling: one for website scraping and one for web searching.

    # Define tools for function calling
    tools = [
        {
            "type": "function",
            "function": {
                "name": "scrape_website",
                "description": "Scrape content from a website URL",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "url": {"type": "string", "description": "The URL to scrape"}
                    },
                    "required": ["url"],
                },
            },
        },
        {
            "type": "function",
            "function": {
                "name": "search_web",
                "description": "Search the web for information",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "query": {"type": "string", "description": "Search query"},
                        "limit": {
                            "type": "integer",
                            "description": "Number of results",
                            "default": 5,
                        },
                    },
                    "required": ["query"],
                },
            },
        },
    ]

- Next, we'll define the functions that bring everything together.

run_function() is a router function that executes either scraping or searching based on the function name.

chat_with_tools() is the main orchestrator that:

- Sends user message to ZAI's GLM-4.5 model

- Detects if the AI wants to use tools (scraping/searching)

- Executes the requested tools and feeds results back to the AI

- Returns the final AI response with tool-enhanced information

    def run_function(name: str, args: dict):
        """Execute the requested function"""
        if name == "scrape_website":
            return scrape_website(args["url"])
        elif name == "search_web":
            return search_web(args["query"], args.get("limit", 5))
        else:
            # Report unknown tools back to the model instead of returning None
            return f"Unknown function: {name}"


    def chat_with_tools(message: str):
        """Main chat function with tool calling"""
        # Initial request
        response = zai_client.chat.completions.create(
            model="glm-4.5",
            messages=[{"role": "user", "content": message}],
            tools=tools,
            tool_choice="auto",
            temperature=0.7,
            top_p=0.8,
        )
        assistant_message = response.choices[0].message

        # Handle tool calls
        if assistant_message.tool_calls:
            # Convert tool calls to serializable format
            tool_calls_dict = []
            for tool_call in assistant_message.tool_calls:
                tool_calls_dict.append(
                    {
                        "id": tool_call.id,
                        "type": "function",
                        "function": {
                            "name": tool_call.function.name,
                            "arguments": tool_call.function.arguments,
                        },
                    }
                )

            messages = [
                {"role": "user", "content": message},
                {
                    "role": "assistant",
                    "content": assistant_message.content,
                    "tool_calls": tool_calls_dict,
                },
            ]

            for tool_call in assistant_message.tool_calls:
                function_name = tool_call.function.name
                function_args = json.loads(tool_call.function.arguments)
                print(f"🔧 Using {function_name} with {function_args}")

                # Run the function
                result = run_function(function_name, function_args)
                messages.append(
                    {"role": "tool", "tool_call_id": tool_call.id, "content": result}
                )

            # Get final response
            final_response = zai_client.chat.completions.create(
                model="glm-4.5",
                messages=messages,
                temperature=0.7,
                top_p=0.8,
            )
            return final_response.choices[0].message.content
        else:
            return assistant_message.content

- Next, you can test the application by invoking the web search function. It may take a few seconds to complete.

    result = chat_with_tools("Search for information about Python web scraping")
    print(result)

Output:

    🔧 Using search_web with {'query': 'Python web scraping'}
    I found several great resources about Python web scraping. Here's a summary of the key information:

    ### Top Resources for Learning Python Web Scraping:

    1. **[Python Web Scraping: Full Tutorial With Examples (2025)](https://www.scrapingbee.com/blog/web-scraping-101-with-python/)**
       - Comprehensive guide covering basics to advanced techniques
       - Updated for 2025 with modern approaches

    2. **[A Practical Introduction to Web Scraping in Python](https://realpython.com/python-web-scraping-practical-introduction/)**
       - From Real Python, a trusted learning resource
       - Covers fundamental concepts and popular libraries.......

- Finally, test out the web scraping function.

    result = chat_with_tools(
        "Please scrape the https://docs.firecrawl.dev/introduction webpage."
    )
    print(result)

Output:

    🔧 Using scrape_website with {'url': 'https://docs.firecrawl.dev/introduction'}
    I've successfully scraped the Firecrawl introduction webpage. Here's a summary of the key information:

    ## Firecrawl Overview

    Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It can crawl all accessible subpages and provide clean markdown for each without requiring a sitemap.........
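The walkthrough above assumes the model always returns well-formed arguments for each tool call. A minimal sketch of a defensive parsing step follows, assuming the same `parameters` schema layout used in the tool definitions in this section. The helper name `parse_tool_args` is hypothetical and not part of any SDK; it returns an error string that could be sent back to the model as the tool result instead of raising an exception.

```python
import json
from typing import Optional, Tuple


def parse_tool_args(raw: str, schema: dict) -> Tuple[dict, Optional[str]]:
    """Parse a tool call's JSON arguments and check required fields.

    `schema` is the "parameters" object from a tool definition.
    Returns (args, None) on success or ({}, error_message) on failure.
    """
    try:
        args = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {}, f"Invalid JSON arguments: {exc.msg}"
    if not isinstance(args, dict):
        return {}, "Arguments must be a JSON object"
    missing = [key for key in schema.get("required", []) if key not in args]
    if missing:
        return {}, f"Missing required arguments: {', '.join(missing)}"
    return args, None


# Same shape as the "parameters" entry of the scrape_website tool.
scrape_schema = {
    "type": "object",
    "properties": {"url": {"type": "string"}},
    "required": ["url"],
}

ok_args, ok_err = parse_tool_args('{"url": "https://example.com"}', scrape_schema)
bad_args, bad_err = parse_tool_args("{}", scrape_schema)
print(ok_args, ok_err)
print(bad_args, bad_err)
```

One way to use it would be to call it in place of the bare `json.loads` inside `chat_with_tools` and, when an error is returned, append that error as the tool message so the model can retry.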

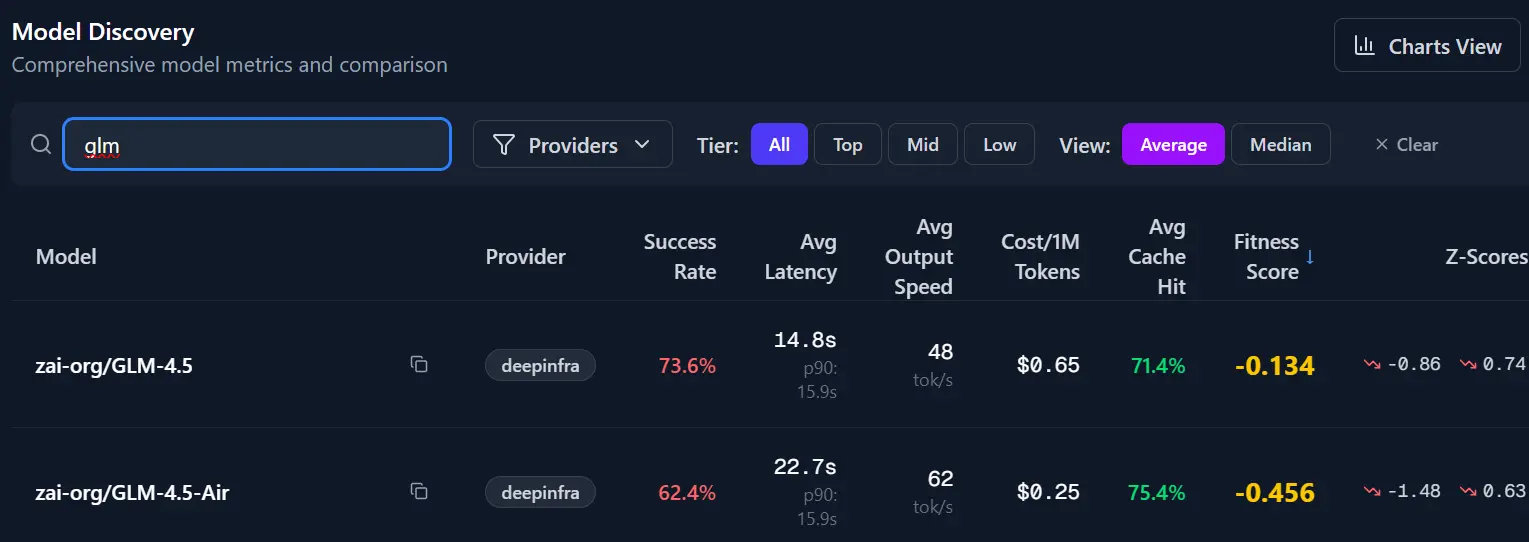

LLM Marketplace

Many AI enthusiasts and developers prefer to access new open-source and proprietary models through LLM marketplaces. These platforms often offer competitive pricing and faster response times, as multiple API providers compete to deliver the best service. In some cases, using a marketplace can be more cost-effective than going directly to a single provider, and you can easily compare different models and pricing options.
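To compare providers, it helps to translate per-token pricing into a per-request cost. The sketch below does that arithmetic. The provider names and prices are hypothetical placeholders, not actual GLM-4.5 rates, so substitute the figures shown on each marketplace's model page.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost in USD for one request, with prices given per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical (input, output) prices in USD per million tokens.
providers = {
    "provider_a": (0.60, 2.20),
    "provider_b": (0.50, 2.50),
}

# A request with 2,000 prompt tokens and 500 completion tokens.
costs = {name: request_cost(2_000, 500, *prices) for name, prices in providers.items()}
cheapest = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name}: ${cost:.6f}")
print("cheapest:", cheapest)
```

Which provider is cheapest depends on the ratio of prompt to completion tokens, so it is worth running the numbers with token counts typical of your own workload.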

In this section, we will highlight two major LLM marketplaces where you can use the GLM-4.5 model, both for free and with paid options:

- OpenRouter: The most popular LLM marketplace, offering a wide range of models with flexible pricing and fast access.

- Requesty.ai: This platform provides $1 of free credit to new users, allowing you to test GLM-4.5 and other models at no initial cost.

4. OpenRouter

OpenRouter is a marketplace for large language models that provides API access to over 400 AI models, including GLM-4.5, across more than 60 providers. It offers centralized billing, competitive pricing, and fast response times through a single, OpenAI-compatible interface.

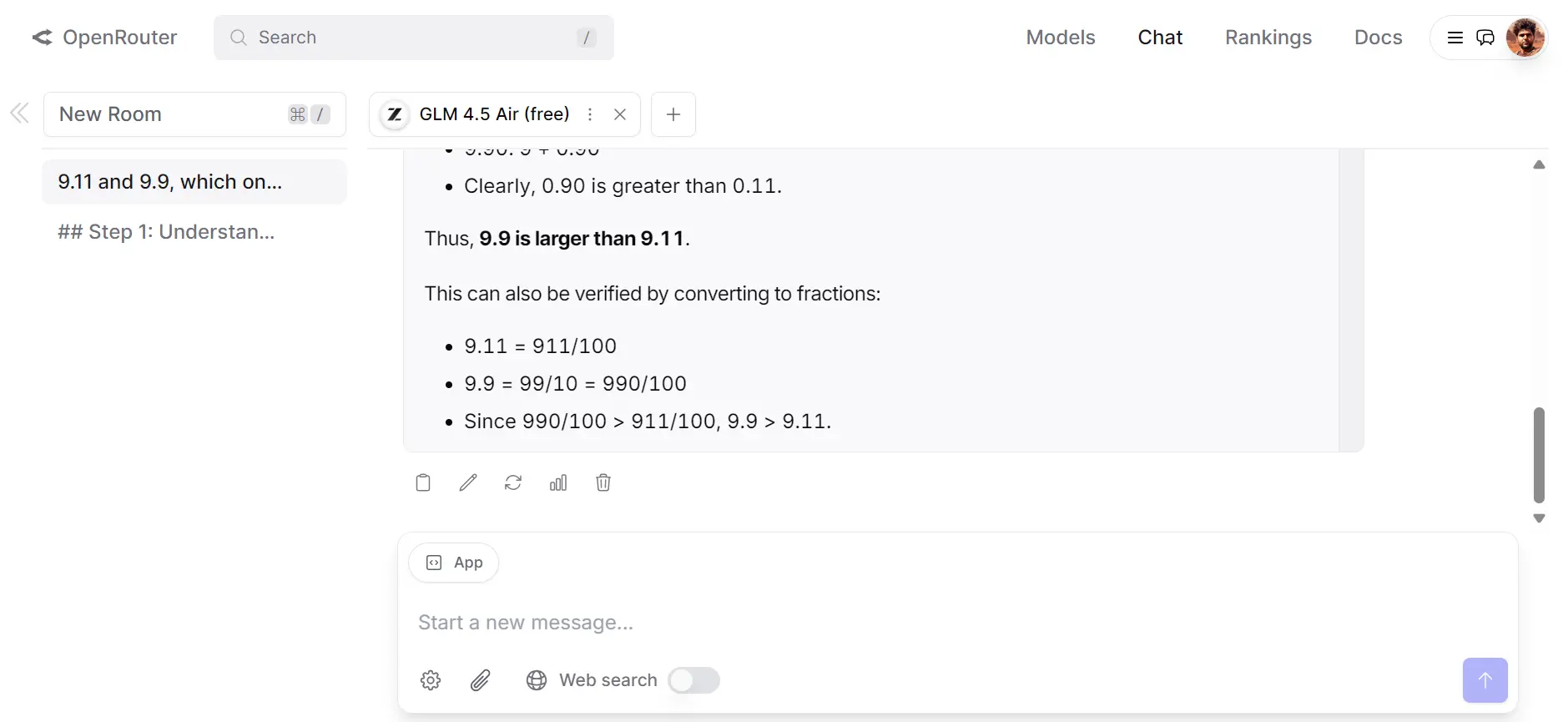

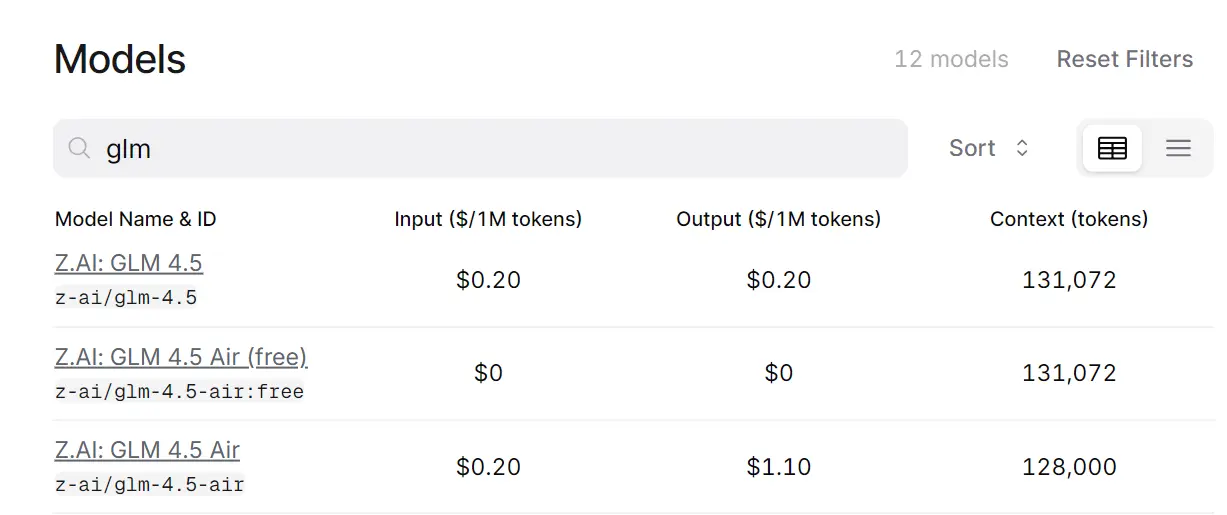

Currently, the GLM-4.5 Air model is provided by Chutes for free. You can even test this model on the OpenRouter "Chat" menu, which allows you to try all kinds of models.

If you click on the "Models" section and search for the GLM-4.5 model, you will get a list of available models with different pricing and context lengths.

In this example, we will use the OpenAI SDK to access the OpenRouter GLM-4.5 Air for free and use the Firecrawl API for search results. Our response will include updated information about the topic the user requested.

- Install the OpenAI SDK:

    pip install openai

- Create an account with OpenRouter, then generate the OpenRouter API key. Set it as an environment variable.

    export OPENROUTER_API_KEY="your-openrouter-api-key-here"

- Next, create a Python script that will take the user's question and pass it through the Firecrawl web search API.

    import os

    from openai import OpenAI
    from firecrawl import FirecrawlApp

    # Initialize clients
    client = OpenAI(
        base_url="https://openrouter.ai/api/v1",
        api_key=os.getenv("OPENROUTER_API_KEY"),
    )
    firecrawl_app = FirecrawlApp(api_key=os.getenv("FIRECRAWL_API_KEY"))

    question = "Search for the latest gold price available."

    # Search the web first
    print(f"🔍 Searching for: {question}")
    search_result = firecrawl_app.search(question, limit=5)

    # Format search results as context
    context = "Current web search results:\n\n"
    for i, result in enumerate(search_result.data, 1):
        context += f"{i}. {result['title']}\n{result['description']}\n\n"

- Combine the web search results and the user's question, then feed it to the GLM-4.5 Air model.

    # Get AI response with search context
    completion = client.chat.completions.create(
        model="z-ai/glm-4.5-air:free",
        messages=[
            {"role": "system", "content": "You are a helpful assistant. Use the provided web search results to give accurate, current information."},
            {"role": "user", "content": f"Question: {question}\n\n{context}\n\nAnswer based on the search results:"},
        ],
        temperature=0.7,
    )

    print(completion.choices[0].message.content)

Because the answer is grounded in fresh search results, it reflects current information:

    🔍 Searching for: Search for the latest gold price available.
    Based on the current web search results, the latest gold price is approximately:

    - $3,355 per ounce (JM Bullion)
    - $3,313 per ounce (Monex)
    - $3,347.79 per ounce (BullionVault)

    The prices are fluctuating throughout the trading day, with some sources showing a slight decline while others indicate the price is rebounding from earlier dips. Gold prices are constantly changing due to market conditions, including responses to economic developments such as U.S. tariff announcements.

    Note that prices may vary slightly between different providers as they update at different times and may include different premiums or fees.

5. Requesty.ai

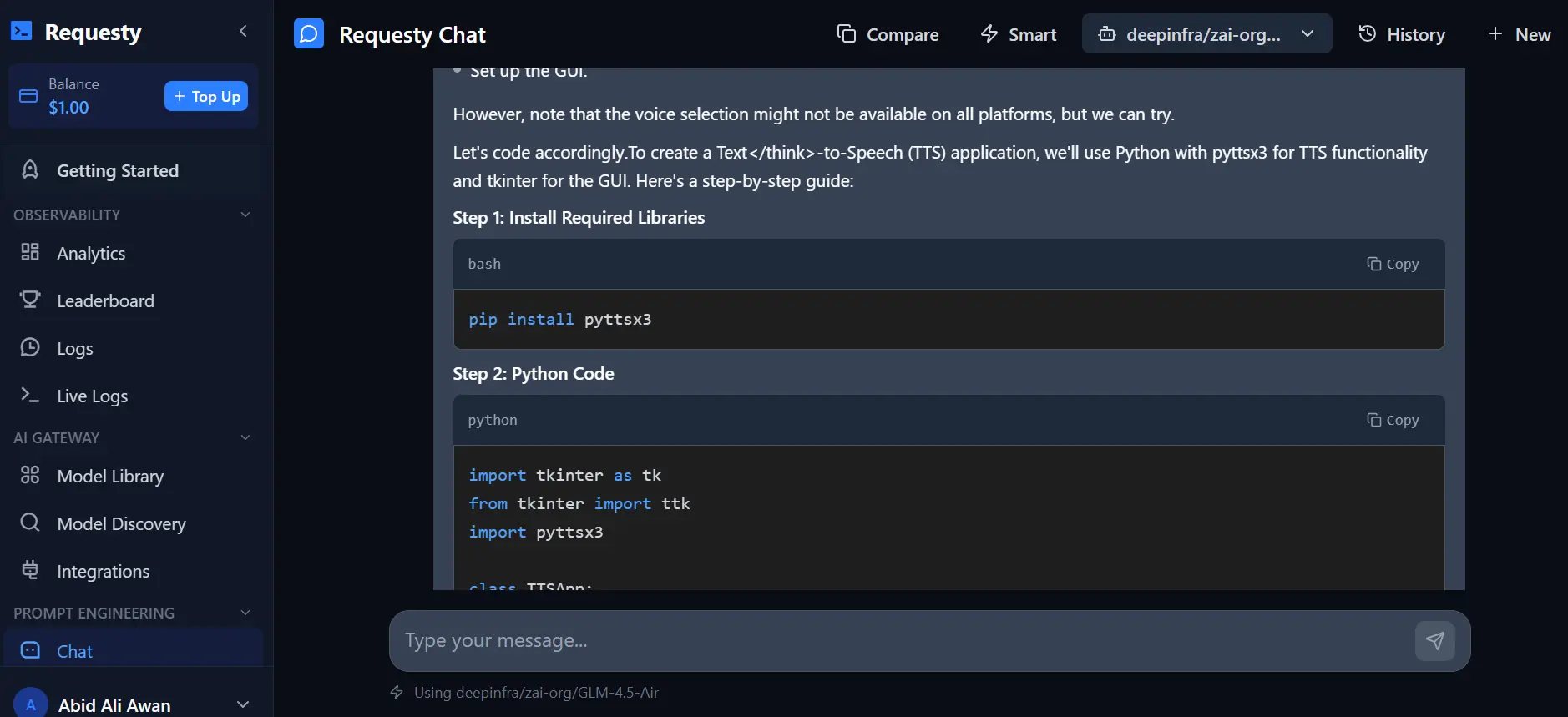

Requesty.ai is a unified LLM platform that intelligently routes your requests across over 169 models, including GLM-4.5. This helps you reduce API costs, avoid SDK lock-in, and access advanced features like efficient prompt engineering, all through a simple OpenAI-compatible interface.

You can think of Requesty.ai as a more intelligent version of OpenRouter.

You can test any model on Requesty from the chat menu. You receive $1 in credit when you sign up for an account.

Currently, you won't find the free version of the GLM-4.5 model in the model menus, but you can still access it using the API. The $1 free credit may be used up quickly to test GLM-4.5, so you may need to add $5 of credit if you want to try it.

Here is an example of the code you can use to generate the response. It's quite similar to OpenRouter. If you've already installed the openai package, you just need to change the base URL and provide your Requesty.ai API key.
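To make that difference concrete before the full example, here is a minimal sketch that captures the two marketplaces as configuration. The base URLs, model IDs, and environment variable names are the ones used in this post's examples; the `PROVIDERS` table and the `client_settings` helper are hypothetical names introduced for illustration.

```python
import os

# Endpoints and model IDs as used in this post's examples.
PROVIDERS = {
    "openrouter": {
        "base_url": "https://openrouter.ai/api/v1",
        "api_key_env": "OPENROUTER_API_KEY",
        "model": "z-ai/glm-4.5-air:free",
    },
    "requesty": {
        "base_url": "https://router.requesty.ai/v1",
        "api_key_env": "REQUESTY",
        "model": "deepinfra/zai-org/GLM-4.5-Air",
    },
}


def client_settings(provider: str) -> dict:
    """Return the OpenAI-client settings for one provider.

    Raises KeyError for an unknown provider and RuntimeError when the
    matching API key environment variable is not set.
    """
    config = PROVIDERS[provider]
    api_key = os.getenv(config["api_key_env"])
    if not api_key:
        raise RuntimeError(f"Set {config['api_key_env']} before calling {provider}")
    return {
        "base_url": config["base_url"],
        "api_key": api_key,
        "model": config["model"],
    }
```

The `base_url` and `api_key` values would go to the `OpenAI(...)` constructor and `model` to `chat.completions.create(...)`, so switching marketplaces becomes a one-word change.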

    export REQUESTY="your-requesty-api-key-here"

Then run the following script:

    import os
    import openai

    ROUTER_API_KEY = os.getenv("REQUESTY")

    # Initialize OpenAI client
    client = openai.OpenAI(
        api_key=ROUTER_API_KEY,
        base_url="https://router.requesty.ai/v1",
        default_headers={"Authorization": f"Bearer {ROUTER_API_KEY}"},
    )

    # Example request
    response = client.chat.completions.create(
        model="deepinfra/zai-org/GLM-4.5-Air",
        messages=[{"role": "user", "content": "Explain Firecrawl Search API in one line?"}],
    )

    # Split the reasoning from the answer. If there is no </think> tag,
    # reasoning is empty and the whole message is treated as the answer.
    reasoning, _, answer = response.choices[0].message.content.rpartition("</think>")

    # Print the result
    print("Content:", answer.strip())

Output:

    Content: The Firecrawl Search API is a web scraping tool that enables real-time search and structured data extraction from websites using natural language queries.

To display the reasoning part:

    print("Reasoning:", reasoning.split("<think>")[-1].strip())

Output:

    Reasoning: We are asked to explain the Firecrawl Search API in one line.
    Firecrawl is a service that can scrape and extract structured data from websites.
    The Firecrawl Search API is a part of that service, specifically designed for sea.........

Bonus: Open Source

GLM-4.5 is a truly open-source model, meaning you can freely download it from Hugging Face and use it both locally and commercially without licensing fees. However, while the model itself is free, running it comes with significant hardware costs.

Due to its massive size of 355 billion parameters, you will need at least an 8x H100 GPU cluster, along with a high-end CPU to deploy it effectively. These hardware requirements can make both local and cloud-based serving quite expensive. While many users won't be able to run this locally due to the cost and complexity, we're covering these methods so you can understand how local deployment works and what's involved.
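The 8x H100 figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below estimates the memory needed for the weights alone. It assumes 80 GB of memory per H100 and ignores the KV cache, activations, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


GPU_MEMORY_GB = 80  # assumption: H100 with 80 GB of memory
NUM_GPUS = 8
cluster_gb = GPU_MEMORY_GB * NUM_GPUS  # 640 GB in total

for precision, bytes_per_param in [("BF16", 2), ("FP8", 1), ("4-bit", 0.5)]:
    for name, size in [("GLM-4.5", 355), ("GLM-4.5-Air", 106)]:
        needed = weight_memory_gb(size, bytes_per_param)
        fits = "fits" if needed < cluster_gb else "does not fit"
        print(f"{name} {precision}: ~{needed:.0f} GB of weights, {fits} in {cluster_gb} GB")
```

Under these assumptions, the 355B model's weights at two bytes per parameter (about 710 GB) exceed the 640 GB of an 8x H100 node, while a one-byte-per-parameter copy (about 355 GB) leaves room for the KV cache. This is one reason lower-precision checkpoints and CPU offloading come up when deploying the full model.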

In this section, we will review two of the most popular ways to run GLM-4.5 locally:

- vLLM Framework: Efficient inference on powerful GPU clusters.

- MLX-LM: Optimized for Mac machines and allows for more accessible experimentation on consumer hardware.

6. Serving with vLLM

vLLM is a high-throughput, memory-efficient serving engine designed for large language models. It enables fast and scalable deployment, featuring capabilities such as continuous batching and APIs compatible with OpenAI.

The GLM-4.5 model is open-source, which means you can run it locally or on your private cloud using the vLLM inference engine.

To get started, you can download the model from Hugging Face and then start the OpenAI-compatible server using the following command:

    vllm serve zai-org/GLM-4.5-Air \
        --tensor-parallel-size 8 \
        --tool-call-parser glm45 \
        --reasoning-parser glm45 \
        --enable-auto-tool-choice \
        --served-model-name glm-4.5-air

Note: To run this model with vLLM as configured above, you will need a node with 8 GPUs. If GPU memory is insufficient, enable CPU offloading with the option --cpu-offload-gb 16. For FlashInfer issues, set the environment variable VLLM_ATTENTION_BACKEND=XFORMERS or adjust TORCH_CUDA_ARCH_LIST according to your GPU architecture.
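Once the server is running, any OpenAI-compatible client can talk to it. A minimal sketch follows, assuming the server listens on vLLM's default address `http://localhost:8000/v1` and was started with `--served-model-name glm-4.5-air` as in the command above. The helper names are hypothetical, and the API key is a placeholder because a local vLLM server only checks it when one has been configured.

```python
LOCAL_BASE_URL = "http://localhost:8000/v1"  # assumption: vLLM's default host and port
LOCAL_MODEL = "glm-4.5-air"  # must match --served-model-name


def local_chat_kwargs(prompt: str) -> dict:
    """Build the arguments for a chat completion against the local server."""
    return {
        "model": LOCAL_MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }


def ask_local(prompt: str) -> str:
    """Send the prompt to the local vLLM server and return the reply text."""
    # Imported here so the helper above can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI(base_url=LOCAL_BASE_URL, api_key="EMPTY")
    response = client.chat.completions.create(**local_chat_kwargs(prompt))
    return response.choices[0].message.content


# Requires a running server:
# print(ask_local("Summarize what vLLM does in one sentence."))
```

Because the interface matches the marketplace examples earlier in this post, the same application code can be pointed at a hosted provider or at your own hardware by changing only the base URL and model name.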

7. Running Locally with MLX-LM

MLX-LM is an open-source framework that enables efficient inference and fine-tuning of large language models (LLMs) directly on Apple Silicon Macs, making it easy to run powerful models locally.

The MLX format quantized version of the GLM-4.5 model is available on Hugging Face under the repository mlx-community/GLM-4.5-4bit. You can install the mlx-lm library using the following command.

    pip install mlx-lm

Then run the script to download and load the model to generate responses based on your prompts.

    from mlx_lm import load, generate

    # Load the model and tokenizer
    model, tokenizer = load("mlx-community/GLM-4.5-4bit")

    # Define your prompt
    prompt = "hello"

    # Apply chat template if available
    if tokenizer.chat_template is not None:
        messages = [{"role": "user", "content": prompt}]
        prompt = tokenizer.apply_chat_template(
            messages, add_generation_prompt=True
        )

    # Generate a response
    response = generate(model, tokenizer, prompt=prompt, verbose=True)

Conclusion

With these five easy approaches, you have multiple paths to working with GLM-4.5. For most people, the free web interfaces are perfect for experimenting. When you're ready to build applications, the API options give you flexibility, whether you choose the official Z.ai API for full functionality or explore the LLM marketplaces for competitive pricing and easy integration.

For those who want complete control, you can always run the model yourself, which is the great advantage of open source.

We're seeing an exciting trend where open-source models are competing directly with proprietary models and disrupting the AI space. With multiple access options, developers can choose whatever fits their project needs and budget.

Next Steps with GLM-4.5

- Integrate GLM-4.5 into MCP servers for Cursor for enhanced development workflows

- Build visual AI applications with our LangFlow tutorial

- Automate workflows using n8n and Firecrawl

- Explore web scraping libraries to collect training data from the web