With its clear and readable syntax, Python opens doors to creativity and innovation. From automating a simple spreadsheet to deploying a sophisticated production API or even creating an intelligent AI agent, the possibilities are endless. Python is more than just a programming language. It's a universal problem-solving tool that can be applied in countless ways.

In this article, you'll find a collection of Python projects, each accompanied by step-by-step guides. These projects are categorized into Beginner, Intermediate, Advanced, and Production-Ready levels, enabling you to enhance your skills gradually. By the end, you'll have a portfolio that showcases your ability to build and deploy applications.

Why Learn Python?

Python is one of the most popular programming languages, known for its clean and beginner-friendly syntax, as well as its extensive open-source ecosystem that enables rapid development. It is a versatile, general-purpose language used in various fields, including data science, backend and web development, machine learning and AI, data engineering, and MLOps.

Python is an interpreted language, which generally makes pure-Python code slower than equivalent code in compiled languages. However, Python's ecosystem includes many optimized libraries that use compiled backends (written in C or C++) for performance-critical operations. This hybrid approach allows developers to maintain Python's ease of use and productivity while still achieving the speed needed for computationally intensive tasks like machine learning training and inference.

Beginner-Friendly Python Projects

These beginner Python projects allow you to learn by quickly building fun applications, such as automated emails, a simple to-do list GUI, a basic password manager, or quick stock forecasting via an API. You can get hands-on practice without becoming overwhelmed by complexity, which makes them a good fit for anyone just starting a Python tutorial.

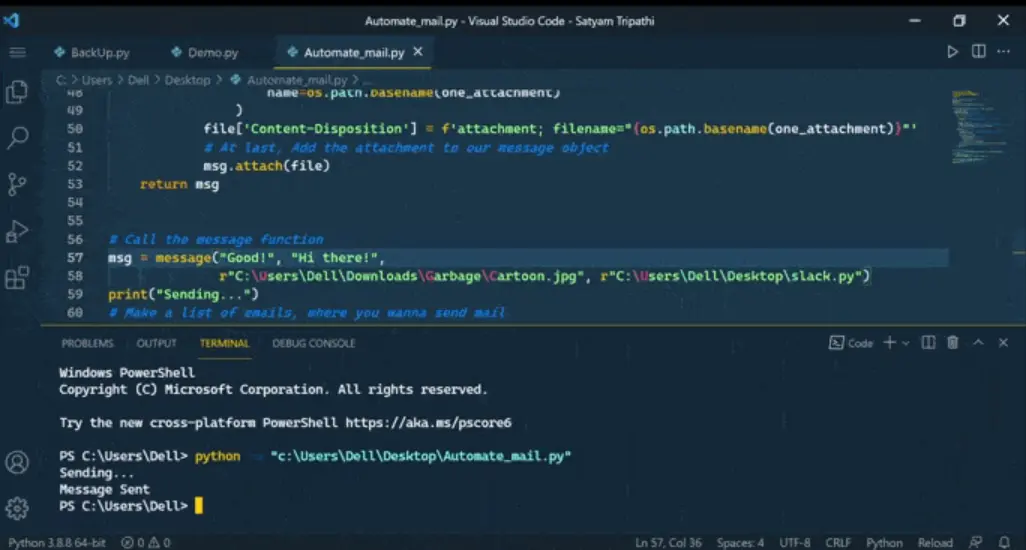

This project guides you in building an automated messaging workflow that composes rich messages, adds optional media and files, delivers them to multiple recipients, and can run on a schedule. Ultimately, you'll transform a manual communication task into a reliable and repeatable process.

You'll get to practice foundational skills for many Python projects, including automation, working with external services, organizing reusable code, managing files and attachments, handling recipient lists, scheduling recurring tasks, and troubleshooting issues.

What You'll Build:

- SMTP connection setup with Gmail using TLS encryption and authentication

- Rich email composition with text, HTML formatting, images, and file attachments

- Multiple recipient management with personalized messaging

- Scheduled email automation using cron-like scheduling

Skills You'll Practice:

- Working with external services and SMTP protocols

- File handling and email formatting with MIME components

- Basic scheduling and automation patterns

- Secure credential management and authentication

Tools Used: smtplib, email.mime, schedule, Gmail SMTP, os for file handling

Link to Guide: How to Send Automated Email Messages in Python
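To give a feel for the moving parts, here is a minimal sketch of composing and sending a message with `smtplib` and `email.mime`. It is not the guide's code: the function names `build_message` and `send_message` are hypothetical, and it assumes Gmail's standard SMTP endpoint (`smtp.gmail.com`, port 587 with STARTTLS) and that `password` is a credential your account accepts for SMTP, such as an app password.

```python
import os
import smtplib
from email.mime.application import MIMEApplication
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(sender, recipient, subject, text, html=None, attachments=()):
    """Compose a multipart email with plain text, optional HTML, and file attachments."""
    message = MIMEMultipart("mixed")
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject

    # Plain text and HTML live in an "alternative" part so the mail client picks one.
    body = MIMEMultipart("alternative")
    body.attach(MIMEText(text, "plain"))
    if html:
        body.attach(MIMEText(html, "html"))
    message.attach(body)

    # Each file becomes its own attachment part.
    for path in attachments:
        filename = os.path.basename(path)
        with open(path, "rb") as handle:
            part = MIMEApplication(handle.read(), Name=filename)
        part["Content-Disposition"] = f'attachment; filename="{filename}"'
        message.attach(part)
    return message


def send_message(message, password, host="smtp.gmail.com", port=587):
    """Send over SMTP, upgrading the connection to TLS before logging in."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(message["From"], password)
        server.send_message(message)
```

Keeping composition separate from sending means you can loop over a recipient list, call `build_message` once per person with personalized text, and test the formatting without touching the network.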

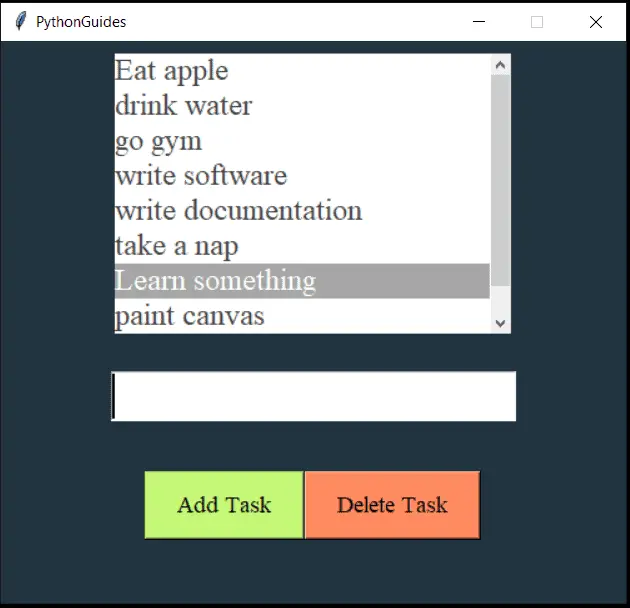

You'll build a simple desktop to-do app with a clean interface that allows users to add, remove, and mark tasks as complete. The app features a professional layout with listbox display, scrollbar navigation, and intuitive add/delete functionality, providing hands-on experience with desktop application development.

By the end of this project, you'll have practice with GUI fundamentals, event-driven problem-solving, layout and state management, user input validation and feedback, basic data handling for task lists, organizing reusable functions, and designing clear, responsive interactions.

What You'll Build:

- Desktop GUI with Tkinter widgets including Listbox, Entry, and Buttons

- Scrollable task display with proper frame-based layout organization

- Task management system with add, delete, and selection functionality

- Event-driven interface with user input validation and feedback

Skills You'll Practice:

- GUI programming fundamentals and widget management

- Event-driven programming and user interaction handling

- Layout design with frames and geometry managers

- Basic data structures and state management

Tools Used: tkinter, tkinter.messagebox, Python built-in data structures

Link to Guide: Build a ToDo List with Python Tkinter

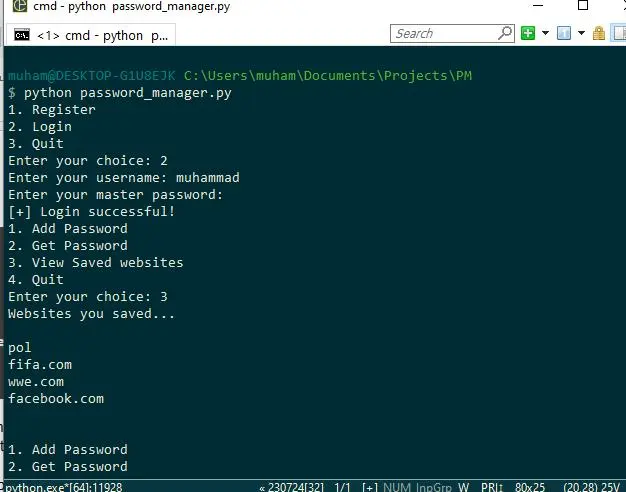

You'll be building a personal password manager that allows users to sign in with a master login, securely store and organize credentials, retrieve and copy passwords when needed, and review saved accounts. This system will protect data through encryption and safeguard access using hashed authentication.

This project teaches secure app design and security concepts essential for any application handling sensitive data including password hashing, symmetric encryption, and secure key management. You'll also develop skills in data persistence, input handling, user flows, error handling, and edge-case thinking.

What You'll Build:

- Master password authentication with SHA-256 hashing

- Symmetric encryption system using Fernet for password storage

- JSON-based secure storage with encrypted password vault

- Command-line interface with clipboard integration for easy access

Skills You'll Practice:

- Cryptographic programming with symmetric encryption

- Secure authentication and password hashing techniques

- File-based data persistence with JSON

- Command-line interface design and user experience

Tools Used: cryptography, hashlib, getpass, pyperclip, json, os

Link to Guide: How to Build a Password Manager in Python
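As a rough illustration of the master-password step, the sketch below hashes and verifies a password with `hashlib`. The function names are hypothetical, and the random salt and constant-time comparison are additions of this sketch rather than something the guide is confirmed to do. For real-world use, a deliberately slow key-derivation function such as `hashlib.pbkdf2_hmac` is generally a stronger choice than a single SHA-256 pass.

```python
import hashlib
import hmac
import os


def hash_master_password(password, salt=None):
    """Return (salt, hex digest) for a master password using salted SHA-256."""
    # A fresh random salt is generated on first use and stored next to the digest.
    salt = salt if salt is not None else os.urandom(16)
    digest = hashlib.sha256(salt + password.encode("utf-8")).hexdigest()
    return salt, digest


def verify_master_password(password, salt, expected_digest):
    """Re-hash the attempt with the stored salt and compare in constant time."""
    _, digest = hash_master_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Only the salt and digest need to be saved; the master password itself is never written to disk. The stored credentials would then be encrypted separately, for example with Fernet from the `cryptography` package listed above.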

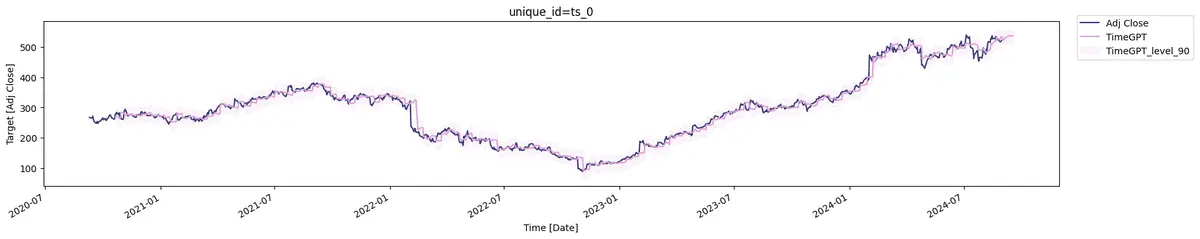

Create a time-series forecasting system using cutting-edge foundation models to predict stock prices. You'll learn to work with financial APIs, implement both zero-shot and fine-tuned forecasting approaches, and visualize predictions with confidence intervals.

This project introduces modern AI forecasting techniques and financial data analysis, demonstrating how foundation models can be applied to time-series prediction without traditional model training.

What You'll Build:

- Financial data integration with time-series APIs

- Zero-shot forecasting using TimeGPT foundation model

- Advanced fine-tuned forecasting with custom parameters

- Interactive visualizations with prediction intervals and uncertainty bands

Skills You'll Practice:

- Time-series data preparation and formatting

- API integration with financial data services

- AI model integration and prompt engineering

- Data visualization and statistical analysis

Tools Used: TimeGPT API, pandas, matplotlib, requests, financial data APIs

Link to Guide: Stock Market Forecasting with TimeGPT

Intermediate Python Projects

These projects allow you to quickly develop real-world systems, such as creating a dermatology Q&A dataset, analyzing medical prescriptions, detecting changes through web scraping, and automating price tracking. You'll learn to utilize AI APIs and web scraping APIs, build user interfaces with the Gradio web framework, integrate applications with Discord, and enhance your skills in large language models (LLMs), automation, API integrations, and alerting.

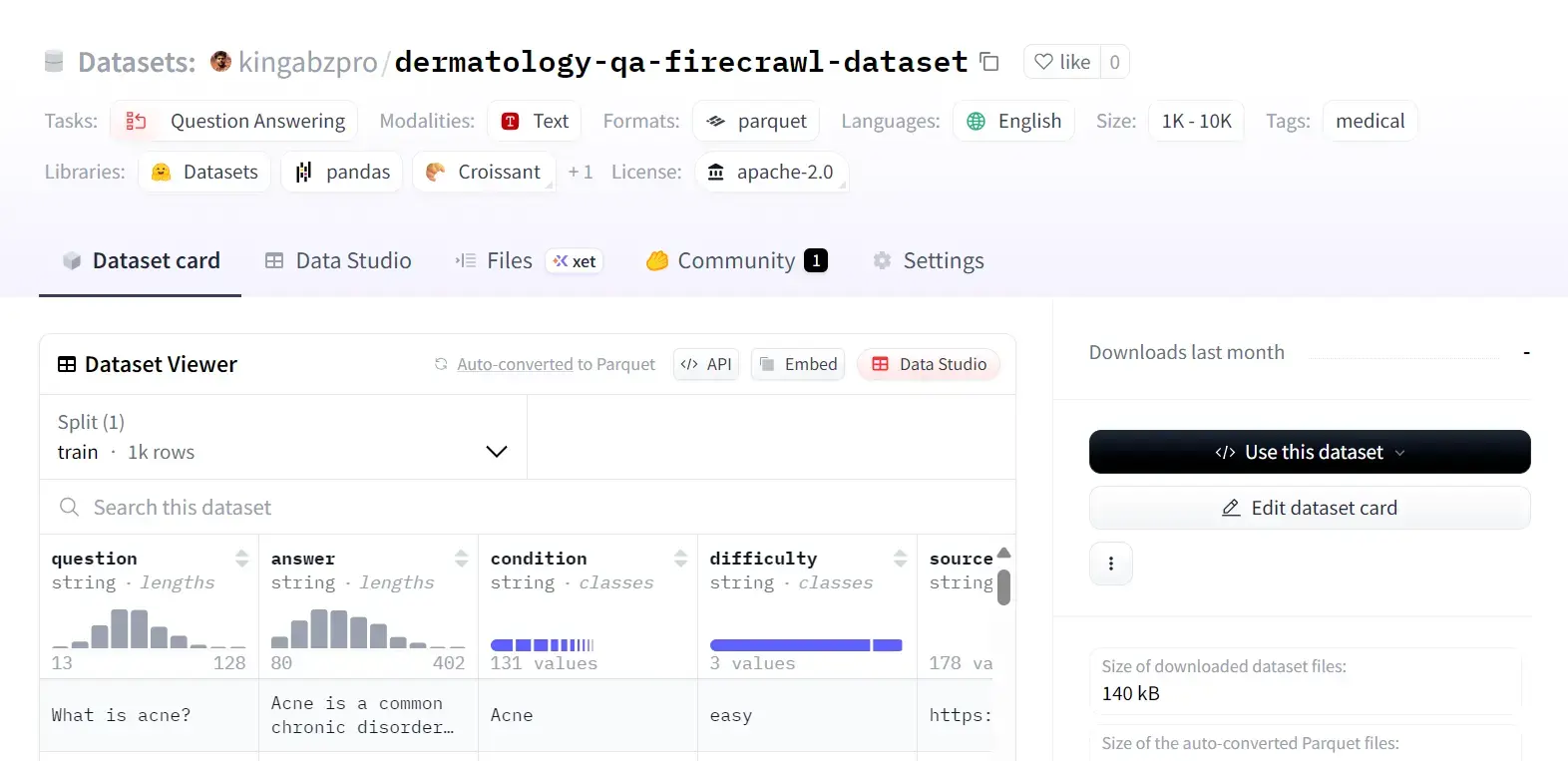

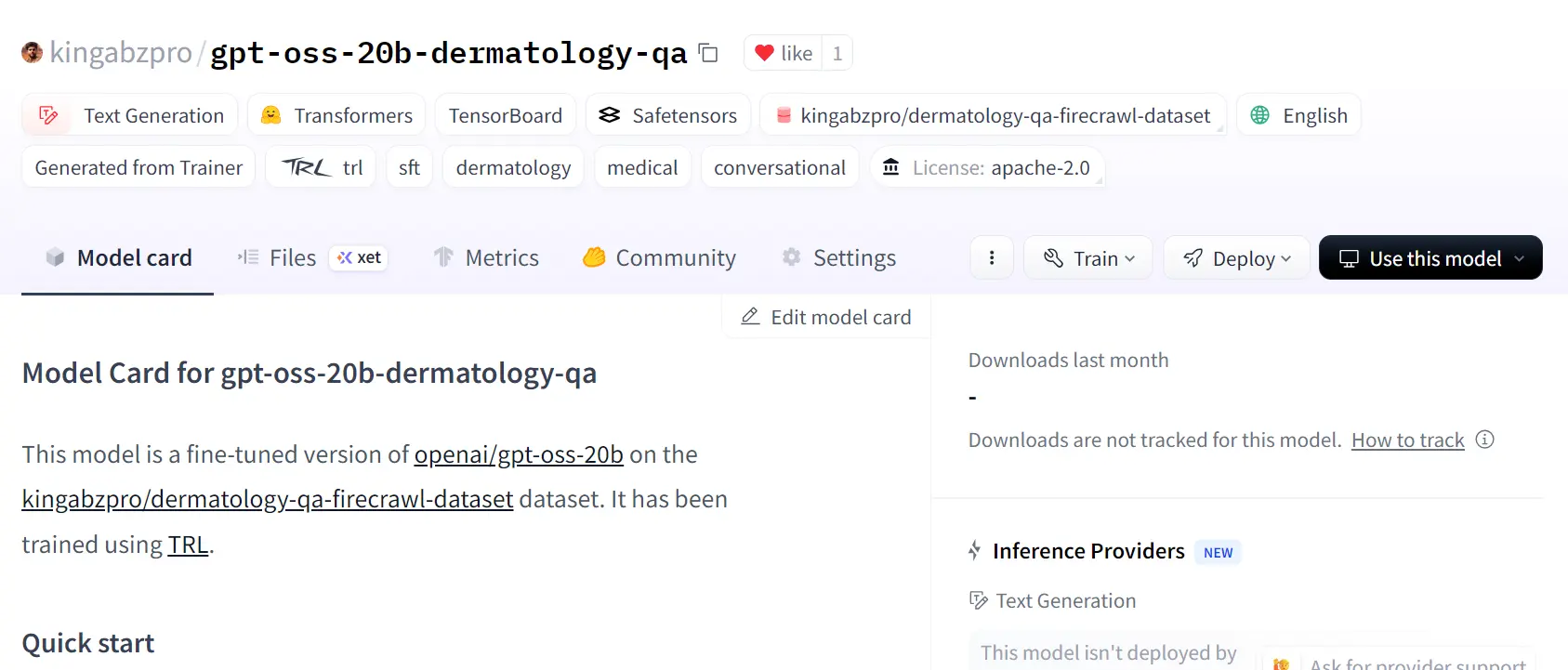

Build a cutting-edge AI dataset creation pipeline using OpenAI's GPT-OSS 120B model and the revolutionary Harmony prompting format. This project demonstrates how modern AI can automate the expensive and time-consuming process of creating high-quality training datasets by combining intelligent web discovery with structured data generation.

You'll create an end-to-end system that automatically discovers dermatology content across trusted medical websites using Firecrawl's Search API, then transforms that raw data into structured Q&A pairs using GPT-OSS 120B via Groq Cloud. The resulting dataset can be used for fine-tuning language models or training domain-specific AI systems.

This project represents the frontier of automated dataset creation, combining multiple cutting-edge AI services into a production-ready system. You'll learn techniques used by leading AI companies to create training data at scale while building a valuable resource for the medical AI community.

What You'll Build:

- Intelligent web discovery using Firecrawl's AI-powered search across medical websites

- Harmony-format prompting using OpenAI's newest structured conversation system

- Robust data pipeline with atomic checkpointing and fault tolerance

- Multi-API coordination handling rate limits across Firecrawl and Groq services

- Advanced quality validation using Pydantic schemas and custom business rules

- Easy dataset publishing to Hugging Face Hub for public access

Skills You'll Practice:

- Async Python programming including context managers and concurrent processing

- Advanced error handling with retry strategies

- Data validation frameworks using Pydantic for schema enforcement

- Dataset publishing workflows including metadata and version control

Real-World Applications:

- Fine-tuning datasets for domain-specific language models

- Training data generation for medical AI applications

- Content creation pipelines for educational platforms

- Research datasets for academic and commercial AI projects

Tools Used: firecrawl-py, openai-harmony, groq, pydantic, tenacity, datasets, Firecrawl Search API, Groq Cloud, Hugging Face Hub

Link to Guide: How to Create a Dermatology Q&A Dataset with OpenAI Harmony & Firecrawl Search

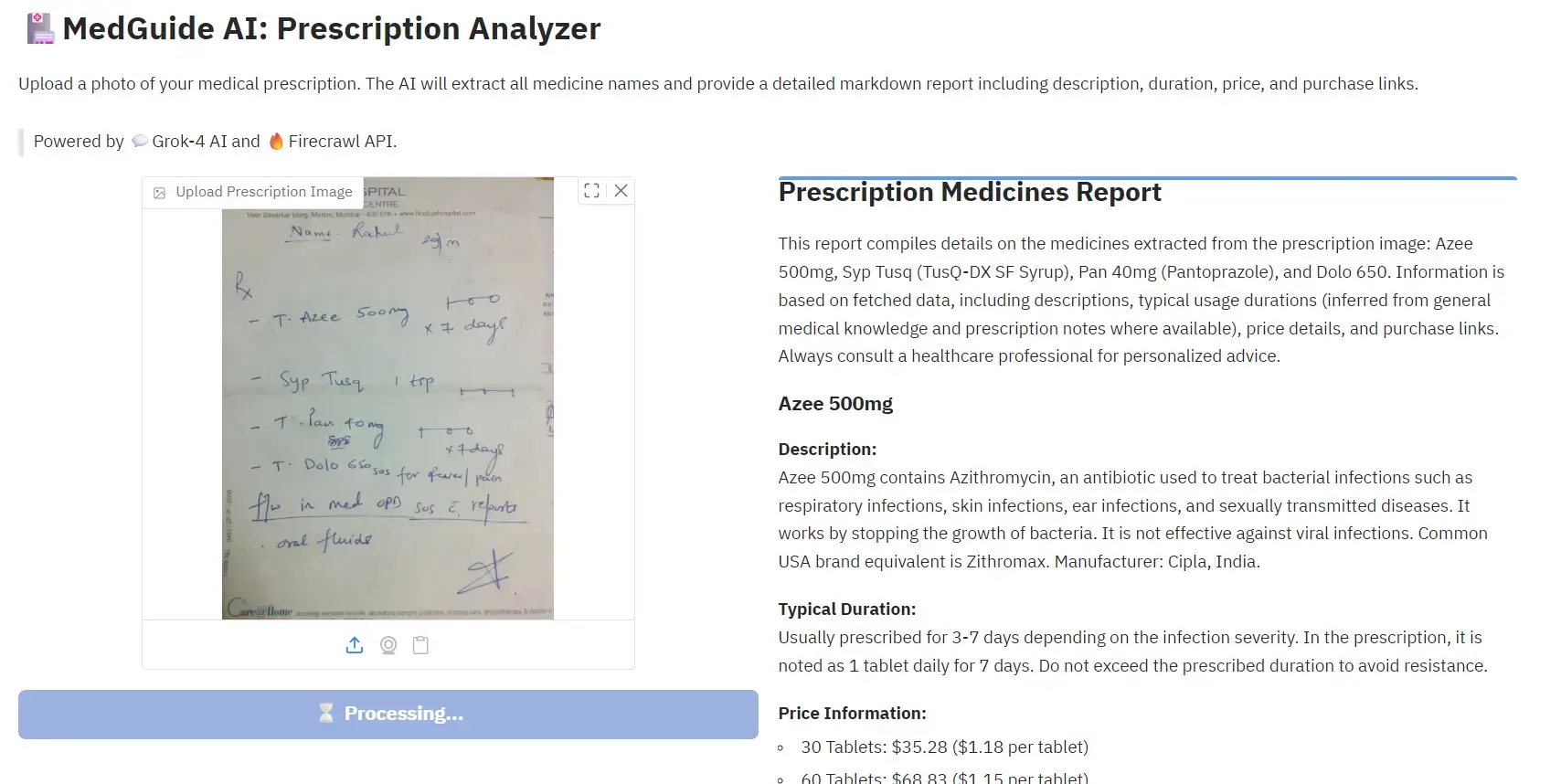

Develop an AI-powered prescription analyzer that allows users to upload an image of a prescription. The system will extract medication details and enhance the information with pricing and availability sourced from the web. It will then generate a clean, shareable report that includes helpful links.

By completing this project, you'll develop valuable skills in defining multimodal problems, orchestrating tool-driven workflows, retrieving and structuring web data, managing concurrency and error handling, producing clear user-ready summaries, and creating a lightweight user interface, all without getting lost in implementation details.

What You'll Build:

- Multimodal AI integration using Grok 4 for prescription image analysis

- Automated web data retrieval for medication pricing and availability

- Concurrent processing system for handling multiple data sources

- Professional report generation with formatted output and actionable links

Skills You'll Practice:

- Multimodal AI programming with image and text processing

- Web scraping and data enrichment workflows

- Concurrent programming and error handling

Real-World Applications:

- Healthcare technology and patient assistance tools

- Pharmacy management and pricing comparison systems

- Medical data processing and analysis platforms

Tools Used: Grok 4 API, web scraping libraries, image processing tools, concurrent processing frameworks

Link to Guide: Building a Medical AI Application with Grok 4

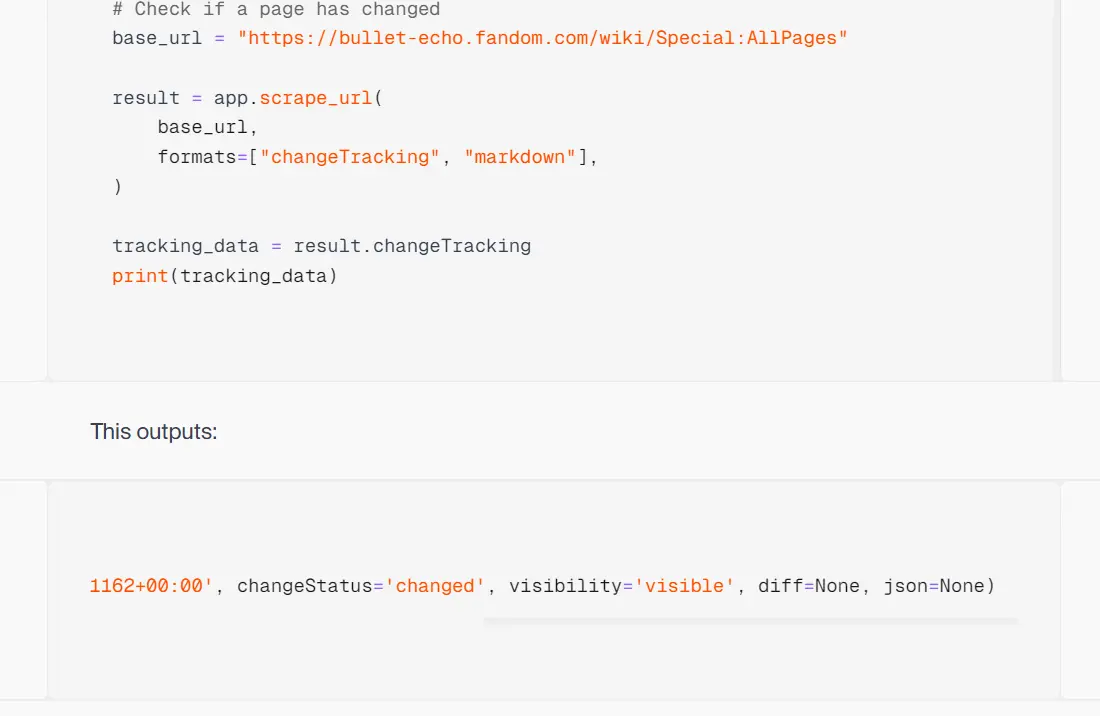

Create an automated web change-monitoring pipeline that ensures a large collection of web pages remains up to date by detecting edits, additions, and removals. It efficiently fetches only the content that has changed to save time and resources.

Through this project, you'll develop skills in designing incremental data pipelines, implementing change detection, orchestrating scheduled jobs, managing parallel workloads and partial results, enforcing basic quality checks and deduplication, and planning production-ready storage.

What You'll Build:

- Incremental web monitoring with change detection algorithms

- Parallel processing system for handling multiple website sources

- Quality validation and deduplication systems

- Production-ready storage and scheduling infrastructure

Skills You'll Practice:

- Advanced web scraping with change detection

- Parallel processing and concurrent workflow management

- Data pipeline design with quality controls

- Automated scheduling and production deployment

Real-World Applications:

- Competitive intelligence and market monitoring

- Content management and SEO tracking systems

- News and information aggregation platforms

Tools Used: Firecrawl API, scheduling frameworks, parallel processing libraries, data storage systems

Link to Guide: Web Scraping Change Detection with Firecrawl
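The core idea of change detection can be sketched without any scraping service: keep a fingerprint of each page from the previous run and compare it with the current one. This is a generic hash-based approach under simple assumptions (page content is already fetched as text), not a description of how Firecrawl detects changes; `fingerprint` and `detect_changes` are hypothetical names.

```python
import hashlib


def fingerprint(content):
    """Return a stable SHA-256 fingerprint for a page's text content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def detect_changes(previous_hashes, current_pages):
    """Compare a stored {url: hash} snapshot with freshly fetched {url: content} pages.

    Returns a report of added, removed, and changed URLs plus the new snapshot
    to persist for the next run.
    """
    current_hashes = {url: fingerprint(text) for url, text in current_pages.items()}
    report = {
        "added": sorted(url for url in current_hashes if url not in previous_hashes),
        "removed": sorted(url for url in previous_hashes if url not in current_hashes),
        "changed": sorted(
            url
            for url, digest in current_hashes.items()
            if url in previous_hashes and previous_hashes[url] != digest
        ),
    }
    return report, current_hashes
```

Only the URLs in `added` and `changed` need further processing, which is what keeps an incremental pipeline cheap compared with re-processing every page on each run.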

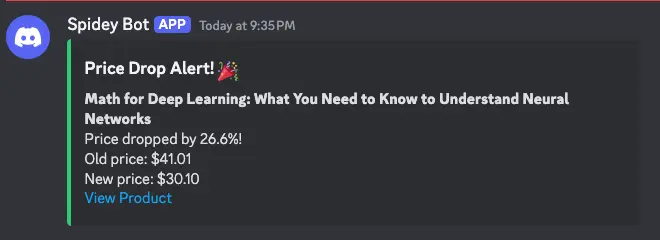

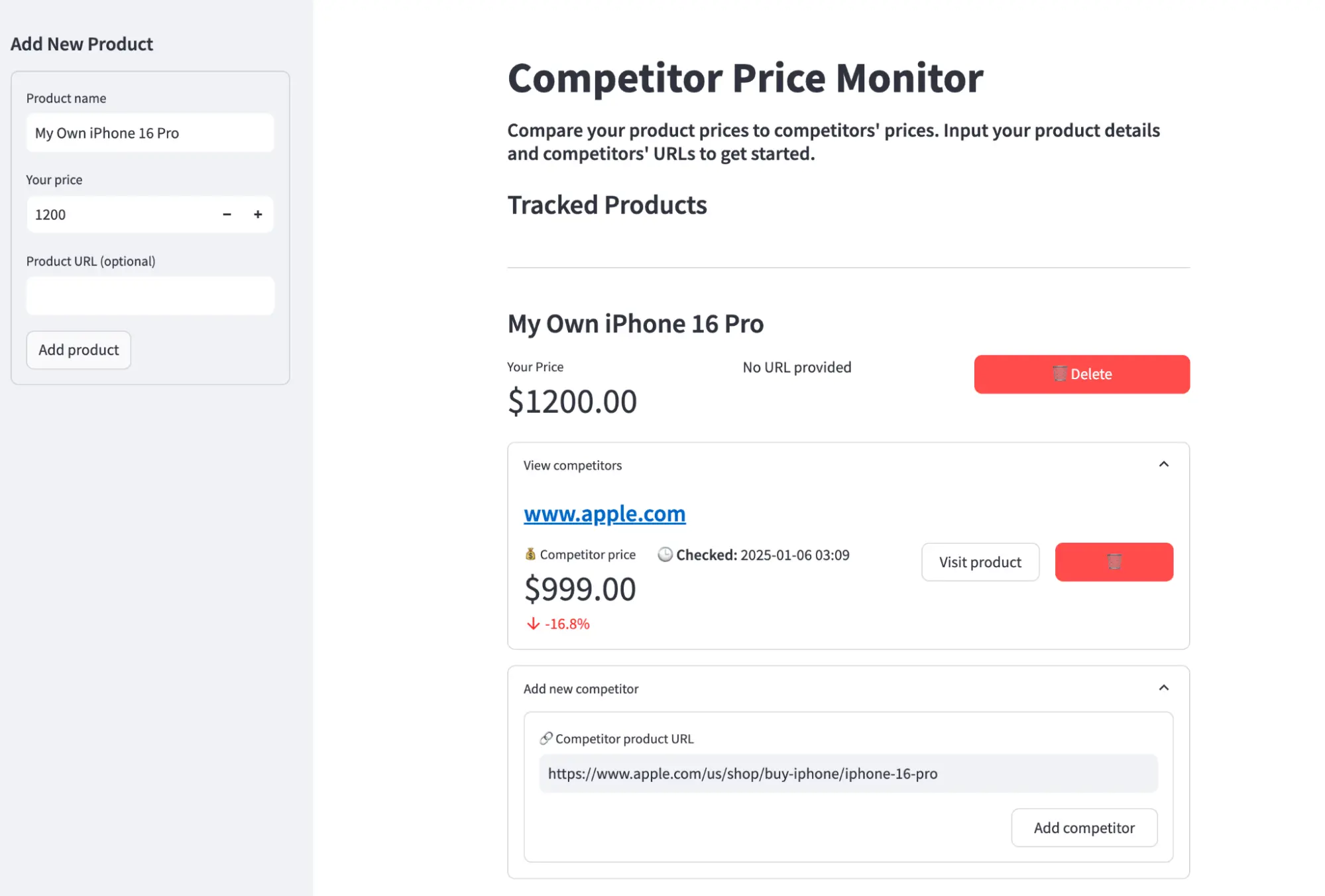

Create an automated price-tracking app that monitors products across e-commerce sites, records their price history, and alerts you when a price drop meets your specified threshold. The app will feature a user-friendly interface and operate on a schedule.

Through this project, you'll gain skills in web data collection, input validation, database design, trend visualization, scheduled automation, notifications, and basic UI and deployment workflows.

What You'll Build:

- Multi-site product price monitoring with automated data collection

- Historical price tracking with trend visualization and analytics

- Threshold-based alerting system with Discord webhook notifications

- Scheduled automation with configurable monitoring intervals

- User-friendly interface for managing products and viewing price history

- Database design for efficient price data storage and retrieval

Skills You'll Practice:

- Web scraping techniques for e-commerce price extraction

- Database design and time-series data management

- Automated scheduling and background task processing

- Webhook integration and notification system development

- Data visualization and trend analysis

- User interface development and user experience design

Real-World Applications:

- Personal shopping and deal-finding automation

- E-commerce competitive analysis tools

- Inventory management and pricing strategy systems

- Consumer price tracking and budgeting applications

Tools Used: Web scraping libraries, database systems, discord-webhook, scheduling frameworks, visualization libraries, UI frameworks

Link to Guide: Building an Automated Price Tracking Tool
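The history-plus-threshold logic at the heart of a price tracker is small enough to sketch with the standard library. The example below assumes SQLite for storage and a relative drop threshold; the table name, function names, and the 10% default are illustrative choices, not taken from the guide. Scraping the price and posting to a Discord webhook would sit on either side of this function.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a SQLite database with a simple price history table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS price_history ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "product TEXT NOT NULL, "
        "price REAL NOT NULL, "
        "checked_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def record_price(conn, product, price, drop_threshold=0.10):
    """Store a price; return True if it fell by at least drop_threshold vs. the last one."""
    row = conn.execute(
        "SELECT price FROM price_history WHERE product = ? ORDER BY id DESC LIMIT 1",
        (product,),
    ).fetchone()
    conn.execute(
        "INSERT INTO price_history (product, price) VALUES (?, ?)", (product, price)
    )
    conn.commit()
    # No alert on the first observation or when the previous price is unusable.
    if row is None or row[0] <= 0:
        return False
    return (row[0] - price) / row[0] >= drop_threshold
```

A scheduled job would call `record_price` after each scrape and send a notification only when it returns `True`, while the accumulated rows feed the trend charts.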

Advanced Python Projects

These projects enable you to quickly build complex systems, such as fine-tuning GPT-OSS 20B, creating an automated competitor price monitoring system, developing a bulk sales lead extractor with AI, and setting up an image classification inference API using FastAPI. Through these experiences, you'll practice model training and serving, develop robust data pipelines, implement alerting systems, design user interfaces, and create scalable automation solutions.

In this project, you'll fine-tune a general AI model into a specialized dermatology assistant on a curated Q&A dataset using OpenAI's open-source GPT-OSS 20B model with LoRA (Low-Rank Adaptation) techniques. This process will involve a structured approach that includes setting up the model, conducting baseline tests, training, evaluating performance, and making inferences.

You'll learn how to prepare datasets and format prompts, perform parameter-efficient fine-tuning, set up and monitor training, evaluate performance, merge adapters, and deploy the model for reliable inference.

What You'll Build:

- Dataset preparation and prompt formatting for fine-tuning workflows

- LoRA-based parameter-efficient fine-tuning implementation

- Model training pipeline with monitoring and evaluation systems

- Performance benchmarking and baseline comparison frameworks

- Model merging and deployment-ready inference systems

Skills You'll Practice:

- Advanced machine learning model training and fine-tuning techniques in Python

- Parameter-efficient training methods and memory optimization

- Model evaluation and performance benchmarking

- MLOps practices including experiment tracking and model versioning

- Production model deployment and inference optimization

Real-World Applications:

- Domain-specific AI assistant development

- Custom chatbot and conversational AI systems

- Specialized content generation platforms

- Research and academic AI model development

Tools Used: transformers, peft, torch, datasets, wandb, LoRA adapters, Hugging Face ecosystem

Link to Guide: Fine-Tune OpenAI GPT-OSS 20B on the Dermatology Dataset

Develop an automated competitor price-monitoring system that tracks products across multiple sites, extracts prices using AI, stores the results, and displays real-time comparisons on a simple dashboard with scheduled updates.

Gain skills in web scraping, data extraction, database modeling, data persistence, dashboard creation, scheduling automated tasks, managing continuous integration workflows, and designing a reliable end-to-end data pipeline.

What You'll Build:

- Multi-site web scraping system with AI-powered data extraction

- Robust database architecture for price history and trend analysis

- Real-time dashboard with interactive visualizations and alerts

- Automated scheduling system with continuous integration workflows

- End-to-end data pipeline with quality monitoring and error handling

Skills You'll Practice:

- Enterprise-scale web scraping architecture and anti-detection techniques

- AI-powered data extraction and natural language processing

- Database design and optimization for time-series data

- Business intelligence dashboard development and data visualization

- Production system deployment with monitoring and alerting

Real-World Applications:

- E-commerce competitive intelligence platforms

- Pricing strategy and market analysis tools

- Retail management and inventory optimization systems

- Business intelligence and market research applications

Tools Used: Advanced web scraping frameworks, AI extraction APIs, database systems, dashboard frameworks, scheduling platforms

Link to Guide: How to Build an Automated Competitor Price Monitoring System with Python

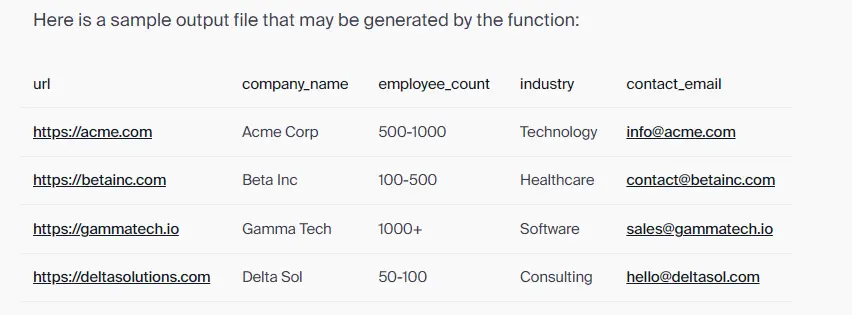

This project involves creating an automated lead-extraction application that allows users to upload a list of websites, select specific fields to collect, and receive a clean, structured file through a simple and user-friendly interface that can scale to handle many sites.

Throughout this project, you'll develop skills in advanced data extraction techniques, defining data schemas, extracting and validating web data, designing forms and workflows, managing batching and concurrency, cleaning and exporting results, implementing basic error handling and quality checks, and preparing a lightweight application for sharing or deployment.

What You'll Build:

- Scalable web scraping system with concurrent processing for bulk operations

- AI-powered data extraction with customizable field selection and validation

- Professional web interface with batch upload and progress tracking

- Advanced data cleaning and export systems with multiple format support

- Error handling and quality assurance systems

Skills You'll Practice:

- Large-scale concurrent programming and batch processing techniques

- AI-powered data extraction with schema validation and quality controls

- Professional web application development with user-friendly interfaces

- Data pipeline optimization and performance tuning for high-volume processing

Real-World Applications:

- Sales and marketing lead generation platforms

- Business intelligence and market research tools

- Customer acquisition and prospecting systems

- Data enrichment and contact management platforms

Tools Used: AI extraction APIs, concurrent processing frameworks, Python web framework libraries, data validation systems, export utilities

Link to Guide: How to Build a Bulk Sales Lead Extractor in Python Using AI

This project focuses on training an image classifier and transforming it into a production-ready API for real-time predictions. You'll gain skills in data preparation, model fine-tuning and evaluation, packaging models for inference, designing clean API endpoints, handling file uploads and validation, optimizing performance and concurrency, and preparing the service for deployment.

This project covers the full machine learning operations (MLOps) pipeline from model training through production deployment, demonstrating industry-standard practices for serving ML models at scale.

What You'll Build:

- Custom image classification model training and optimization pipeline

- High-performance REST API with FastAPI for real-time inference serving

- File upload handling with validation and preprocessing systems

- Production deployment with containerization and scaling capabilities

- Performance monitoring and optimization with caching and load balancing

Skills You'll Practice:

- End-to-end machine learning model development and training

- Production API development with performance optimization and error handling

- Model serving architecture and inference pipeline optimization

- Container deployment and cloud infrastructure management

- MLOps practices including monitoring, logging, and continuous deployment

Real-World Applications:

- Computer vision and image analysis platforms

- Content moderation and automated tagging systems

- Medical imaging and diagnostic assistance tools

- Quality control and automated inspection systems

Tools Used: fastapi, torch/tensorflow, pillow, uvicorn, containerization tools, cloud deployment platforms

Link to Guide: Image Classification Inference with FastAPI

Production-Ready Python Projects

These projects enable you to quickly develop production-grade applications. You'll learn how to deploy robust web scrapers, create an AI resume-job matching app, and run large language models in the cloud. This experience will help you practice key skills in deployment, scalability, and reliability.

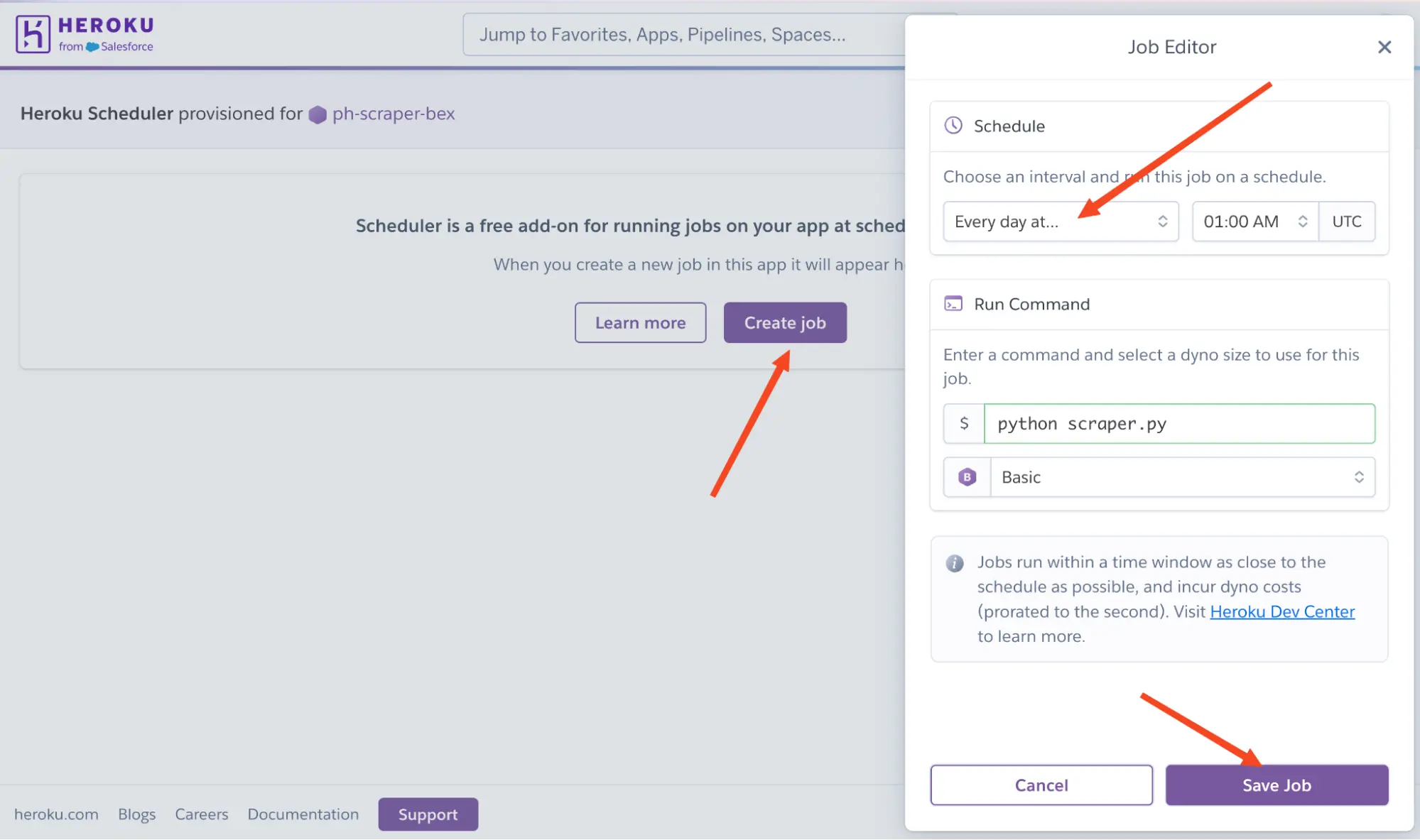

Deploy web scrapers to the cloud to ensure they run automatically, operate 24/7, scale as needed, and do not depend on your laptop. This can be achieved using free or low-cost options while implementing best practices for reliability and security.

In this project, you'll learn how to choose a hosting method, schedule scraper runs, manage sensitive information, add retries and logging, set up alerts, validate and store data effectively, and package a scraper for continuous integration/deployment (CI/CD) and straightforward deployments.

What You'll Build:

- Cloud deployment infrastructure with auto-scaling and load balancing

- CI/CD pipeline with automated testing, deployment, and rollback capabilities

- Comprehensive monitoring and alerting systems with performance dashboards

- Advanced retry mechanisms and error recovery with data validation systems

- Security implementation with credential management and access controls

- Production logging and analytics with data persistence and backup strategies

Skills You'll Practice:

- DevOps and cloud infrastructure management for production systems

- CI/CD pipeline design and automated deployment strategies

- Production monitoring, logging, and performance optimization techniques

- Security best practices and credential management for enterprise applications

- System reliability engineering and error recovery mechanisms

- Database management and data pipeline optimization for high-volume operations

Real-World Applications:

- Enterprise data collection and business intelligence platforms

- Market research and competitive analysis systems

- Content aggregation and news monitoring services

- E-commerce and pricing intelligence solutions

Tools Used: Cloud platforms (AWS/GCP/Azure), Docker, Kubernetes, CI/CD tools, monitoring systems, database platforms

Link to Guide: How to Deploy Python Web Scrapers
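Retries and logging are the pieces most scrapers need first once they run unattended. The following is a minimal sketch of retrying with exponential backoff using only the standard library; `run_with_retries` and its parameters are hypothetical, and the `sleep` argument exists only so the wait can be swapped out in tests.

```python
import logging
import time

logger = logging.getLogger("scraper")


def run_with_retries(task, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call task(); on failure, log the error and wait longer before each retry.

    The delay doubles each time: base_delay, 2 * base_delay, 4 * base_delay, ...
    The last failure is re-raised so the scheduler or CI job can mark the run as failed.
    """
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

Wrapping each scrape in a call like `run_with_retries(lambda: scrape(url))`, where `scrape` stands in for your own function, lets transient network errors recover on their own while persistent failures still surface in the logs and alerts.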

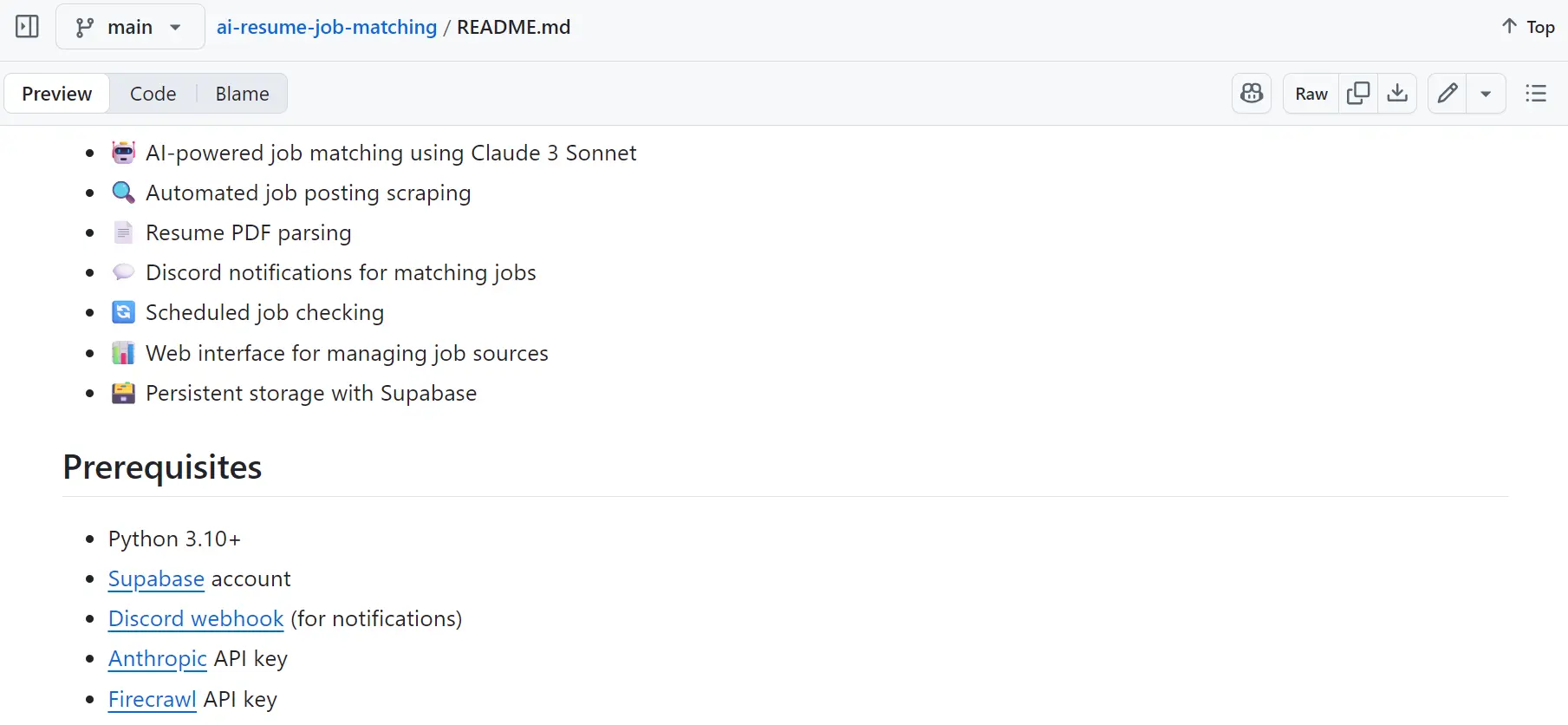

Build an automated job-matching assistant that scrapes company career pages you add, parses your PDF resume, evaluates each job posting with an AI matcher, and sends Discord alerts for strong matches. This assistant will also provide a simple web app to manage sources and view results on a recurring schedule in the cloud.

Through this project, you'll gain skills in AI-powered web scraping (using Firecrawl), structured data modeling (with Pydantic), LLM-based evaluation and prompt design (using Claude 3.5 Sonnet), database persistence and tracking (with Supabase), webhook notifications (through Discord), UI building (using Streamlit), and continuous integration scheduling/operations (using GitHub Actions).

What You'll Build:

- Intelligent web discovery system using AI-powered scraping across diverse job boards

- Advanced AI matching engine using Claude 3.5 Sonnet for job-candidate compatibility analysis

- Multi-component architecture with database management, API integration, and user interface systems

- Automated scheduling and notification systems with Discord webhooks and real-time alerts

- Production web interface with Streamlit providing job source management and analytics

- CI/CD automation with GitHub Actions for continuous monitoring and updates

Skills You'll Practice:

- Full-stack application architecture with microservices and component separation

- Advanced AI integration with prompt engineering and structured output processing

- Production database design and management with real-time data synchronization

- Automated workflow orchestration with scheduling and event-driven processing

- API integration patterns and multi-service coordination with error handling

- DevOps practices including automated deployment, monitoring, and maintenance

Real-World Applications:

- Career services and job placement platforms

- Recruitment technology and talent acquisition systems

- HR automation and candidate screening solutions

- Professional networking and career development tools

Tools Used: firecrawl-py, anthropic, supabase, streamlit, discord-webhook, GitHub Actions, databases, Python AI APIs

Link to Guide: Building an AI Resume Job Matching App With Firecrawl And Claude

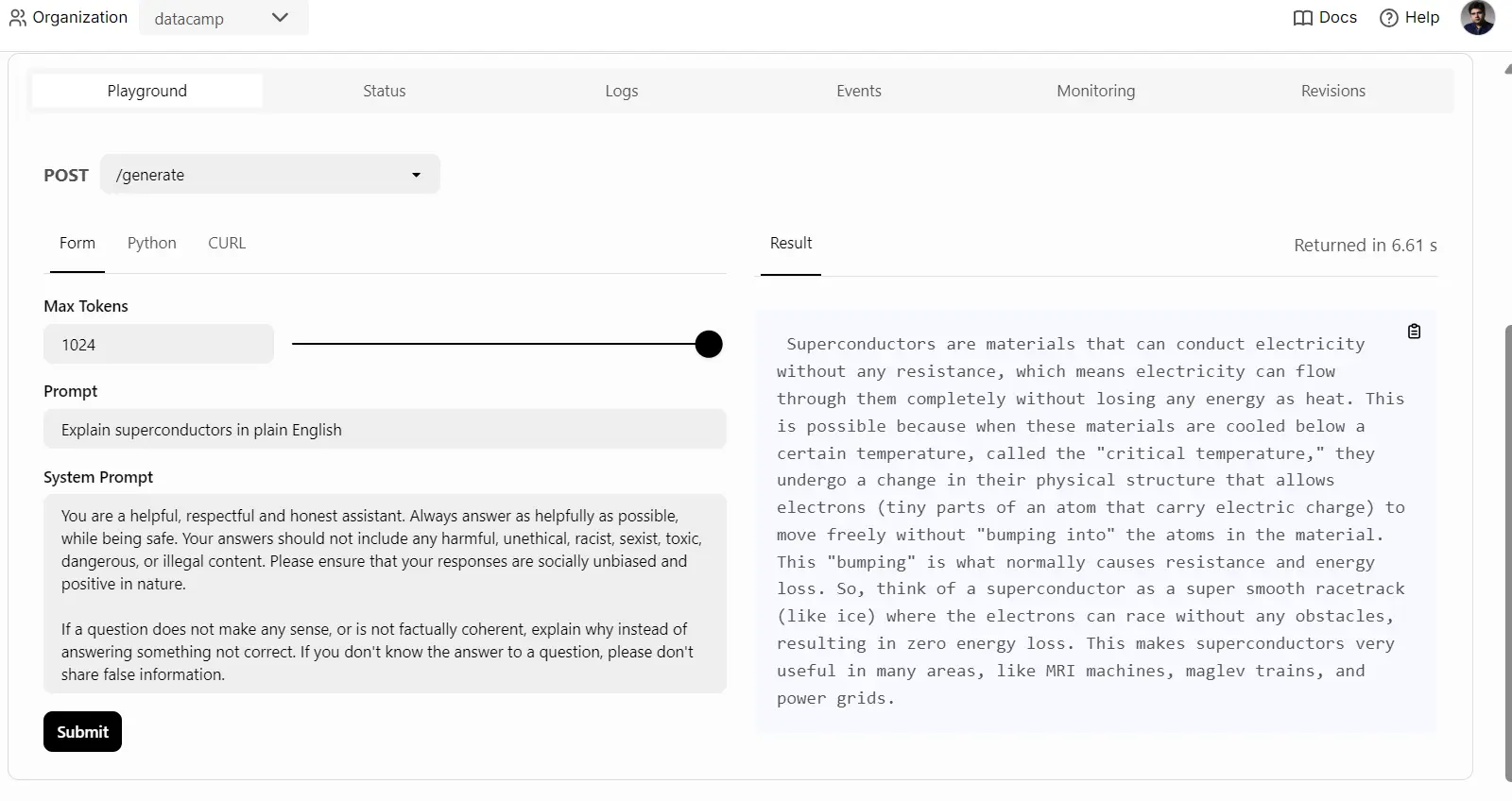

This project involves building an AI app and turning it into a production API, first running locally for quick tests and then deploying to the cloud so it's reachable from anywhere with a simple curl request. By working on this project, you'll develop skills in model serving, clean API design, environment and dependency management, secure configuration and secrets, cloud deployment and scaling, and basic monitoring and logging.

What You'll Build:

- Production-ready LLM serving architecture with BentoML framework

- Scalable API design with request handling, authentication, and rate limiting

- Cloud deployment infrastructure with auto-scaling and load balancing capabilities

- Comprehensive monitoring and logging systems with performance analytics

- Security implementation with API key management and access controls

- Environment management and dependency handling for production reliability

Skills You'll Practice:

- MLOps and model serving architecture for large-scale AI applications

- Production API development with security, monitoring, and performance optimization

- Cloud infrastructure management and automated scaling for AI workloads

- Advanced deployment patterns and containerization for ML applications

- System monitoring and observability for production AI services

- Security best practices and operational excellence for ML systems

Real-World Applications:

- AI-powered SaaS platforms and API services

- Enterprise AI assistants and chatbot systems

- Content generation and automation platforms

- Custom AI model deployment and serving solutions

Tools Used: bentoml, containerization platforms, cloud infrastructure, monitoring tools, API frameworks, ML deployment systems

Link to Guide: How to Deploy LLMs with BentoML

How Do You Start Building Python Projects?

Start simple: Choose a small idea that you can complete from start to finish in one day. Ship a basic version, and then gradually expand it into something more complex. This approach keeps you motivated, reduces feelings of overwhelm, and helps you learn the entire workflow from input to output before adding additional features.

Once you have decided on your project idea, focus on the following steps:

- Set up your environment: Install Python 3.11+ and create a virtual environment to isolate dependencies.

- Pick your tools: I recommend you use VS Code (with the Python extension), Git/GitHub for version control, and optionally Jupyter for notebooks or uv for dependency management.

- Start with a Minimum Viable Product (MVP): Define the smallest end-to-end feature you can create, such as fetching data, processing it, and producing output. Allocate a few hours for this task, rather than weeks, then iterate to improve.

- Handle secrets safely: Store API keys in environment variables or a .env file (for example, using python-dotenv), and never hard-code them in your source code or repositories.

- Choose simple storage: Start with JSON, CSV, or SQLite for small projects; only consider using PostgreSQL or NoSQL databases if the need arises.

- Add quality early: Write a few pytest tests, format with black, and lint with ruff or flake8 to keep your code clean.

- Log and debug: Use the logging module for structured logs and clear error messages; avoid silent failures.

- Document and demo: Create a clear README with setup instructions, a short demo (such as a GIF or screenshot), and example configurations or datasets to make your project easy to run and share.

- Deploy simply: Start with Streamlit or FastAPI along with Uvicorn, and host your application on platforms like Render, Railway, Fly.io, or Hugging Face Spaces for quick and low-friction deployments.

- Share your project: Add it to your portfolio and GitHub, then share on LinkedIn or other socials/communities. Ask for feedback, track suggestions via GitHub Issues, and iterate to improve.
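Two of the steps above, handling secrets safely and logging instead of failing silently, can be sketched together with the standard library. The variable name `MY_SERVICE_API_KEY`, the logger name, and the helper `get_api_key` are placeholders for illustration; if you keep keys in a .env file, a package such as python-dotenv can load them into the environment before this code runs.

```python
import logging
import os

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logger = logging.getLogger("my_project")


def get_api_key(name="MY_SERVICE_API_KEY"):
    """Read an API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(name)
    if not key:
        logger.error("environment variable %s is not set", name)
        raise RuntimeError(f"Missing required environment variable: {name}")
    # Log that the key was found, but never log the key itself.
    logger.info("loaded %s (%d characters)", name, len(key))
    return key
```

Failing at startup with a clear message is usually easier to debug than a confusing authentication error deep inside an API call.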

Python for Everything and Everyone

I have been using Python for almost everything: web development, APIs, machine learning, data science, and even simple automation. I never feel the need to use another language, because I can accomplish everything I need to with this versatile language.

Python offers fast development, ease of use, a wealth of pre-built packages, and, best of all, you can build, test, and deploy applications to any cloud platform with ease. Large language models have also seen a great deal of Python code during training, often more than Rust or C++, so they tend to generate good code for your Python projects too.

In this article, we have reviewed 15 Python projects suitable for different skill levels, from beginners to production-ready applications. You can start with these projects and keep building them, as they all come with step-by-step guides for you to follow. All you need to do is dedicate a couple of hours each day to build, and you'll have your own projects to share in no time.

If you want to go deeper on web scraping projects, explore list crawling with Python in our guide: List Crawling: Extract Structured Data From Websites at Scale.
