Hitting Claude Code limits and burning cash? Meet the $3 GLM Coding Plan. Designed as a powerful yet affordable solution, the GLM Coding Plan pairs Claude Code's intuitive workflow with the open-source strength of GLM-4.6, giving you near-Claude-level coding performance at a fraction of the price.

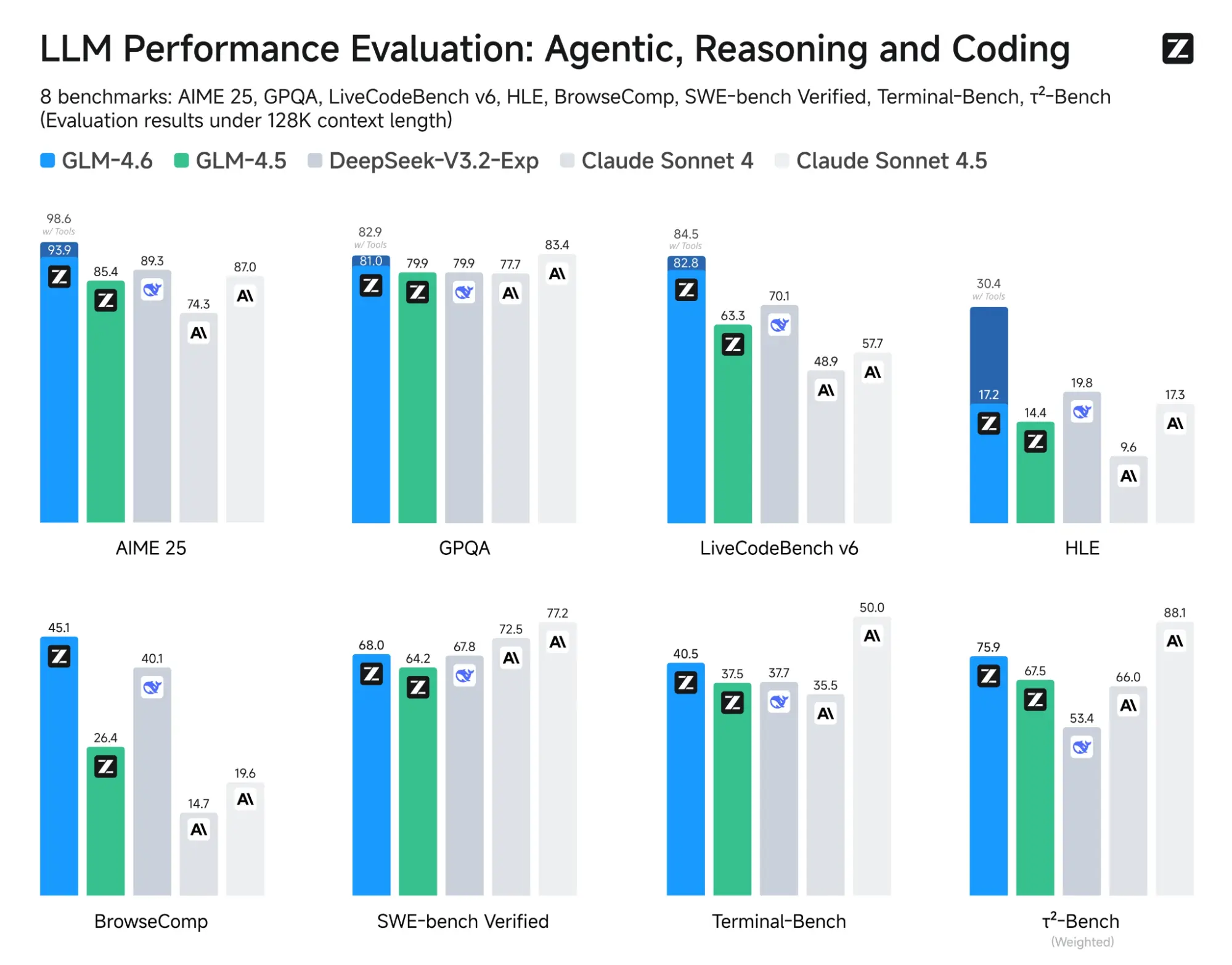

GLM-4.6 pushes real capability upgrades over GLM-4.5, from a longer 200K-token context window to stronger reasoning, faster tool use, and significantly better coding performance. Across eight public benchmarks in reasoning, agents, and coding, it consistently outperforms GLM-4.5 and competes closely with top-tier models like DeepSeek-V3.2-Exp and Claude Sonnet 4, though it still trails Sonnet 4.5 in raw code generation strength.

In this tutorial, we will walk through how to subscribe to the $3 GLM Coding Plan, connect it with Claude Code, and vibe-code a smart E-Commerce Intelligence App, one that scrapes product reviews using Firecrawl, analyzes them with GPT-5-mini, caches results in SQLite, and visualizes insights through an interactive Streamlit dashboard. By the end, you'll see exactly where GLM-4.6 excels, where it struggles, and how to optimize it for your own coding flow.

What Is the GLM Coding Plan?

The GLM Coding Plan is Z.ai's subscription service that provides access to the latest GLM-4.6 model, which has been optimized for advanced agent-like behavior, reasoning, and coding. This plan integrates directly into your existing developer tools, starting at $3 per month.

It works with popular coding tools like Claude Code, Cline, and OpenCode (among others), allowing you to enhance your coding assistant without needing to change your editor or command-line interface (CLI) setup.

Developers are drawn to this plan because it offers predictable, low-cost pricing along with strong performance and wide tool compatibility. The entry-level Lite tier typically provides around 120 prompts per 5-hour cycle for about $3, while the Pro tier increases that to approximately 600 prompts per 5-hour cycle. This structure is designed to accommodate frequent coding sessions without the complexity of per-token charges.
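To get a feel for what those quotas mean in practice, here is a small back-of-the-envelope sketch. It assumes the approximate figures quoted above (120 and 600 prompts per 5-hour cycle) and that the quota is spread evenly across the cycle; the helper name `seconds_per_prompt` is made up for illustration, and actual limits may differ.

```python
def seconds_per_prompt(prompts_per_cycle: int, cycle_hours: float = 5.0) -> float:
    """Average spacing between prompts that would use up exactly one cycle's quota."""
    return cycle_hours * 3600 / prompts_per_cycle


# Approximate tier quotas taken from the paragraph above (assumed, not official).
TIERS = {"Lite": 120, "Pro": 600}

if __name__ == "__main__":
    for name, quota in TIERS.items():
        pace = seconds_per_prompt(quota)
        print(f"{name}: about one prompt every {pace:.0f} seconds")
```

In other words, the Lite tier sustains roughly one prompt every two and a half minutes over a full cycle, while Pro sustains about one every 30 seconds.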

Setting Up Claude Code with the GLM Coding Plan

Claude Code is an agentic coding tool that lives in your terminal, understands your codebase, and helps you code faster by executing routine tasks, explaining complex code, and handling git workflows, all through natural language commands. It acts like a hands-on engineering partner that can run commands, edit files, and reason about your repo structure from the CLI.

Why use Claude Code with GLM Coding Plan? We chose this setup because the official Claude Code plans are expensive and have strict usage limits. Many developers report hitting these limits within an hour, which disrupts productivity. To solve this, we'll be using a more cost-effective and flexible alternative. With this setup, you only need to change the model and the base URL to switch to a fully functional, Anthropic-compatible backend without sacrificing performance.

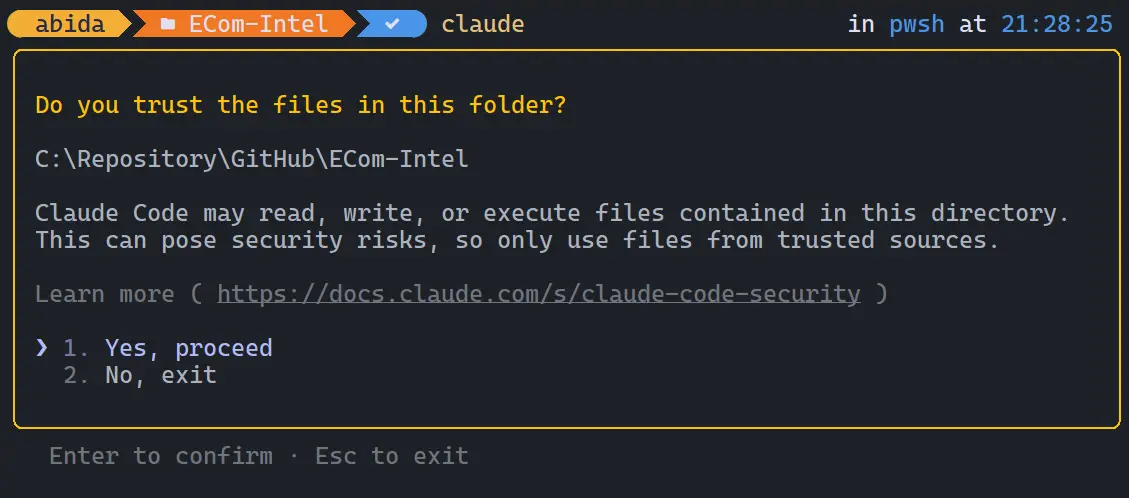

1) Install Claude Code

If you are new to Claude Code, start by launching your terminal. Ensure that Node.js is installed on your system. Then, install the official CLI globally by running:

npm install -g @anthropic-ai/claude-code

Next, navigate to your project directory using:

cd ECom-Intel

Finally, initialize Claude Code by typing:

claude

2) Sign up for the GLM Coding Plan (GLM-4.6)

Visit the Z.AI Open Platform and either register or log in to your account. Subscribe to the Lite GLM Coding Plan, which costs $3. After connecting your credit card and completing the subscription, go to the API keys management page. Create a new API key and copy it, as you'll need it in the next configuration step.

3) Configure environment variables

Set up your environment variables so that Claude Code uses Z.AI as its Anthropic-compatible backend. On macOS or Linux (bash/zsh), run:

export ANTHROPIC_AUTH_TOKEN='your_zai_api_key'

export ANTHROPIC_BASE_URL='https://api.z.ai/api/anthropic'

4) Add Firecrawl (MCP tool) for web data

Next, integrate the Firecrawl remote MCP server, which lets Claude Code fetch the latest tech stack details, code snippets, and documentation for your project. Firecrawl is a Web Data API for AI that connects to Claude Code through MCP. Start by creating a free Firecrawl account and generating an API key. Then, add Firecrawl as an MCP tool, replacing {FIRECRAWL_API_KEY} with your own key:

claude mcp add --transport http firecrawl https://mcp.firecrawl.dev/{FIRECRAWL_API_KEY}/v2/mcp

This enables Claude Code to access live web data for more accurate and context-aware coding assistance.

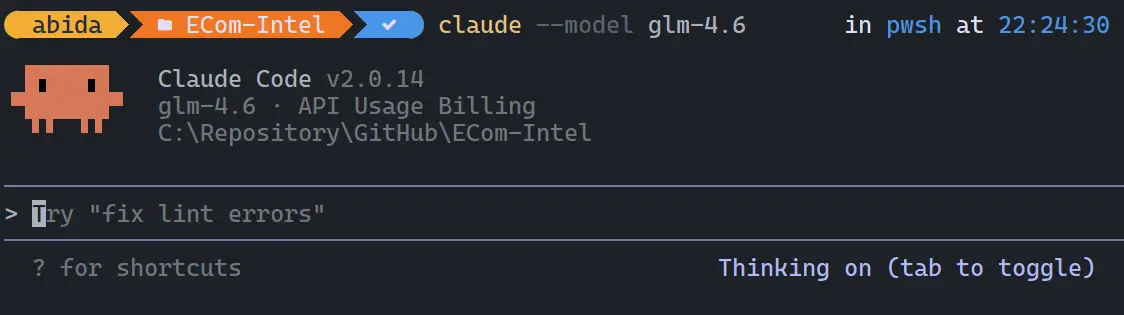

5) Use Claude Code with GLM-4.6 in your project

Return to your project directory and launch Claude Code using the GLM-4.6 model:

cd ECom-Intel

claude --model glm-4.6

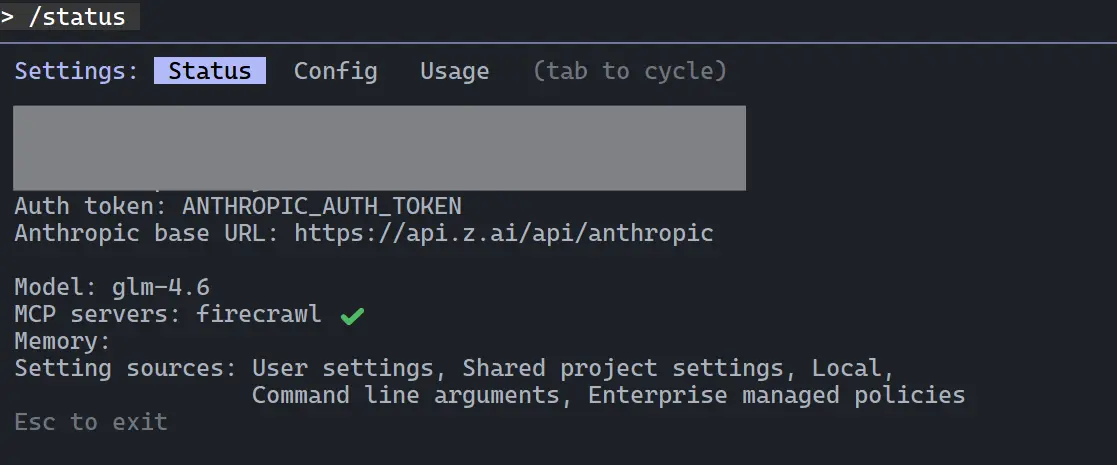

You can verify that the setup is correct by using the /status command. It should display the Z.AI endpoint as the base URL and indicate that the active model is glm-4.6.

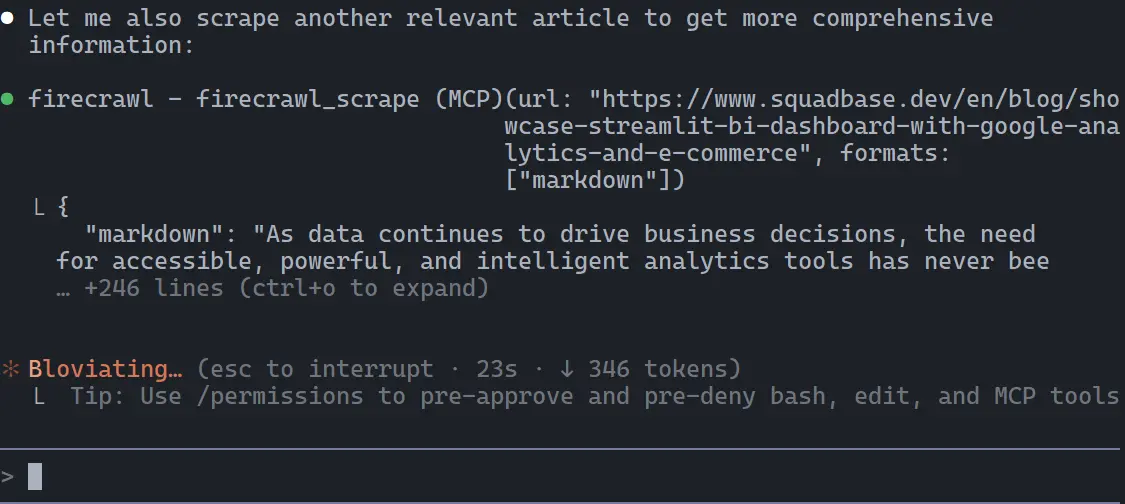
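If /status shows the wrong endpoint, the usual culprit is an environment variable that was never exported in the current shell. The sketch below is one way to sanity-check the two variables from step 3 before launching Claude Code. The helper `check_claude_env` is hypothetical and not part of Claude Code; it only inspects the values set earlier in this tutorial.

```python
import os

# Base URL from step 3 of this tutorial.
EXPECTED_BASE_URL = "https://api.z.ai/api/anthropic"


def check_claude_env(env):
    """Return a list of problems; an empty list means both settings look right."""
    problems = []
    token = env.get("ANTHROPIC_AUTH_TOKEN", "")
    if not token or token == "your_zai_api_key":
        problems.append("ANTHROPIC_AUTH_TOKEN is missing or still the placeholder")
    base_url = env.get("ANTHROPIC_BASE_URL", "").rstrip("/")
    if base_url != EXPECTED_BASE_URL:
        shown = base_url or "unset"
        problems.append(f"ANTHROPIC_BASE_URL is {shown!r}, expected {EXPECTED_BASE_URL!r}")
    return problems


if __name__ == "__main__":
    issues = check_claude_env(os.environ)
    print("Environment looks good" if not issues else "\n".join(issues))
```

Run it in the same terminal session where you exported the variables, since exports do not carry over to new shells unless you add them to your shell profile.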

To confirm the Firecrawl MCP connection, you can also ask Claude to test connectivity.

For the best experience, use Alacritty, a cross-platform OpenGL-based terminal emulator. It provides exceptional performance, smooth rendering, and better support for tools like Claude Code, enhancing your overall coding workflow.

Vibe-Coding the E-Commerce Intelligence Application

With everything now configured, it's time to vibe code our E-Commerce Intelligence Application: a compact, end-to-end project that brings together data scraping, sentiment analysis, caching, and visualization into one smooth workflow.

This application uses Firecrawl to automatically search for and scrape customer reviews from relevant pages. The collected reviews are then analyzed with an OpenAI model, which classifies overall sentiment, identifies recurring themes, and extracts useful insights such as customer satisfaction levels or feature-related feedback.

To make the process efficient, all scraped and analyzed data is stored locally in an SQLite database, ensuring that results are cached for quick access. Finally, the insights are displayed through an elegant Streamlit dashboard, giving users an interactive and visual overview of sentiment trends and summarized findings.
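To make the analysis step more concrete, here is a minimal sketch of how a model's reply could be validated before it is cached or displayed. It assumes the model is asked to answer in JSON with `sentiment`, `themes`, and `insights` fields. That schema, the `ReviewAnalysis` class, and `parse_analysis` are illustrative assumptions, not the structure the generated app actually uses.

```python
import json
from dataclasses import dataclass, field

# Assumed label set for this sketch; the real app may use different labels.
ALLOWED_SENTIMENTS = {"positive", "negative", "mixed"}


@dataclass
class ReviewAnalysis:
    sentiment: str
    themes: list = field(default_factory=list)
    insights: list = field(default_factory=list)


def parse_analysis(raw_json: str) -> ReviewAnalysis:
    """Validate a JSON reply and convert it into a ReviewAnalysis."""
    data = json.loads(raw_json)
    sentiment = str(data.get("sentiment", "")).lower()
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {sentiment!r}")
    return ReviewAnalysis(
        sentiment=sentiment,
        themes=list(data.get("themes", [])),
        insights=list(data.get("insights", [])),
    )


if __name__ == "__main__":
    # Made-up reply, used only to exercise the parser.
    sample = '{"sentiment": "Mixed", "themes": ["battery"], "insights": ["charges slowly"]}'
    print(parse_analysis(sample))
```

Validating the reply up front means a malformed response fails loudly at parse time instead of silently producing an empty dashboard.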

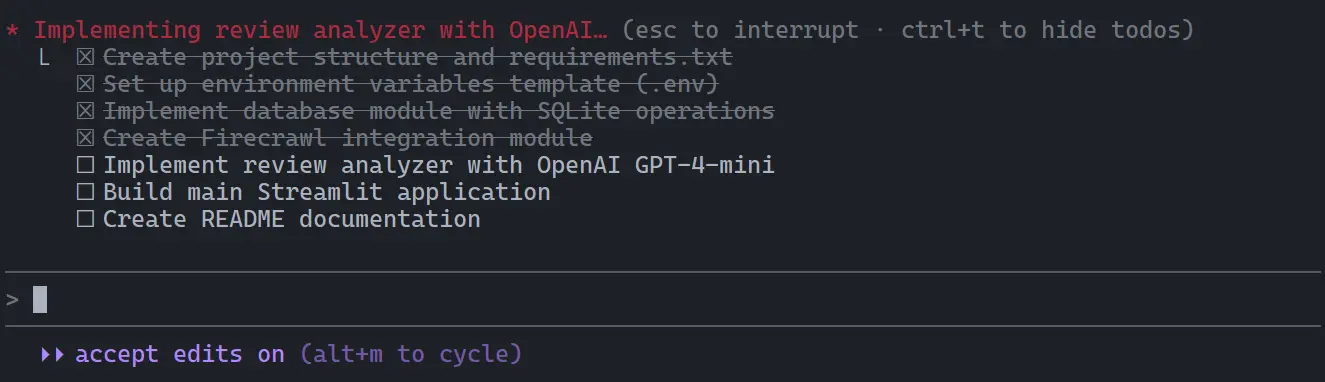

1. Plan mode

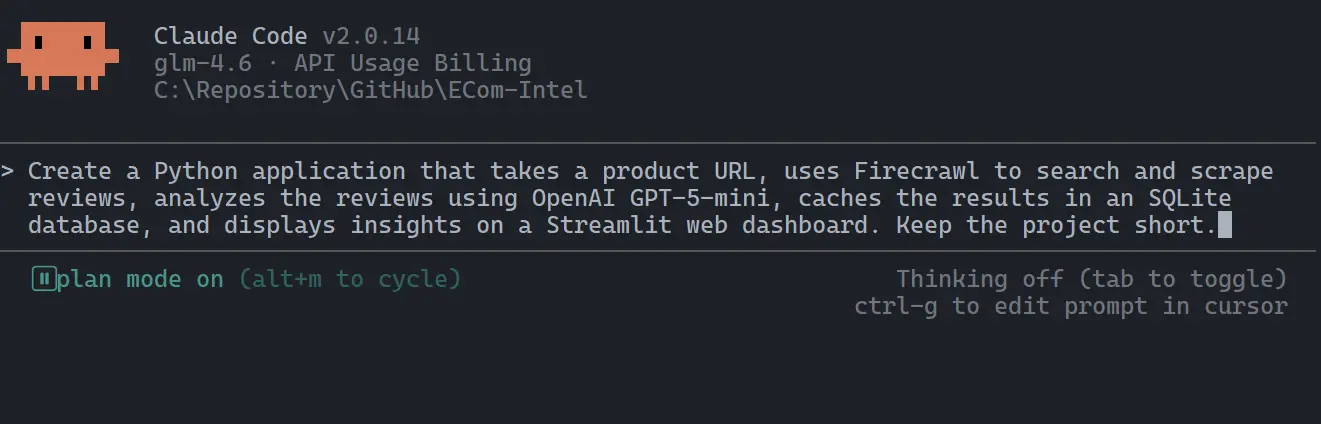

Go to your project folder and launch the terminal. Once inside, run the following command to start Claude Code with the GLM-4.6 model:

claude --model glm-4.6

Press Alt + M twice to activate Plan Mode. This mode allows GLM-4.6 to create a detailed project plan before generating or editing any files.

Here's the initial prompt:

Create a Python application that takes a product URL, uses Firecrawl to search and scrape reviews, analyzes the reviews using OpenAI GPT-5-mini, caches the results in an SQLite database, and displays insights on a Streamlit web dashboard. Keep the project short.

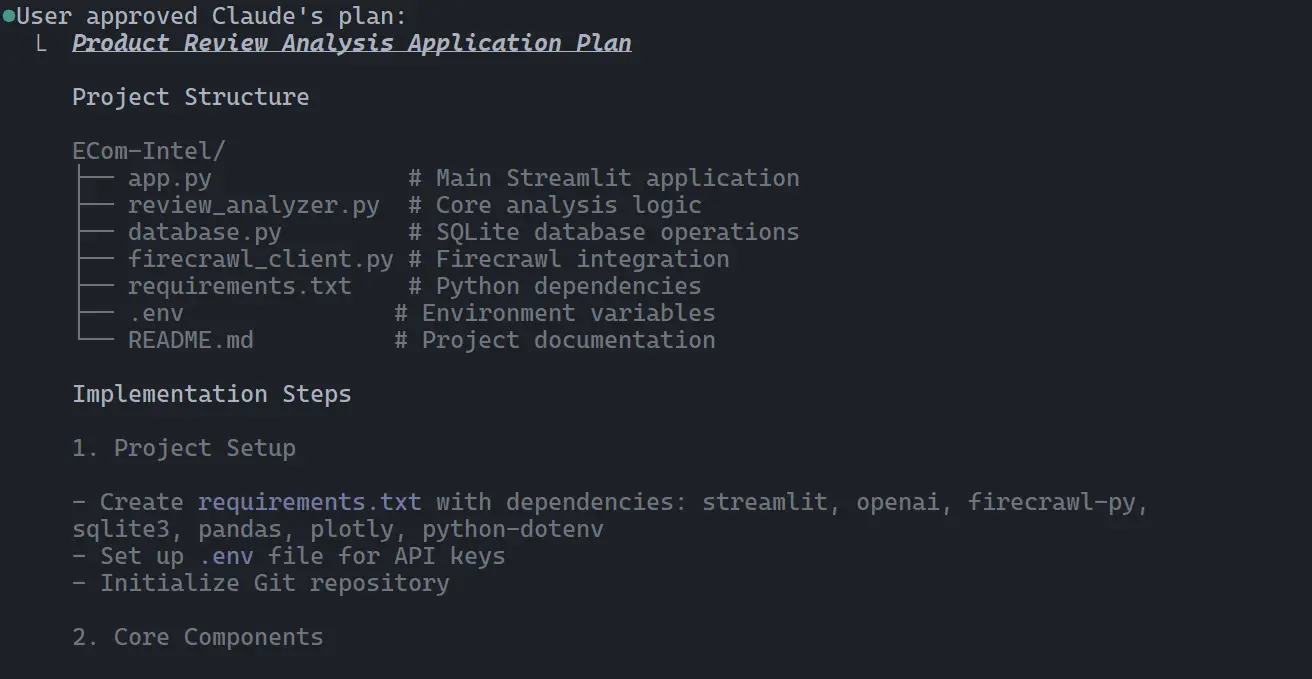

Once the plan is generated, accept it. Within seconds, Claude Code will begin building the project structure and core components based on the plan.

2. Edit mode

In Edit Mode, Claude Code automatically breaks down the project into smaller, manageable tasks and executes them one by one.

Within about five minutes, it creates all necessary files and provides installation steps to set up and launch the application.

While we can ask Claude Code to install dependencies automatically, some configurations, such as adding your OpenAI and Firecrawl API keys, must be done manually to complete the setup.

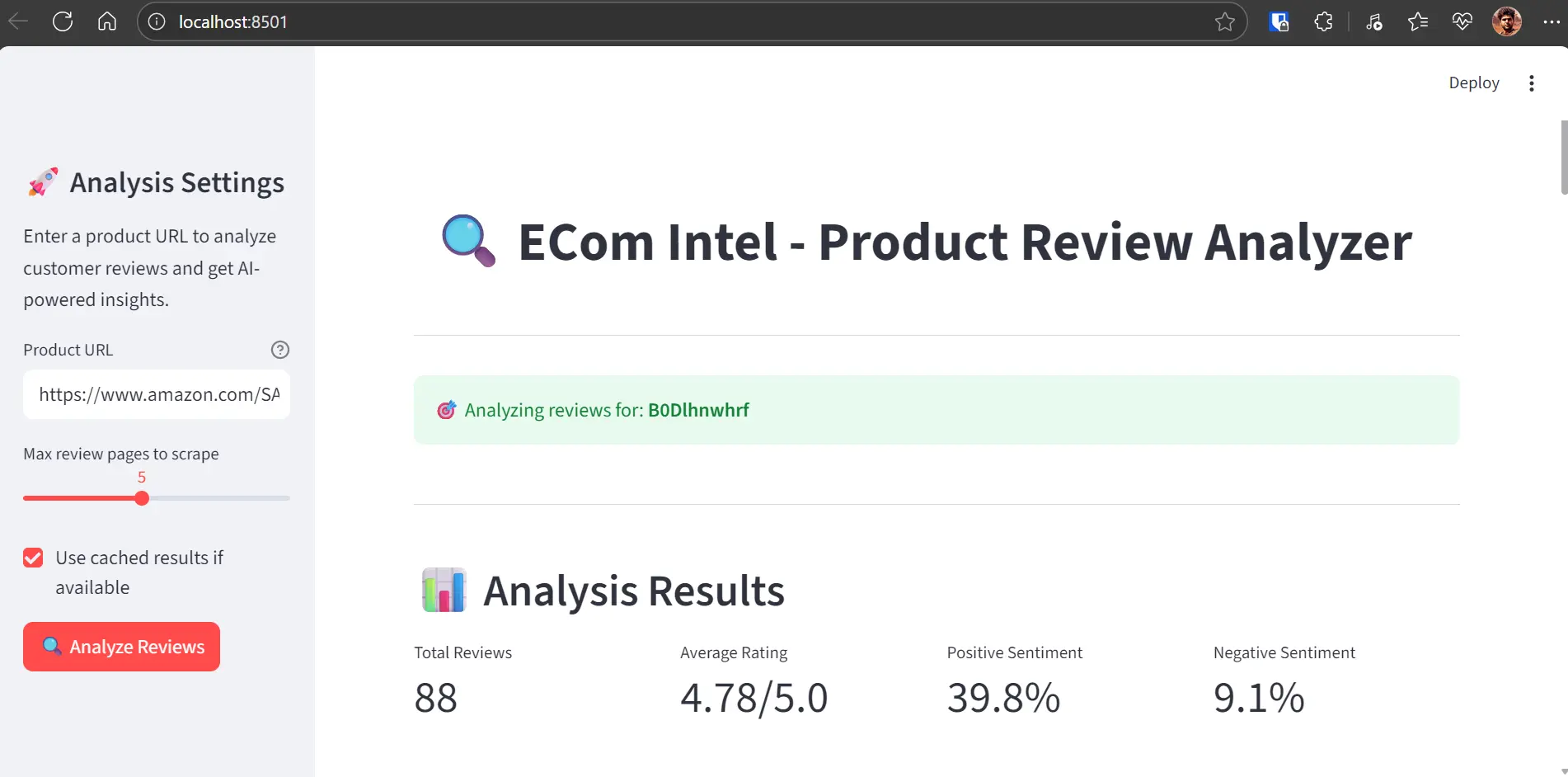

Testing Our E-Commerce Intelligence Application

Now that our project is complete, it is time to test the E-Commerce Intelligence App and see it in action.

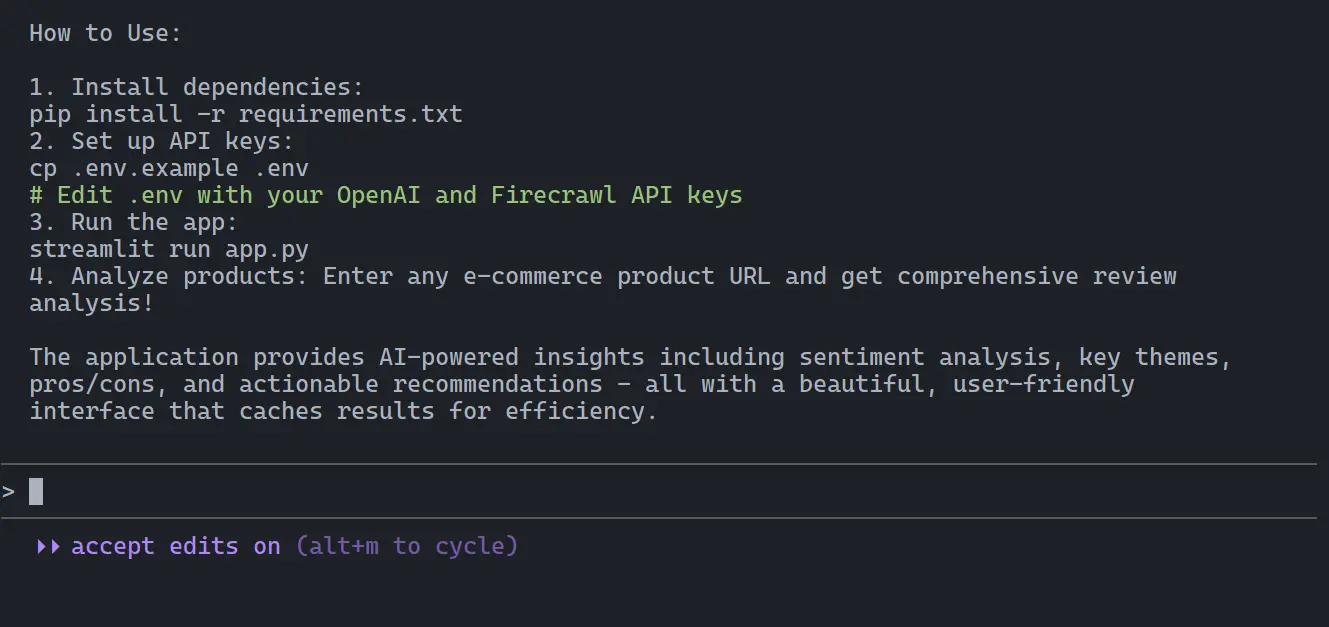

1. Install dependencies

Run the following command to install all required packages:

pip install -r requirements.txt

2. Set up API keys

Make sure you have generated both your OpenAI and Firecrawl API keys. Ensure you have at least $5 in your OpenAI account balance for API calls to work properly. Some models on OpenAI may also require organization verification, so complete that step before running the app.

Next, copy the environment template:

cp .env.example .env

Then, open the .env file and add your OpenAI and Firecrawl API keys.
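A quick way to catch an empty or missing key before launching the app is to parse the .env file yourself. The sketch below uses only the standard library and assumes the keys are named `OPENAI_API_KEY` and `FIRECRAWL_API_KEY`. Those names are an assumption, so check your own .env.example for the exact spelling.

```python
import os

# Assumed variable names; confirm them against your .env.example.
REQUIRED_KEYS = ("OPENAI_API_KEY", "FIRECRAWL_API_KEY")


def parse_env_text(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(values, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    if os.path.exists(".env"):
        with open(".env", encoding="utf-8") as fh:
            missing = missing_keys(parse_env_text(fh.read()))
        print("Missing keys:", ", ".join(missing) if missing else "none")
    else:
        print("No .env file found in the current directory")
```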

3. Run the app

Launch the Streamlit application by running:

streamlit run app.py

Output:

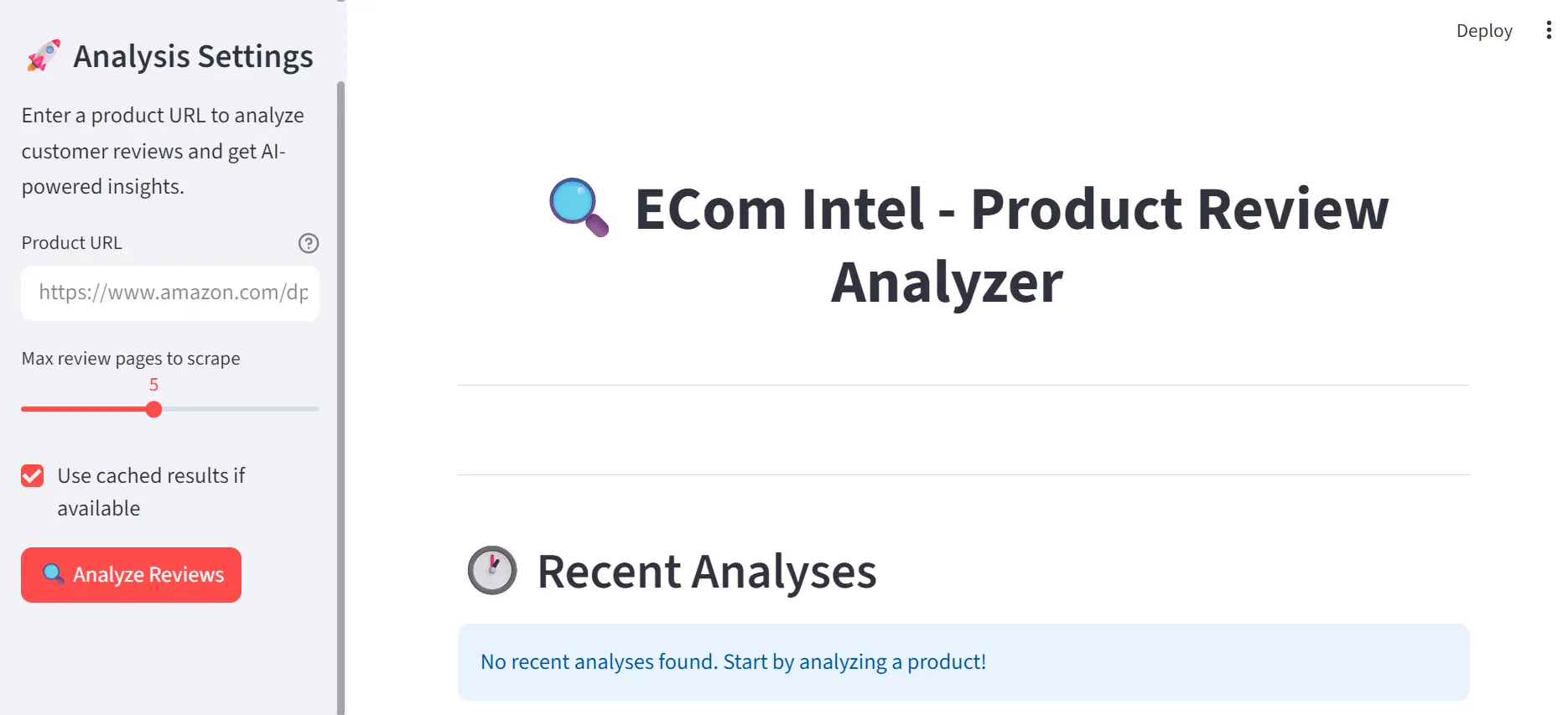

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501

Network URL: http://192.168.100.94:8501

The app should load without issues. It's impressive how well it works right out of the box.

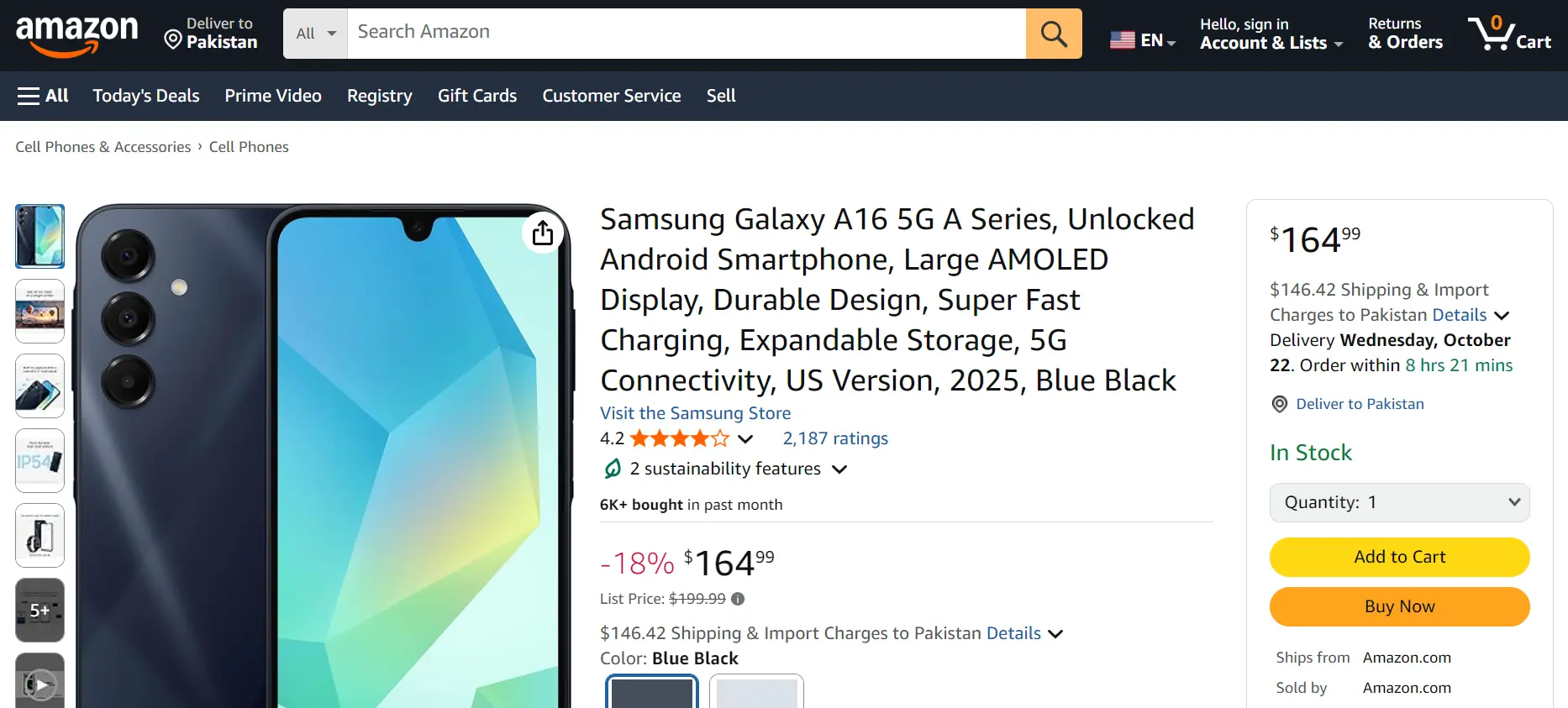

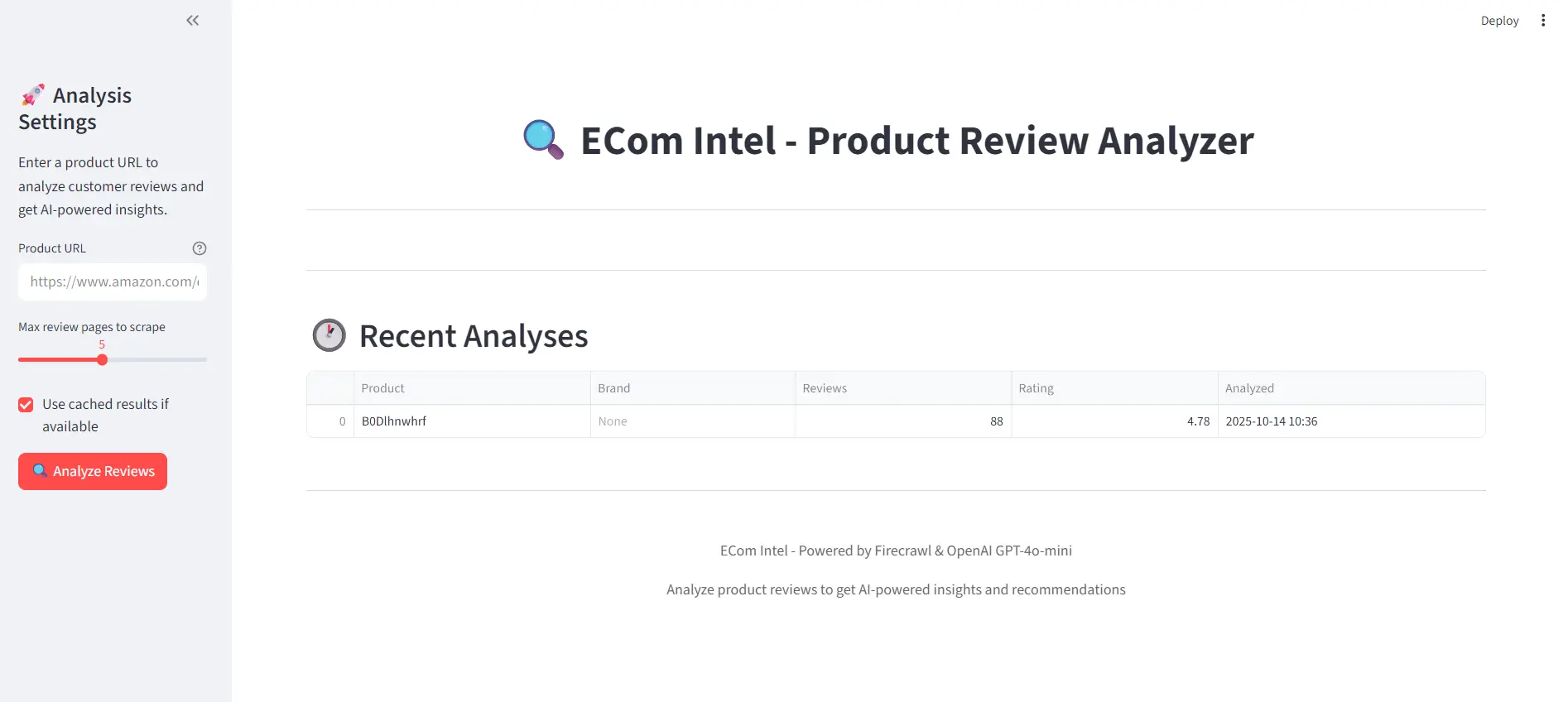

4. Testing with the URL

To test it, search for a random product on Amazon and copy its URL, for example: https://www.amazon.com/SAMSUNG-Unlocked-Smartphone-Charging-Expandable/dp/B0DLHNWHRF?th=1

Paste the URL into the app and click the Analyze Reviews button.

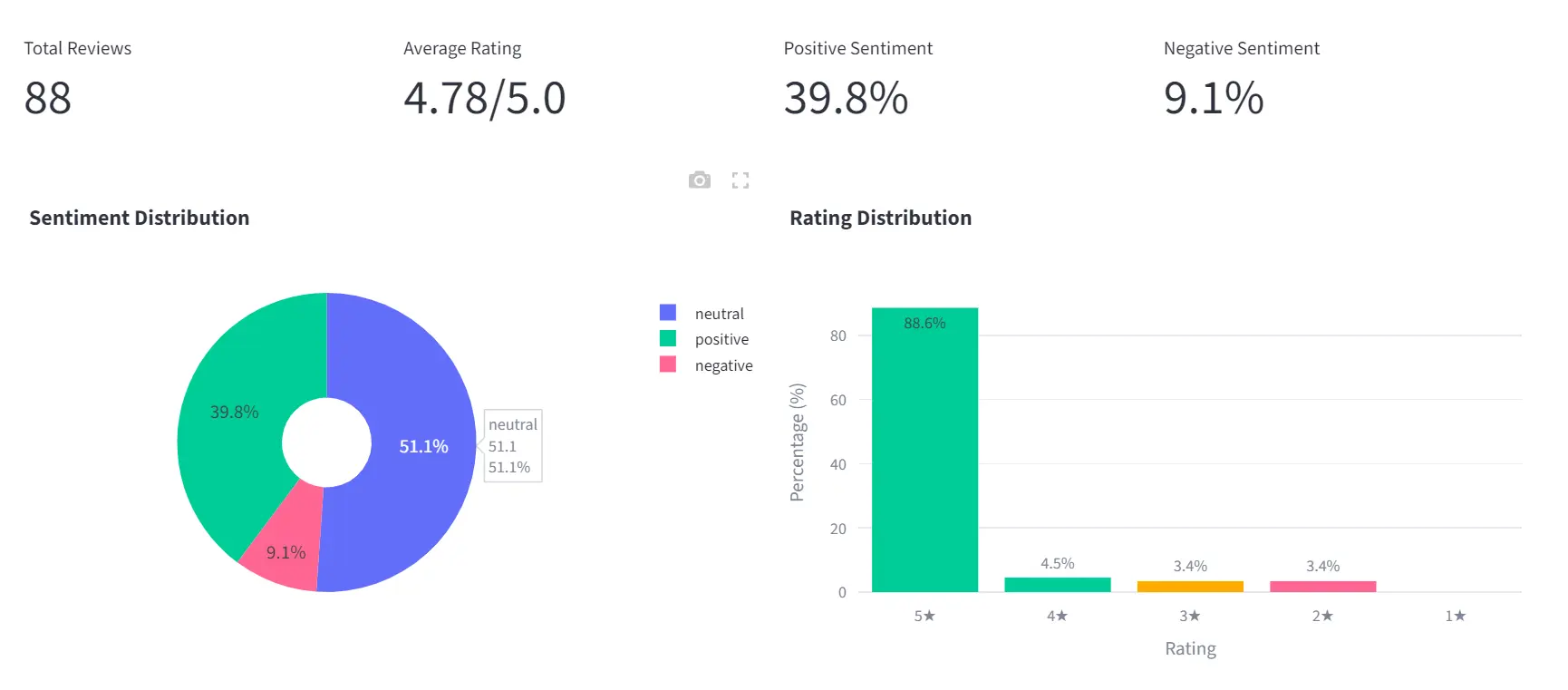

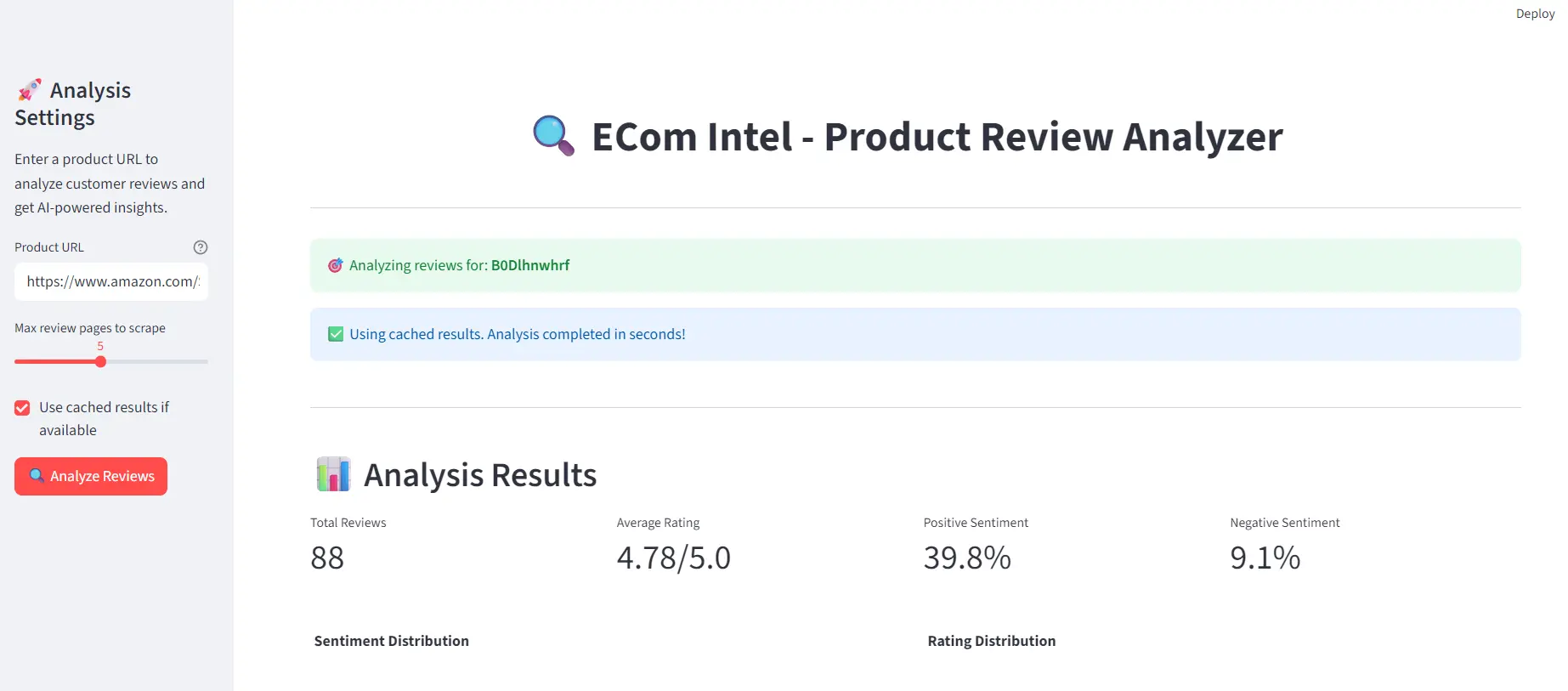

5. Visualizing the Results

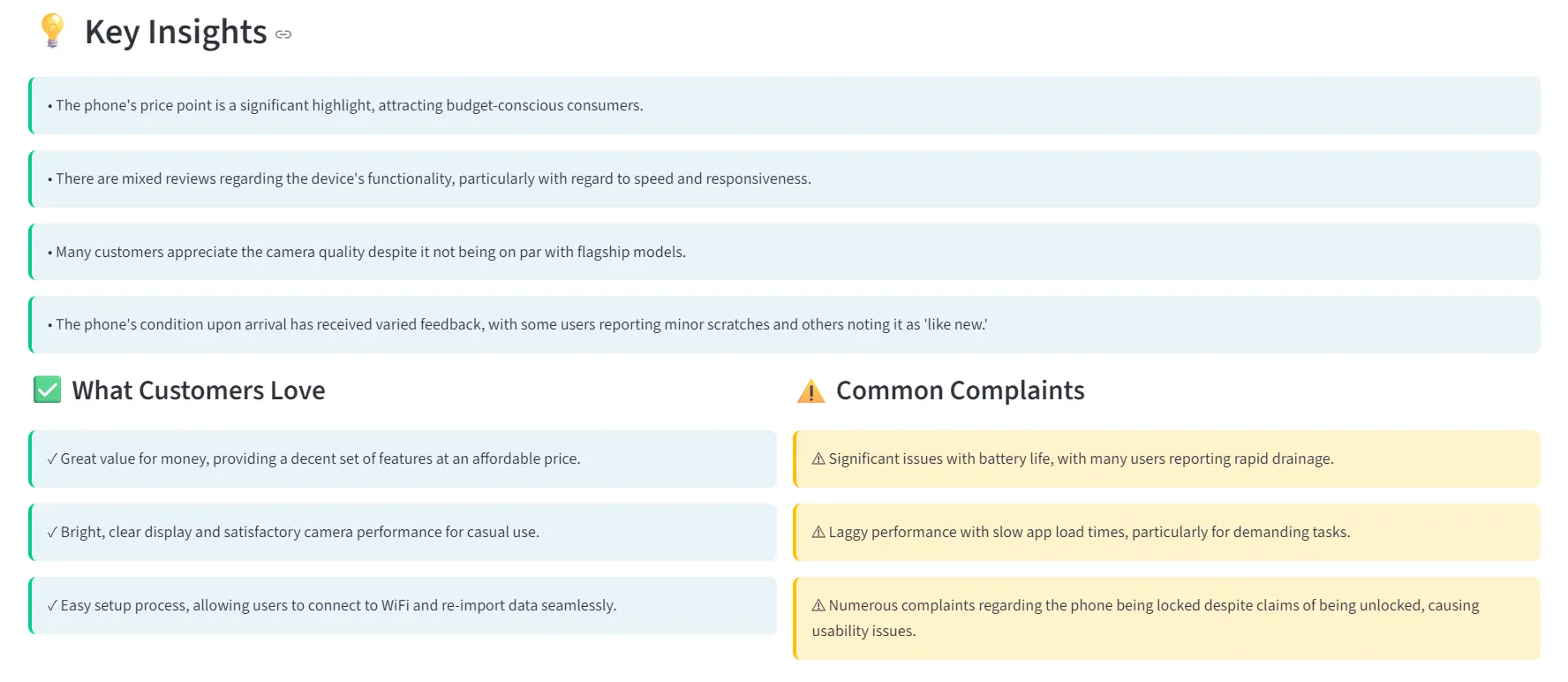

Within seconds, you'll see detailed visualizations showing total reviews, sentiment analysis, rating distributions, and more.

The report includes key highlights, what customers love, and common complaints.

It closes with recommendations on how you can improve the product information.

If you refresh the page, the app will show your past searches.

When you analyze the same URL again, results appear almost instantly because of cached data stored in SQLite.
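The caching behavior described above follows a common pattern: look up the URL first, and only run the expensive scrape-and-analyze step on a miss. Here is a minimal sketch of that pattern using Python's built-in sqlite3 module. The table layout and the names `open_cache` and `get_or_analyze` are assumptions for illustration and may not match the code in the generated app.

```python
import json
import sqlite3


def open_cache(path=":memory:"):
    """Open (or create) the cache database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS analyses ("
        "url TEXT PRIMARY KEY, "
        "result TEXT NOT NULL, "
        "created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def get_or_analyze(conn, url, analyze):
    """Return (result, was_cached). Calls analyze(url) only on a cache miss."""
    row = conn.execute(
        "SELECT result FROM analyses WHERE url = ?", (url,)
    ).fetchone()
    if row is not None:
        return json.loads(row[0]), True
    result = analyze(url)
    conn.execute(
        "INSERT INTO analyses (url, result) VALUES (?, ?)",
        (url, json.dumps(result)),
    )
    conn.commit()
    return result, False
```

Passing a file path such as `open_cache("reviews.db")` instead of the in-memory default keeps results across restarts, which is what makes a repeat analysis return almost instantly.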

All source code, documentation, and demo results are available on GitHub: kingabzpro/ECom-Intel: Product Review Analyzer

Final Thoughts

It took me a bit of time to get the hang of Claude Code, from setting up the MCP to crafting the perfect prompts and understanding its various modes.

The biggest takeaway? Don't overcomplicate your instructions. When you pack too many constraints, specify exact libraries, or overdefine the process, the model tends to produce messy, error-prone code. Instead, describe the goal clearly and give it creative freedom to decide how to build it. Let Claude Code plan, review its approach, and refine only where needed. That's when it truly shines.

The good and the not-so-good

There were a few hiccups. It defaulted to GPT-4o-mini instead of GPT-5-mini and relied on an older API, which made the responses a bit slower. But honestly, the fact that the app worked right out of the box made up for it. All I had to do was install dependencies, set up the environment variables, and run the app. No manual debugging or complex setup was needed.

What I would improve next

If I were to rebuild this project, I would make it faster and more efficient. This would involve switching to the latest OpenAI SDK and the Responses API, optimizing Firecrawl queries for quicker data retrieval, and enhancing caching to ensure results are almost instant. Additionally, I was pleasantly surprised by how well GLM-4.6 managed the user interface. It generated a clean and functional design for both real-time and cached data, something that many AI coding tools struggle to achieve.
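As a rough idea of what the OpenAI upgrade mentioned above could look like, here is a sketch of a review-summary call written against the newer Responses interface of the OpenAI Python SDK. The function name, the instruction text, and the choice to pass the client in as a parameter are all assumptions for illustration, not code from the generated app.

```python
def summarize_reviews(client, reviews, model="gpt-5-mini"):
    """Send the reviews through client.responses.create and return the text reply."""
    response = client.responses.create(
        model=model,
        instructions=(
            "Summarize the overall sentiment and the main complaints "
            "in these product reviews."
        ),
        input="\n\n".join(reviews),
    )
    return response.output_text


# Example usage (requires `pip install openai` and OPENAI_API_KEY to be set):
#
#     from openai import OpenAI
#     client = OpenAI()
#     print(summarize_reviews(client, ["Great battery life.", "Screen scratches easily."]))
```

Taking the client as an argument keeps the function easy to test with a stub and makes it simple to swap models later without touching the call site.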

My recommendation

If you are new to Claude Code or the GLM Coding Plan, keep it simple. Start in Plan Mode and let it generate the project plan. If you like the plan, accept it and let GLM-4.6 build the whole project; if you want changes, edit the plan first and then let it work its magic. Once the base app is ready, test it, spot the gaps, and refine it manually, especially if you are comfortable with Python. Tweak, optimize, and personalize. That's the fastest path from a solid AI-generated MVP to something genuinely exceptional.
