Why use Firecrawl with n8n?

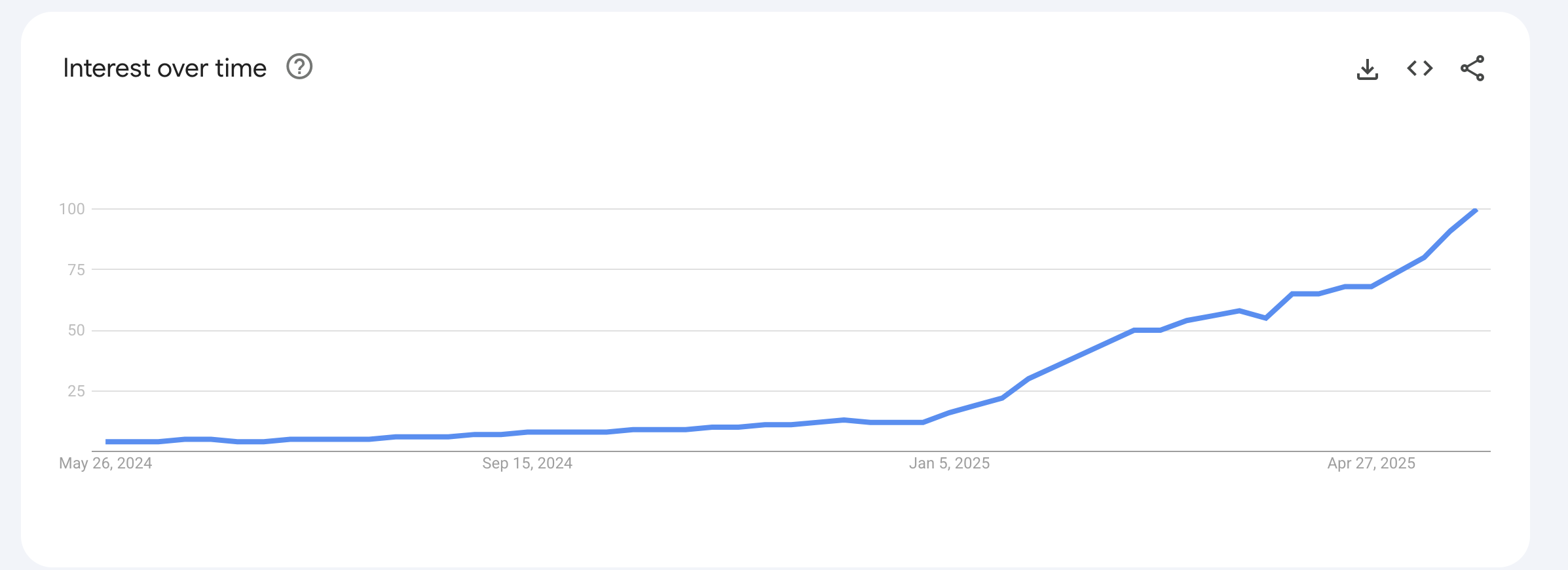

n8n, a source-available ("fair-code") workflow automation platform, has seen a meteoric rise.

n8n has become the automation platform of choice for many technical teams since its 2019 launch. The platform now offers over 400 integrations, raised $60 million in March 2025, and saves companies hundreds of hours of manual work each month. While n8n excels at connecting APIs and services, many business workflows need one notoriously difficult thing: fresh, reliable web data. Traditional web scraping breaks when sites update, gets blocked by anti-bot systems, or fails to handle JavaScript-heavy pages.

Firecrawl handles the web scraping side of this integration. The API service converts entire websites into LLM-ready markdown, managing JavaScript rendering and anti-bot systems that can complicate traditional scraping approaches. You get structured data in a format that works directly with n8n nodes. This combination lets you pull web content into workflows without requiring custom scraping infrastructure.

Ready for more? Check out our collection of n8n web scraping workflow templates for production-ready examples. For visual AI development, explore our LangFlow tutorial.

This tutorial will show you how to combine these tools through two approaches: HTTP requests and the community node. You'll build a working chatbot that can access live web data, compare both integration methods, and understand which approach fits your automation needs. By the end, you'll have a practical example that you can adapt for competitor monitoring or content aggregation.

This guide assumes you have a basic understanding of Firecrawl's capabilities and API structure. If you're new to Firecrawl, you may want to spend a few minutes with the official documentation to familiarize yourself with the service.

Getting started with n8n

n8n offers one of the most comprehensive documentation experiences in the automation space. You can get up to speed quickly by spending about 30 minutes on its quickstart guide and beginner course. That foundation covers most of the workflows you'll build.

Let's explore the basics through a real example: a workflow that connects WhatsApp and Slack for bidirectional messaging. You can explore this workflow interactively to see how the pieces fit together.

The first part of the n8n workflow to send Slack messages to WhatsApp

n8n workflows consist of nodes connected by edges that pass data between operations. This first section converts Slack messages into WhatsApp messages and sends them to your specified contact.

The workflow begins with a Slack trigger node that listens for events like new messages in channels, support tickets, or mentions.

n8n provides various trigger types to start workflows: manual triggers, scheduled timers, cron jobs, and application-specific triggers like this Slack example.

After the trigger fires, two nodes retrieve the channel ID where the message was posted. A conditional node then determines whether the message contains only text or includes media files.

For media messages, the workflow downloads the content before sending it to WhatsApp. Text-only messages go directly to the WhatsApp node.
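The branching in this first section can be sketched in plain Python to make the data flow concrete. This is a minimal illustration, not n8n's internals: it assumes n8n's item model (each item wraps its payload under a `json` key) and uses the `files` array that Slack attaches to message events to detect media. `route_slack_items` and the `text`/`media` branch names are hypothetical.

```python
from typing import Any

# An n8n "item" wraps its payload under a "json" key; a node receives a list of items.
Item = dict[str, Any]


def route_slack_items(items: list[Item]) -> dict[str, list[Item]]:
    """Split Slack message items the way the conditional node does in this workflow.

    Messages carrying Slack's `files` array go to the "media" branch (download,
    then send to WhatsApp); everything else goes straight to the "text" branch.
    """
    branches: dict[str, list[Item]] = {"text": [], "media": []}
    for item in items:
        message = item["json"]
        has_files = bool(message.get("files"))
        branches["media" if has_files else "text"].append(item)
    return branches


if __name__ == "__main__":
    incoming = [
        {"json": {"channel": "C123", "text": "Standup in 5"}},
        {"json": {"channel": "C123", "text": "Latest mockup", "files": [{"id": "F1"}]}},
    ]
    routed = route_slack_items(incoming)
    print(len(routed["text"]), len(routed["media"]))  # 1 1
```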

The second part of the workflow to convert WhatsApp messages to Slack

The reverse direction, from WhatsApp to Slack, is more complex because WhatsApp messages frequently include audio and file attachments.

Notice the "download media" node in the workflow. This demonstrates n8n's HTTP Request node, which can send GET, POST, PUT, and DELETE requests to any API. This node type will be central to our Firecrawl integration later.

You may also notice red warning icons next to the Slack and WhatsApp nodes. They indicate missing credentials: because these nodes connect to external accounts, you need to set up credentials first.

To configure authentication, select a node and press Enter to open its settings, then click "Create new credential." You can also configure other node settings here, such as filtering by channel ID.

Try the quickstart examples or the templates library before continuing with this tutorial to get hands-on experience with n8n's interface.

Two ways of using Firecrawl with n8n

Now that you understand workflow basics and node types, let's explore how to integrate Firecrawl's AI-powered scraping engine into n8n workflows.

You have two integration options, and the right choice depends on your requirements and infrastructure preferences: call Firecrawl's API directly with HTTP Request nodes on n8n Cloud, or install the community-maintained Firecrawl node on a self-hosted n8n instance.

Method 1: Cloud Version + HTTP Requests

This method works with n8n Cloud, which requires no installation or setup. While you can also use HTTP requests in self-hosted n8n instances, the cloud version removes infrastructure concerns and gets you started immediately.

When to use this method: Choose HTTP requests when you need access to all Firecrawl API endpoints, want to get started quickly without installation, or prefer the convenience of n8n Cloud. This approach works best for teams that prioritize rapid prototyping and don't have strict data residency requirements.

We'll build a practical example: a GitHub trending scraper that extracts repositories in your chosen field.

Setting up the workflow

Start by signing up for n8n Cloud and creating a new workflow from your dashboard.

Add the Schedule Trigger:

- Click the plus icon and search for "Schedule Trigger"

- Configure it to run daily at 5 AM

- This node will automatically start your workflow each morning
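In cron terms, "daily at 5 AM" is `0 5 * * *`, which you could enter if you pick the Schedule Trigger's custom (cron) interval instead of the daily options. The run time is evaluated in the workflow's timezone (set in the workflow settings, or instance-wide via `GENERIC_TIMEZONE` on self-hosted n8n), so "5 AM" means 5 AM in that zone. The sketch below computes the next firing time for such a schedule to make that dependency explicit; `next_daily_run` is a hypothetical helper, not part of n8n.

```python
from datetime import datetime, timedelta, timezone


def next_daily_run(now: datetime, hour: int = 5, minute: int = 0) -> datetime:
    """Return the next time a 'daily at HH:MM' schedule (cron `M H * * *`) fires.

    `now` must be timezone-aware; the result uses the same timezone, mirroring
    how the schedule is evaluated in the workflow's configured zone.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        # Today's slot has already passed, so the next run is tomorrow.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    now = datetime(2025, 3, 10, 6, 30, tzinfo=timezone.utc)
    print(next_daily_run(now))  # 2025-03-11 05:00:00+00:00
```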


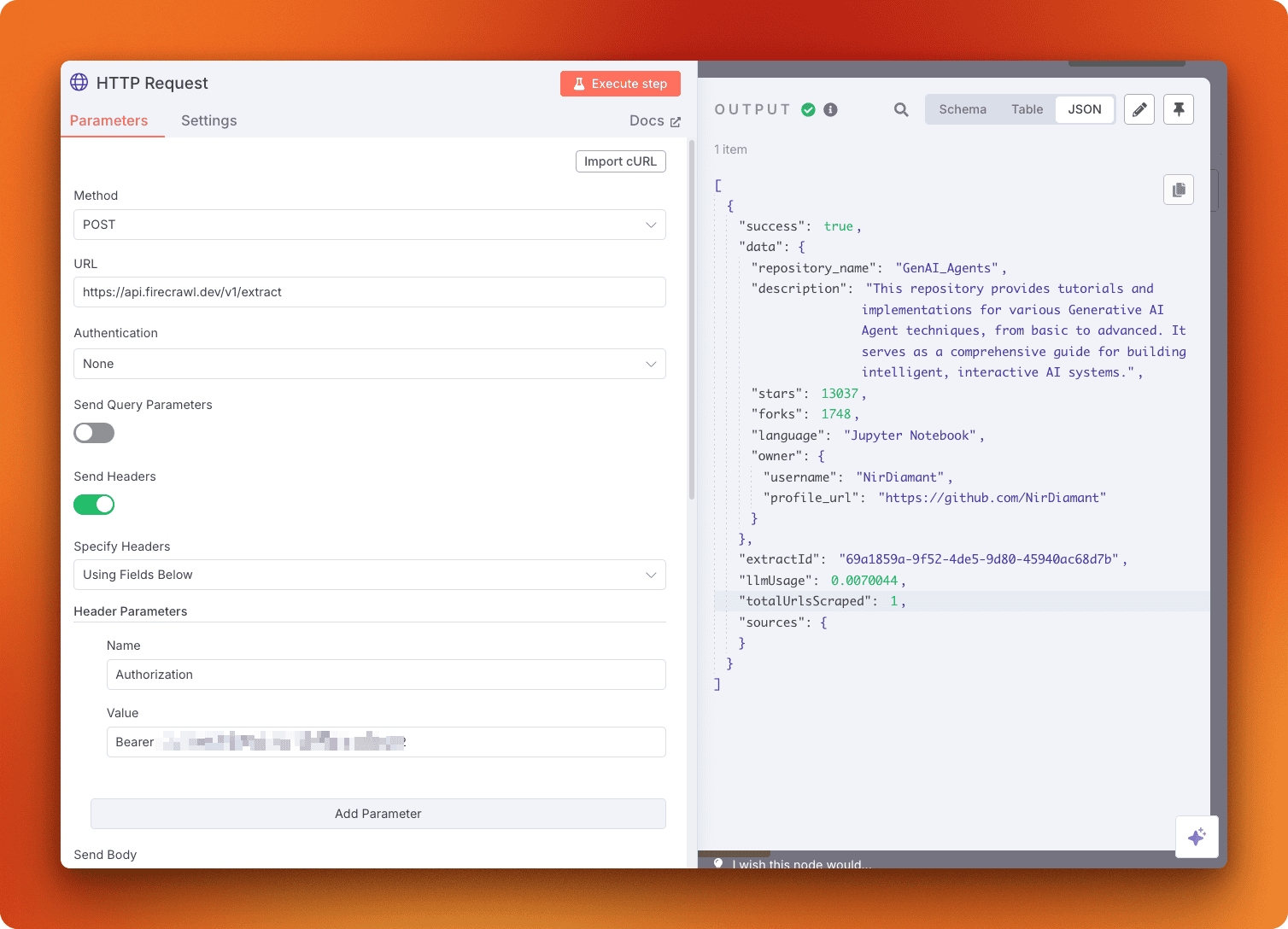

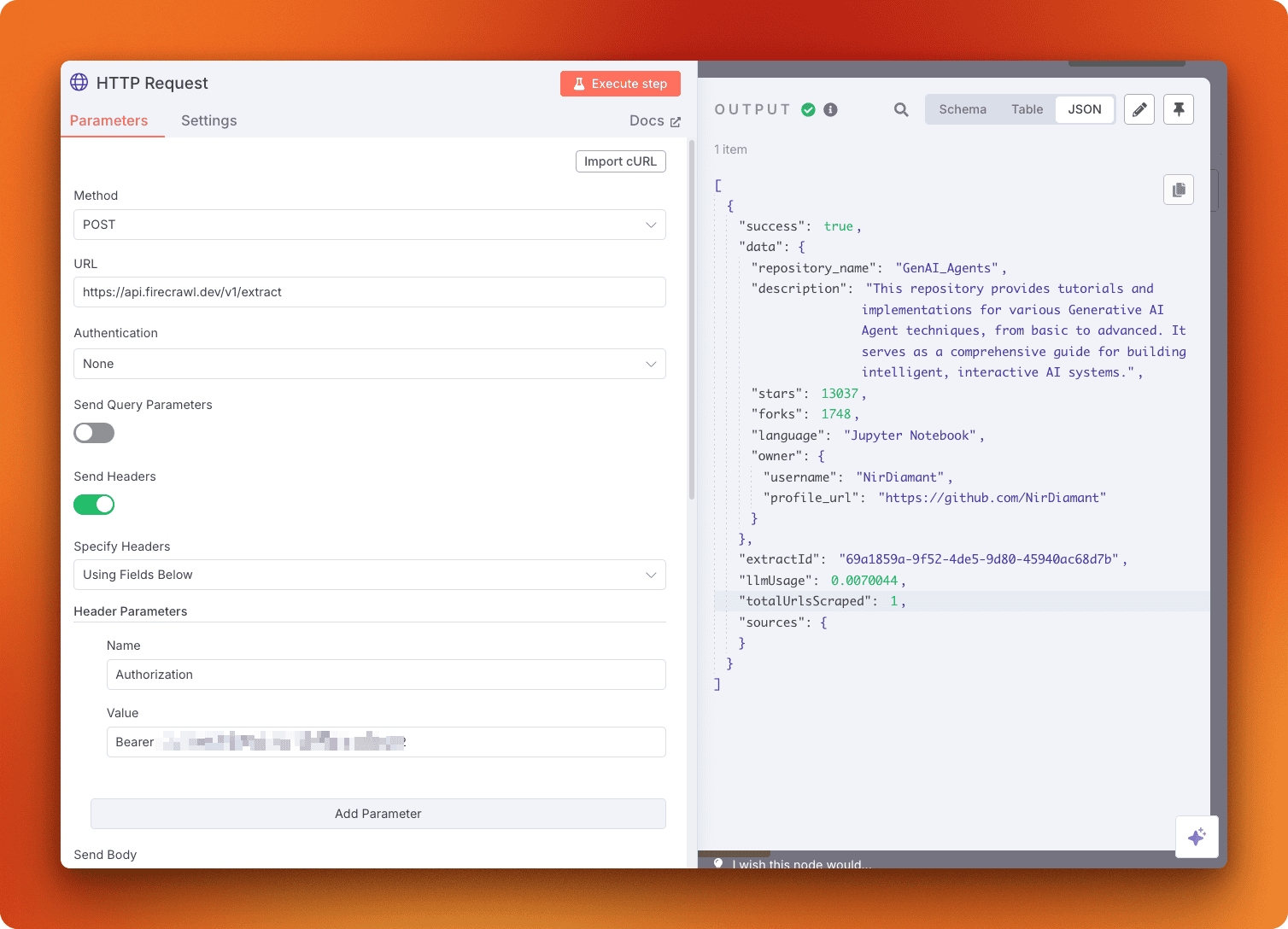

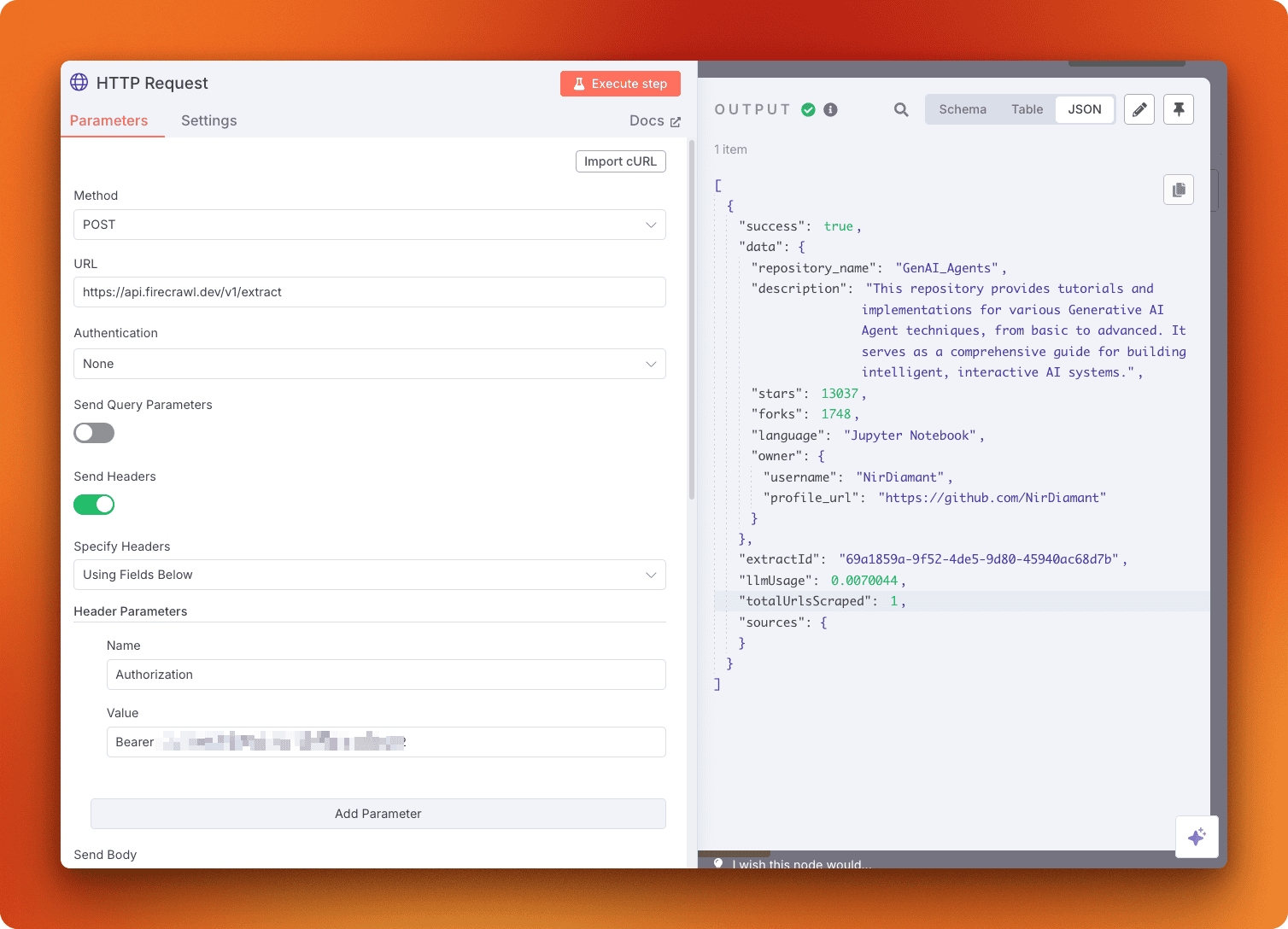

Add the HTTP Request Node:

- Search for "HTTP Request" and add it to your canvas

- Connect it to the Schedule Trigger node

- Click on the HTTP Request node to open its settings

The HTTP Request node lets you call any API endpoint. Notice the "Import cURL" button: it lets you copy API calls directly from documentation and paste them into n8n.

The cURL button highlighted in node settings on n8n
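Conceptually, Import cURL reads the method, URL, headers, and body out of a cURL command and maps them onto the node's fields. The sketch below is a deliberately simplified, hypothetical parser (`parse_curl` is not n8n's code) that handles only the flags API docs commonly emit (`--request`/`-X`, `--url`, `--header`/`-H`, `--data`/`-d`); n8n's real importer supports far more. The Firecrawl URL in the usage example assumes the v1 API.

```python
import json
import shlex
from typing import Any


def parse_curl(command: str) -> dict[str, Any]:
    """Pull method, URL, headers, and JSON body out of a simple cURL command."""
    # Join backslash line continuations, then let shlex handle the shell quoting.
    tokens = shlex.split(command.replace("\\\n", " "))
    if not tokens or tokens[0] != "curl":
        raise ValueError("expected a command starting with 'curl'")
    parsed: dict[str, Any] = {"method": None, "url": None, "headers": {}, "body": None}
    i = 1
    while i < len(tokens):
        flag = tokens[i]
        value = tokens[i + 1] if i + 1 < len(tokens) else ""
        if flag in ("-X", "--request"):
            parsed["method"] = value.upper()
        elif flag == "--url":
            parsed["url"] = value
        elif flag in ("-H", "--header"):
            name, _, header_value = value.partition(":")
            parsed["headers"][name.strip()] = header_value.strip()
        elif flag in ("-d", "--data", "--data-raw"):
            parsed["body"] = json.loads(value)
        else:
            # Simplification: a bare token is the URL; any other flag is ignored on its own.
            if not flag.startswith("-"):
                parsed["url"] = flag
            i += 1
            continue
        i += 2  # skip the flag and its value
    # Like curl itself, default to POST when a body is present and GET otherwise.
    if parsed["method"] is None:
        parsed["method"] = "POST" if parsed["body"] is not None else "GET"
    return parsed


if __name__ == "__main__":
    copied = """curl --request POST \\
  --url https://api.firecrawl.dev/v1/extract \\
  --header 'Authorization: Bearer fc-YOUR-KEY' \\
  --header 'Content-Type: application/json' \\
  --data '{"urls": ["https://github.com/trending"], "prompt": "Extract all repository details related to MLOps"}'"""
    request = parse_curl(copied)
    print(request["method"], request["url"])  # POST https://api.firecrawl.dev/v1/extract
```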

Configuring the Firecrawl API call

Navigate to Firecrawl's Extract endpoint documentation to set up your API request.

Firecrawl API reference showing the Extract endpoint

The Extract endpoint converts web pages into structured JSON using AI. Click "Try it" to open the interactive API explorer.

API parameters interface for the Extract endpoint

Configure your request:

- URLs: Add a new URL and set it to https://github.com/trending

- Prompt: "Extract all repository details related to MLOps" (or your preferred topic)

- API Key: Add your Firecrawl API key in the Bearer token field

Click "Send" to test your request. If no MLOps repositories are trending, try a broader topic like "Python" to verify the setup works. A 200 response code indicates success.
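If you want to check the same request outside the docs explorer, the sketch below sends it with Python's standard library. It assumes the v1 endpoint `https://api.firecrawl.dev/v1/extract`, the `urls` and `prompt` body fields shown in the explorer, and a key stored in a `FIRECRAWL_API_KEY` environment variable (a name chosen here for illustration). It also assumes Extract runs as an asynchronous job that returns an `id` to poll at `/v1/extract/{id}`; verify that behavior and the status values against the current API reference before relying on it.

```python
import json
import os
import time
import urllib.request
from typing import Any, Optional

API_BASE = "https://api.firecrawl.dev/v1"  # assumption: the v1 API shown in the explorer


def build_extract_body(urls: list[str], prompt: str) -> dict[str, Any]:
    """Request body for the Extract endpoint: which pages to read and what to pull out."""
    return {"urls": urls, "prompt": prompt}


def call_firecrawl(method: str, path: str, api_key: str, body: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Send one authenticated JSON request and return the parsed JSON response."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    request = urllib.request.Request(
        API_BASE + path,
        data=data,
        method=method,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


def run_extract(urls: list[str], prompt: str, api_key: str, poll_seconds: float = 2.0, max_polls: int = 60) -> Any:
    """Start an extract job and poll until it finishes (assumes asynchronous jobs)."""
    job = call_firecrawl("POST", "/extract", api_key, build_extract_body(urls, prompt))
    if "id" not in job:
        return job.get("data")  # fall back if the response already contains the data
    for _ in range(max_polls):
        status = call_firecrawl("GET", f"/extract/{job['id']}", api_key)
        if status.get("status") == "completed":
            return status.get("data")
        if status.get("status") in ("failed", "cancelled"):
            raise RuntimeError(f"extract job ended with status {status.get('status')!r}")
        time.sleep(poll_seconds)
    raise TimeoutError("extract job did not finish within the polling budget")


if __name__ == "__main__" and os.environ.get("FIRECRAWL_API_KEY"):
    result = run_extract(
        ["https://github.com/trending"],
        "Extract all repository details related to MLOps",
        os.environ["FIRECRAWL_API_KEY"],
    )
    print(json.dumps(result, indent=2))
```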

Importing to n8n

Once your API call works in the documentation:

- Copy the generated cURL command

- Return to your n8n HTTP Request node settings

- Click "Import cURL" and paste the command

- Click "Import"; n8n automatically fills in all parameters

- Click "Execute node" to test the integration

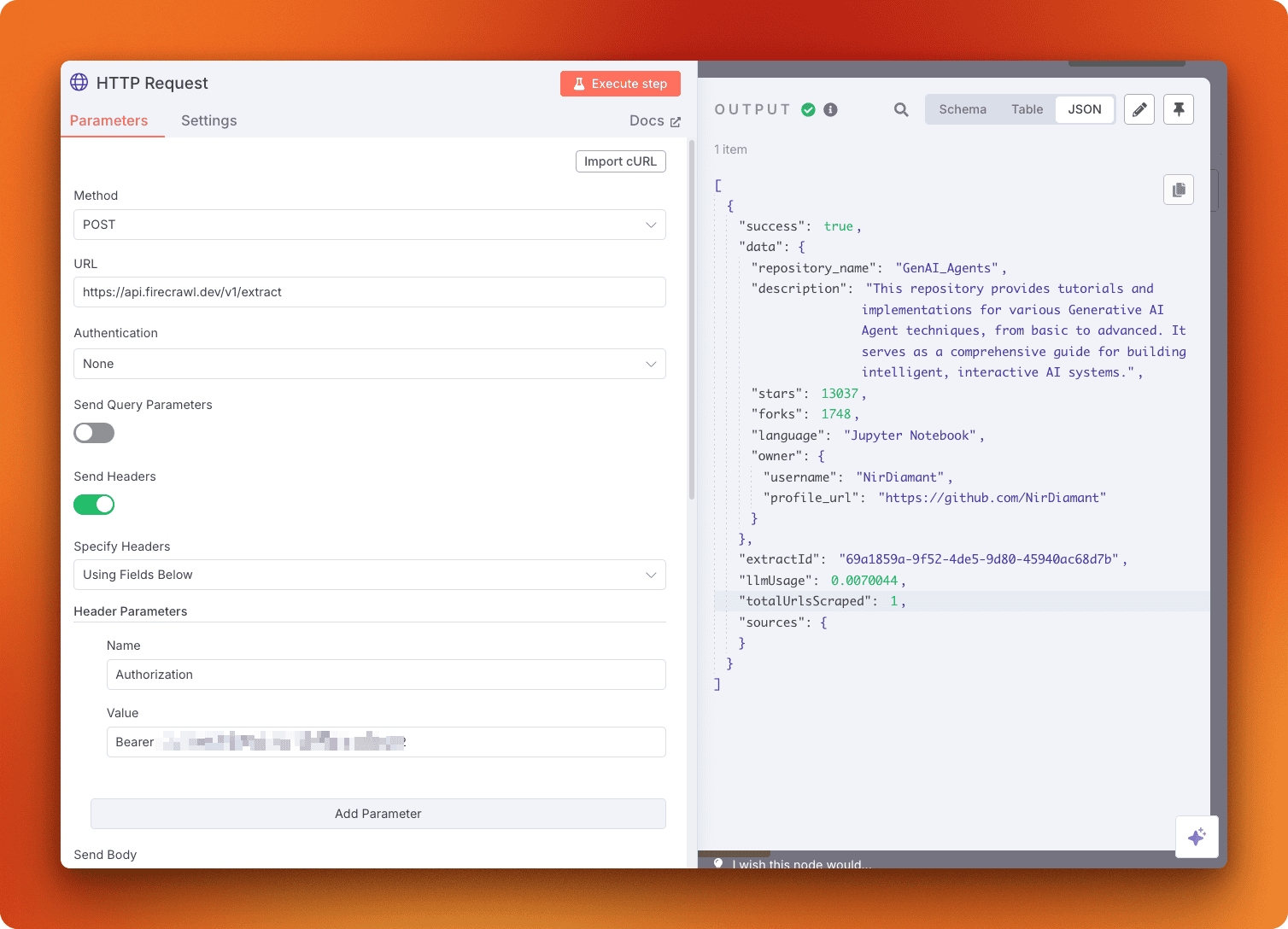

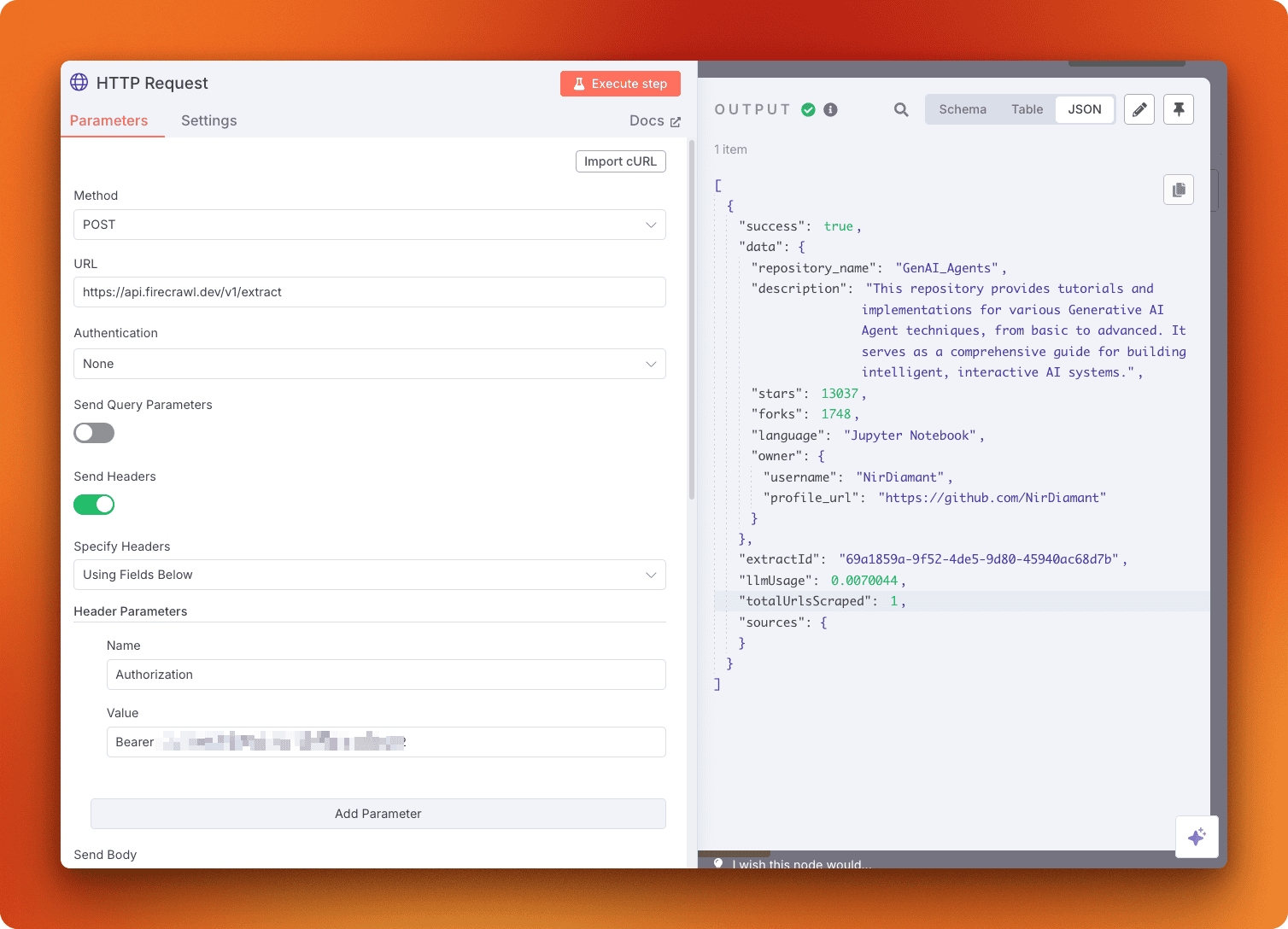

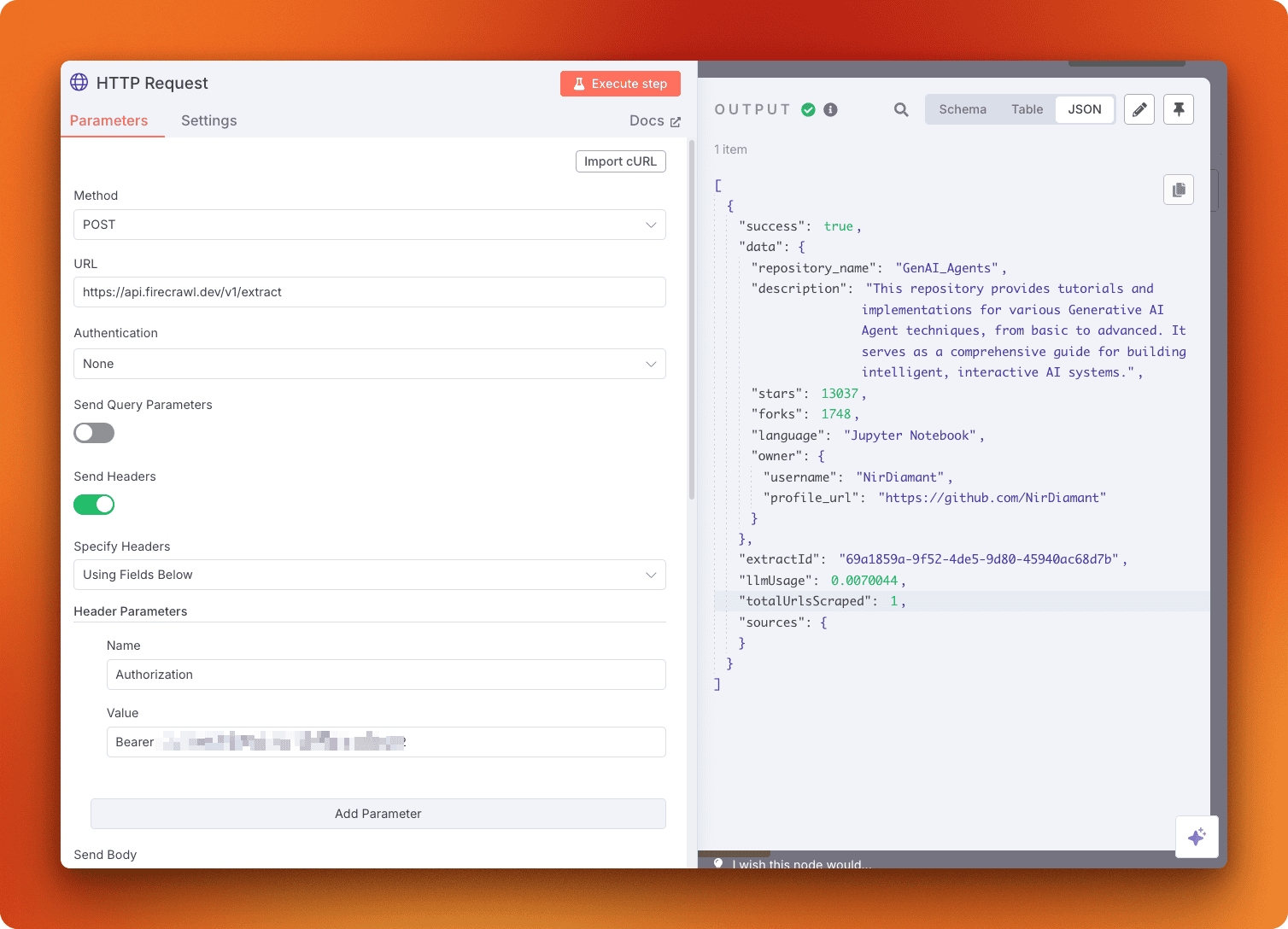

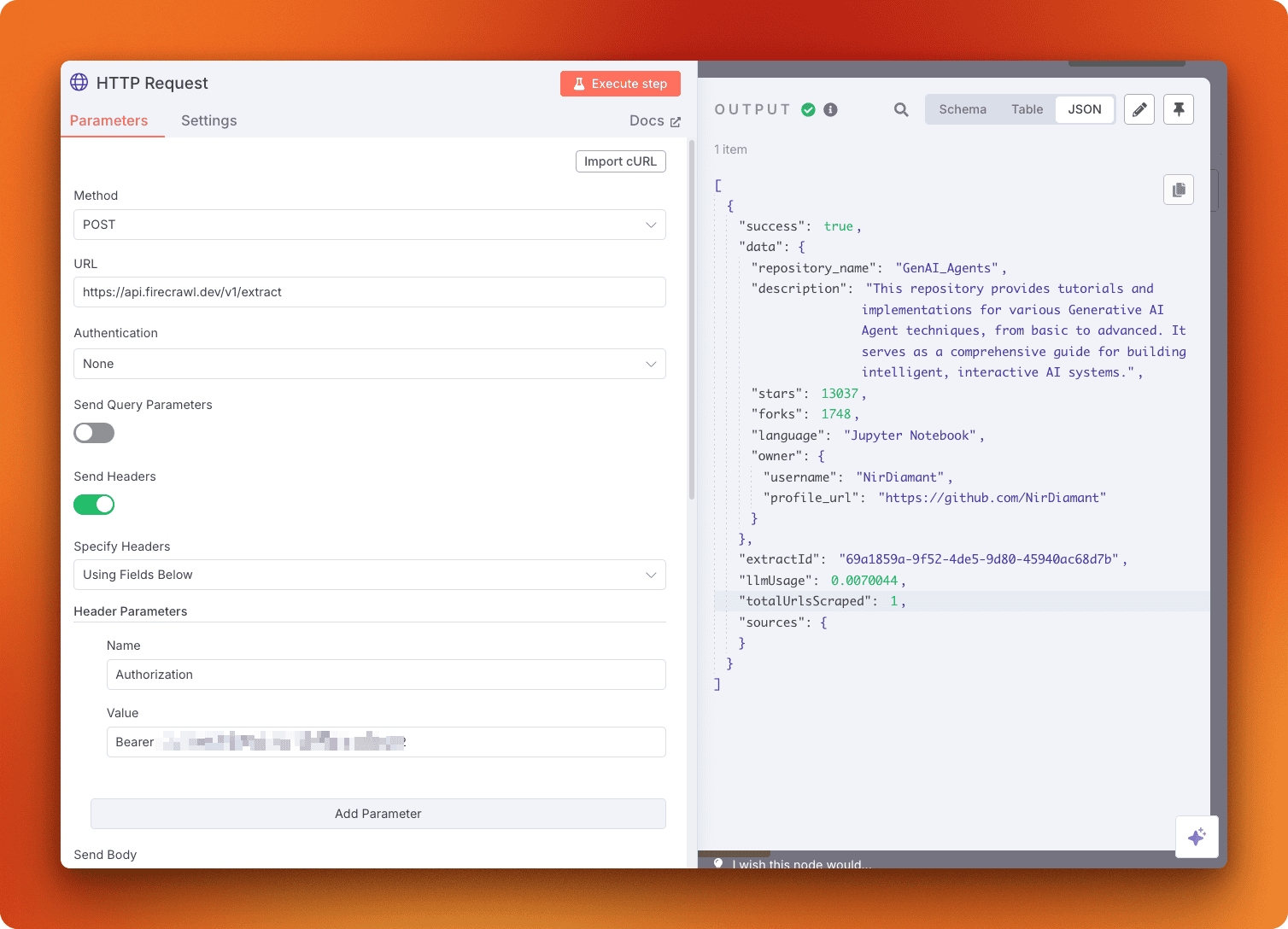

HTTP Request node showing a successful API response

You should see the extracted repository data in the output panel. The HTTP Request node is now configured and ready to run on your schedule.

Expanding the workflow

With the core scraping configured, you can extend this workflow by adding nodes to:

- Filter results for specific criteria

- Send email or Slack notifications

- Store data in databases or spreadsheets

- Compare current trends with historical data
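The filtering and comparison steps could live in a Code node or a small external service. The sketch below is hypothetical: it assumes each extracted repository has `name` and `stars` fields, which depends entirely on your prompt or schema, so adjust the keys to match the output you actually get.

```python
from typing import Any


def _stars(repo: dict[str, Any]) -> int:
    """Parse a star count that may arrive as an int or a string like "1,234"."""
    return int(str(repo.get("stars", 0)).replace(",", ""))


def filter_repos(repos: list[dict[str, Any]], min_stars: int) -> list[dict[str, Any]]:
    """Keep repositories at or above a star threshold."""
    return [repo for repo in repos if _stars(repo) >= min_stars]


def new_since_last_run(today: list[dict[str, Any]], previous: list[dict[str, Any]]) -> list[str]:
    """Names of repositories in today's results that were absent from the previous run."""
    seen = {repo["name"] for repo in previous}
    return sorted(repo["name"] for repo in today if repo["name"] not in seen)


if __name__ == "__main__":
    previous = [{"name": "org/feature-store", "stars": 900}]
    today = [
        {"name": "org/feature-store", "stars": 950},
        {"name": "org/model-registry", "stars": "120"},
        {"name": "org/tiny-demo", "stars": 8},
    ]
    popular = filter_repos(today, min_stars=100)
    print(new_since_last_run(popular, previous))  # ['org/model-registry']
```

Storing each run's results (for example in a spreadsheet or database node) gives `new_since_last_run` its `previous` input on the next day.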

The HTTP Request approach gives you complete control over API parameters and works with any Firecrawl endpoint, an advantage you won't get when using the community-maintained Firecrawl node.

Method 2: Self-Hosted Version + Firecrawl Community Node

Some automation workflows require complete data control and strict security compliance. Financial institutions processing sensitive customer data, healthcare organizations handling patient records, or government agencies managing classified information often cannot use cloud-based automation platforms. These scenarios demand self-hosted solutions.

When to use this method: Choose the community node approach when you need complete data control, have security compliance requirements that prevent cloud usage, or prefer a simplified interface for basic scraping tasks. This method works best for organizations with existing infrastructure and teams comfortable managing self-hosted applications.

n8n's self-hosted version addresses these requirements while offering an additional advantage: community nodes. These third-party integrations extend n8n's functionality beyond the official node library.

Installing Self-Hosted n8n

If you have Docker Desktop installed, setting up n8n takes just two commands.

First, create a volume to persist your workflow data:

```bash
docker volume create n8n_data
```

Then launch the n8n instance:

```bash
docker run -it --rm --name n8n -p 5678:5678 -v n8n_data:/home/node/.n8n docker.n8n.io/n8nio/n8n
```

Your n8n instance will be available at http://localhost:5678. The interface looks identical to the cloud version, but you'll need to register your instance to unlock additional features.
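If you script this setup (for example in CI), it helps to wait until the instance is actually serving before opening the editor or importing workflows. The sketch below polls the `/healthz` endpoint that n8n exposes for monitoring; the base URL and timing values are assumptions you can change, and `wait_for_n8n` is a hypothetical helper.

```python
import time
import urllib.request


def wait_for_n8n(base_url: str = "http://localhost:5678", timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll GET {base_url}/healthz; True once it answers 200, False if timeout_s passes first."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/healthz", timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            pass  # connection refused or non-2xx (HTTPError): the container is still starting
        time.sleep(interval_s)
    return False
```

Because the `docker run` command above stays attached to your terminal, you would call `wait_for_n8n()` from a separate terminal or script.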

Registering your instance

When you first launch self-hosted n8n, it runs in unregistered mode. To access premium features, navigate to Settings > Usage and Plan, then register with your email to receive a license key.

Registration unlocks:

- Folders: Organize workflows into structured directories

- Debug in editor: Pin and copy execution data during development

- Workflow history: 24 hours of version history for rollbacks

- Custom execution data: Save and annotate execution metadata

Note that even the registered self-hosted version doesn't have the full feature set of n8n Pro or Enterprise.

Installing the Firecrawl Community Node

Self-hosted n8n allows installation of community-created nodes that aren't available in the cloud version. The Firecrawl community node provides a simplified interface for common Firecrawl operations.

Navigate to Settings > Community Nodes and click "Install a community node."

Enter the package name: n8n-nodes-firecrawl and click "Install".

You should see a "Package installed successfully" confirmation message.

Using the Firecrawl node

Return to your dashboard and create a new workflow. Search for "firecrawl" to see the available actions:

Firecrawl node showing available actions

The community node currently supports six operations:

- Submit a crawl job

- Check crawl job status

- Crawl URL with websocket monitoring

- Submit a crawl job with webhook

- Scrape a URL and get its content

- Map a website and get URLs
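The first two operations correspond to Firecrawl's asynchronous crawl flow: you submit a job, receive an ID, and poll its status until it completes. The sketch below shows that flow, assuming the v1 `POST /crawl` and `GET /crawl/{id}` endpoints with `id`, `status`, and `data` fields; the `limit` value is illustrative. The HTTP call is passed in as a function (a hypothetical `Transport`), so the loop can be exercised offline with canned responses.

```python
import time
from typing import Any, Callable, Optional

# Hypothetical transport: (method, path, JSON body or None) -> parsed JSON response.
Transport = Callable[[str, str, Optional[dict[str, Any]]], dict[str, Any]]


def crawl_and_wait(send: Transport, url: str, limit: int = 10, poll_seconds: float = 2.0, max_polls: int = 150) -> list[dict[str, Any]]:
    """Submit a crawl, poll its status, and return the pages once it completes.

    Large crawls may split results across pages via a `next` link; this sketch ignores that.
    """
    job = send("POST", "/crawl", {"url": url, "limit": limit})  # "Submit a crawl job"
    for _ in range(max_polls):
        status = send("GET", f"/crawl/{job['id']}", None)  # "Check crawl job status"
        if status.get("status") == "completed":
            return status.get("data", [])
        if status.get("status") == "failed":
            raise RuntimeError(f"crawl {job['id']} failed")
        time.sleep(poll_seconds)
    raise TimeoutError("crawl did not finish within the polling budget")


if __name__ == "__main__":
    # Canned responses stand in for the API so the flow runs offline.
    replies = iter([
        {"success": True, "id": "job-1"},
        {"status": "scraping"},
        {"status": "completed", "data": [{"markdown": "# Home"}]},
    ])
    pages = crawl_and_wait(lambda method, path, body: next(replies), "https://example.com", poll_seconds=0)
    print(len(pages))  # 1
```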

Configuring the scrape action

Let's configure the most commonly used action: scraping a URL.

- Select "Scrape a URL and get its content"

- Click "Create new credential" to add your API key

Firecrawl credential configuration interface

- Paste your Firecrawl API key and save

- Configure the scrape parameters:

  - URL: The webpage you want to scrape

  - Format: Choose HTML, Markdown, or Links

- Click "Execute node" to test the configuration

The credentials will be saved for all future Firecrawl nodes in your workflows.
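The scrape action boils down to a single request. The sketch below builds that request body and reads the result, assuming the v1 `/scrape` endpoint, its `formats` list (`"markdown"`, `"html"`, `"links"`), and a response that nests content under `data`. The mapping from the node's Format labels to API format names (`FORMAT_NAMES`) is an assumption about how the node translates its options, not taken from the node's source.

```python
from typing import Any

# Hypothetical mapping from the node's Format dropdown to API format names.
FORMAT_NAMES = {"Markdown": "markdown", "HTML": "html", "Links": "links"}


def build_scrape_body(url: str, formats: list[str]) -> dict[str, Any]:
    """Request body for POST /scrape with the selected output formats."""
    unknown = [f for f in formats if f not in FORMAT_NAMES]
    if unknown:
        raise ValueError(f"unsupported format(s): {unknown}")
    return {"url": url, "formats": [FORMAT_NAMES[f] for f in formats]}


def page_content(response: dict[str, Any], fmt: str = "markdown") -> Any:
    """Pull one format out of a scrape response shaped like {"success": ..., "data": {...}}."""
    if not response.get("success"):
        raise RuntimeError(response.get("error", "scrape failed"))
    return response["data"].get(fmt)


if __name__ == "__main__":
    print(build_scrape_body("https://docs.n8n.io", ["Markdown", "Links"]))
    # {'url': 'https://docs.n8n.io', 'formats': ['markdown', 'links']}
```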

Community node limitations

While the community node simplifies common operations, it doesn't support all Firecrawl API endpoints. The Extract endpoint with custom prompts, for example, isn't available. For complete API access, use the HTTP Request method even in self-hosted environments.

The community node works best for straightforward scraping tasks where you need clean, structured data without complex extraction requirements.

Now that you understand both configuration approaches, try building a simple workflow that scrapes a news website or documentation page using the community node. This hands-on practice will help you understand the differences between both methods and determine which fits your workflow needs.

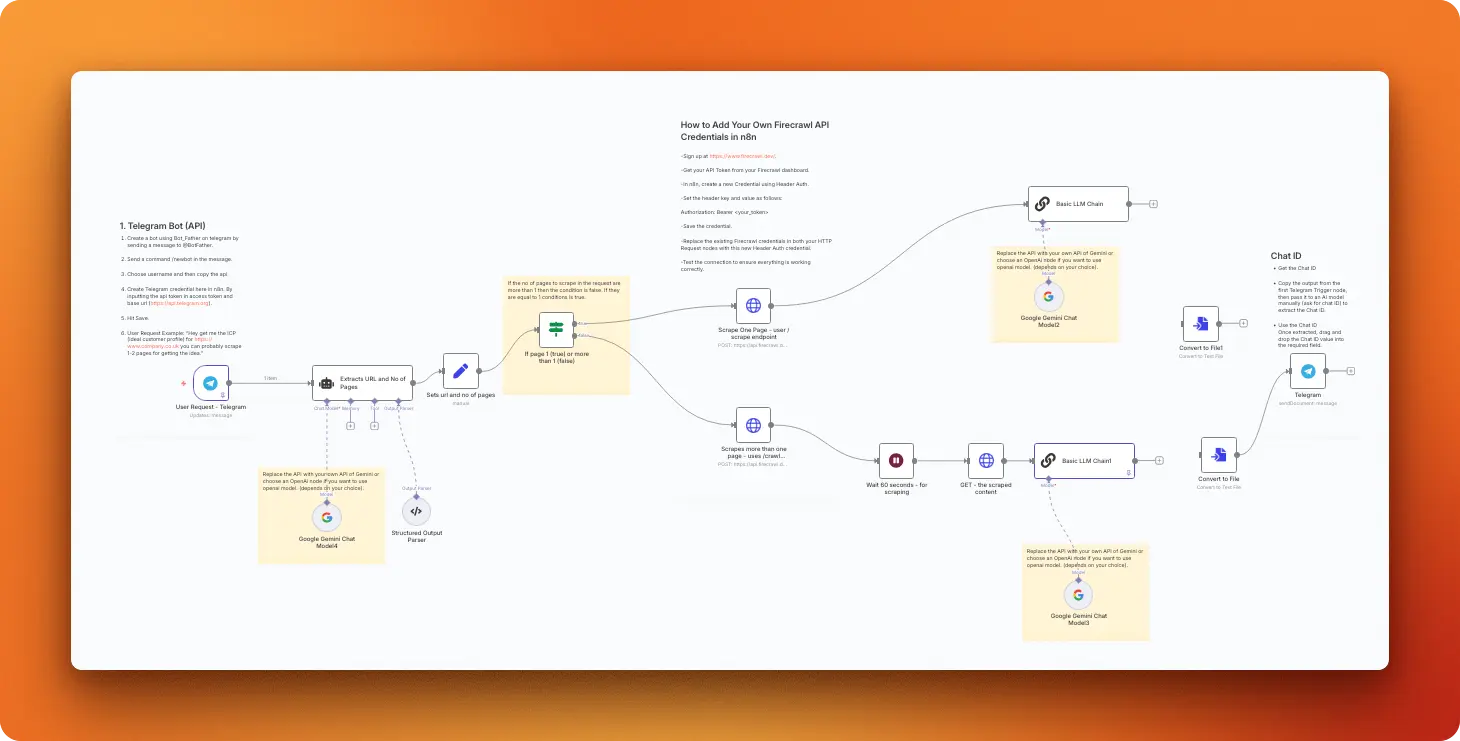

Practical example: Chatbot with web access

Let's build a complete example that demonstrates both technologies working together: an AI chatbot that can search the web in real-time using Firecrawl's search capabilities. This example uses Method 1 (HTTP requests with n8n Cloud) to showcase the full integration potential.

Building the core workflow

To get started, create a new workflow in n8n Cloud. The foundation requires two essential components that work together to create an interactive AI experience:

- "When chat message received" trigger: creates an interactive chat interface

- "AI Agent" node: connect this to your trigger node

Two nodes connected in the workflow canvas

The AI Agent node implements a ReAct (reason + act) pattern. This approach gives your chatbot the ability to think through problems step by step and use tools when needed to find answers.
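The reason-act loop can be sketched in a few lines. This illustrates the general ReAct pattern with a scripted stand-in for the model; it is not the AI Agent node's implementation, and `fake_model` and the `web_search` tool are hypothetical. On each turn the model either requests a tool call, whose result is appended to the transcript as an observation, or returns a final answer.

```python
from typing import Callable

Decision = tuple[str, str]  # ("tool name", argument) or ("final", answer)
Tool = Callable[[str], str]


def react_loop(question: str, model: Callable[[list[str]], Decision], tools: dict[str, Tool], max_steps: int = 5) -> str:
    """Alternate model decisions and tool calls until the model gives a final answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        action, argument = model(transcript)  # reason: decide what to do next
        if action == "final":
            return argument
        observation = tools[action](argument)  # act: run the chosen tool
        transcript.append(f"Action: {action}({argument!r})")
        transcript.append(f"Observation: {observation}")  # feeds the next reasoning step
    return "Stopped: step limit reached"


def fake_model(transcript: list[str]) -> Decision:
    """Scripted stand-in for an LLM: search once, then answer from the observation."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return ("web_search", "latest n8n release")
    return ("final", "Based on the search: " + observations[-1].removeprefix("Observation: "))


if __name__ == "__main__":
    tools = {"web_search": lambda q: f"top result for {q!r}"}
    print(react_loop("What is the latest n8n release?", fake_model, tools))
```

The step limit matters in practice: without it, a model that keeps requesting tools would never terminate.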

Configuring the AI agent

The AI Agent node has three connection points: Chat Model, Memory, and Tools. Each handles a different aspect of the chatbot's capabilities. You'll configure the chat model and memory first, then add a tool once the basic chatbot works.

Chat Model Setup:

Start by clicking the "+" next to "Chat Model" and adding your preferred language model provider. Configure it with your API credentials and select a model like gpt-4o-mini for cost-effective performance.

Memory Configuration: Next, add "Simple Memory", which keeps recent conversation history in the n8n instance's own memory. This allows the chatbot to remember previous messages in your conversation, but the history is not persisted if the instance restarts. For production workflows, consider external databases like MongoDB, Redis, or PostgreSQL for better persistence and control.

Workflow showing AI Agent with model and memory configured
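Simple Memory keeps only a window of recent exchanges per chat session (its Context Window Length setting controls how many). The sketch below models that idea with a bounded `deque` per session ID; `WindowMemory` is hypothetical and only illustrates why older turns fall out of context and why in-process memory disappears with the process.

```python
from collections import defaultdict, deque


class WindowMemory:
    """Keep the last `window` exchanges per chat session, in process memory only."""

    def __init__(self, window: int = 5) -> None:
        # One bounded deque per session ID; appending to a full deque drops the oldest entry.
        self._sessions: defaultdict[str, deque] = defaultdict(lambda: deque(maxlen=window))

    def add(self, session_id: str, user_message: str, reply: str) -> None:
        self._sessions[session_id].append((user_message, reply))

    def context(self, session_id: str) -> list[tuple[str, str]]:
        """Exchanges the model would see on the next turn, oldest first."""
        return list(self._sessions.get(session_id, ()))


if __name__ == "__main__":
    memory = WindowMemory(window=2)
    for i in range(3):
        memory.add("session-1", f"question {i}", f"answer {i}")
    print(memory.context("session-1"))  # [('question 1', 'answer 1'), ('question 2', 'answer 2')]
```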

Once configured, test your basic chatbot by clicking "Open chat" and sending a few messages. This testing step serves two purposes: it verifies the setup works and exposes the chatInput field that we'll need for the Firecrawl integration.

Adding web search capabilities

With the basic chatbot working, we can extend it with web search capabilities through Firecrawl's Search endpoint. Click the "Tools" edge and add an HTTP Request node to create this connection.

Navigate to Firecrawl's Search endpoint documentation and configure a test request to understand the API structure:

Search endpoint configuration interface

Copy the generated cURL command and import it into your HTTP Request node. The query value you used for testing doesn't matter at this stage, because you'll replace it with the user's chat message in a later step.
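For reference, the imported request comes down to a small JSON body. The sketch below builds it and condenses the results into text an agent can read, assuming the v1 `POST /search` endpoint with `query` and `limit` fields and results under `data` carrying `title`, `url`, and `description` keys; treat those field names as assumptions to check against the Search reference. In n8n the tool typically hands the raw JSON response to the agent, so condensing like this is optional and mainly saves tokens.

```python
from typing import Any


def build_search_body(query: str, limit: int = 5) -> dict[str, Any]:
    """Request body for POST /search; `query` is the part the chatbot fills in."""
    if not query.strip():
        raise ValueError("query must not be empty")
    return {"query": query, "limit": limit}


def summarize_results(response: dict[str, Any], max_chars: int = 200) -> str:
    """Turn search results into a compact, numbered list for the model to read."""
    lines = []
    for i, hit in enumerate(response.get("data", []), start=1):
        description = (hit.get("description") or "")[:max_chars]
        lines.append(f"{i}. {hit.get('title', '(untitled)')} <{hit.get('url', '')}>: {description}")
    return "\n".join(lines) or "No results."


if __name__ == "__main__":
    sample = {"success": True, "data": [{"title": "n8n docs", "url": "https://docs.n8n.io", "description": "Workflow automation docs"}]}
    print(summarize_results(sample))  # 1. n8n docs <https://docs.n8n.io>: Workflow automation docs
```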

Configure the Tool Description: The AI Agent needs instructions about when to use this tool. In the node description field, add:

"Use this tool to search the web for real-time information."

This description guides the AI Agent's decision-making process about when to search the web.

Set Dynamic Query Parameter: The final step connects user input to the search functionality. Scroll to the JSON configuration section and switch from "Fixed" to "Expression" mode:

JSON configuration showing Fixed option selected

Replace the static query with the dynamic chatInput by dragging it from the available fields:

Dragging chatInput to the query parameter
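One thing to watch in expression mode: if the chat input is dropped inside a quoted JSON string, the user's text is pasted in verbatim, so a message containing a double quote or a newline produces invalid JSON and the request fails. Serializing the value instead of pasting it avoids this; in n8n that usually means wrapping the value in `JSON.stringify(...)` inside the expression. The Python sketch below reproduces the failure and the fix outside n8n; `naive_body` and `safe_body` are hypothetical helpers.

```python
import json


def naive_body(user_message: str) -> str:
    """Mimics pasting the raw chat text between quotes in a JSON template."""
    return '{"query": "' + user_message + '", "limit": 5}'


def safe_body(user_message: str) -> str:
    """Serializes the value, so quotes and newlines are escaped correctly."""
    return json.dumps({"query": user_message, "limit": 5})


if __name__ == "__main__":
    message = 'Summarize the "Getting started" page'
    try:
        json.loads(naive_body(message))
    except json.JSONDecodeError as err:
        print("naive body is invalid JSON:", err.msg)
    print(json.loads(safe_body(message))["query"])  # Summarize the "Getting started" page
```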

Testing your web-enabled chatbot

Return to the canvas and open the chat interface. Your chatbot can now access live web information through Firecrawl's search engine.

Chat interface showing response with web search results

The AI Agent evaluates each user question and decides when to search the web. This gives users access to current information that goes beyond the model's training data. You can adapt this same pattern for any workflow where AI agents need access to fresh web content or specific domain knowledge.

Conclusion

This tutorial demonstrated two distinct approaches for integrating Firecrawl's web scraping capabilities with n8n workflows. The HTTP Request method offers complete API access and works well with n8n Cloud, making it suitable for most automation workflows. Meanwhile, the community node approach provides a simplified interface for self-hosted environments where data control and security compliance are priorities.

For most workflows, Method 1 (HTTP Requests with n8n Cloud) offers the best balance of functionality and ease of use. You get immediate access to all Firecrawl endpoints, can import API calls directly from documentation, and avoid infrastructure setup. The chatbot example shows how this approach handles complex integrations with minimal configuration. Method 2 works best when your organization requires on-premises data processing or when you're building simpler scraping workflows that don't need advanced extraction features.

Continue Your Automation Journey

- Explore web scraping libraries for custom implementations

- Learn about browser automation tools for complex interactions

- Build AI agents with our agent frameworks guide

- Convert websites to llms.txt files for use with LLMs
