If you're working with data, you've probably noticed something: raw data alone doesn't tell you much. You need context, additional details, and connections to turn those basic records into something actually useful. And the problem is getting worse — over 40% of CRM data goes stale every year, and Gartner research shows poor data quality costs organizations an average of $12.9 million annually.

That's where data enrichment comes in. Simply put, it's the process of taking your existing data and making it better by adding relevant information from other sources. Think of it like filling in the blanks on a form. You start with a name and email address, then add job title, company size, location, and suddenly you understand who you're really talking to.

As one developer building a B2B data integration tool shared on Hacker News:

Integrating business data into a B2B app or AI agent process is a pain. On one side there's web data providers (Clearbit, Apollo, ZoomInfo) then on the other, 150 year old legacy providers based on government data (D&B, Factset, Moody's, etc). You'd be surprised to learn how much manual work is still happening — teams of people just manually researching business entities all day. — mfrye0 on Hacker News

This guide walks you through everything you need to know about data enrichment: what it actually means, why businesses care about it, and how you can do it yourself. Whether you're trying to understand your customers better, run smarter marketing campaigns, or just make better decisions with your data, this is worth understanding.

Here's what we'll cover:

- The basics of data enrichment and how it works

- Tools that make the process easier (including AI-powered options like Firecrawl)

- Practical strategies you can actually implement

- Real examples from companies doing this well

- Common problems and how to avoid them

The good news? Modern tools have made data enrichment much easier than it used to be. You don't need a huge team or massive budget to get started. Whether you're just learning about this or looking to improve what you're already doing, this guide will help you enhance your data quality and get better results for your business.

What is data enrichment?

Data enrichment is the process of enhancing, refining, and augmenting existing data by combining it with relevant information from multiple sources. This process transforms basic data points into comprehensive, actionable insights that drive better business decisions.

Recent research confirms the growing importance of automated enrichment. A 2025 IEEE review of AI and ML in data governance found that poor data quality costs businesses 15% to 25% of annual revenue, and that traditional rule-based validation is no longer adequate for large-scale, dynamic data ecosystems. Meanwhile, researchers at the University of Illinois Chicago demonstrated that LLM-generated metadata enrichment consistently outperforms content-only retrieval baselines, achieving 82.5% precision when enriching enterprise knowledge bases with automated keyword, summary, and intent metadata.

Understanding data enrichment tools

Modern data enrichment tools automate the complex process of collecting and integrating data from diverse sources. These tools typically offer:

- Automated data collection from websites, APIs, and databases

- Data validation and cleaning capabilities

- Schema mapping and transformation features

- Integration with existing business systems

- Quality assurance and monitoring

For example, web scraping tools like Firecrawl let you gather structured data from websites automatically using AI. Instead of manually copying and pasting information, you can use features like Firecrawl's Agent to describe what data you need in plain English, and it handles the extraction for you. We'll walk through a complete Firecrawl data enrichment workflow later in this guide.

Types of data enrichment services

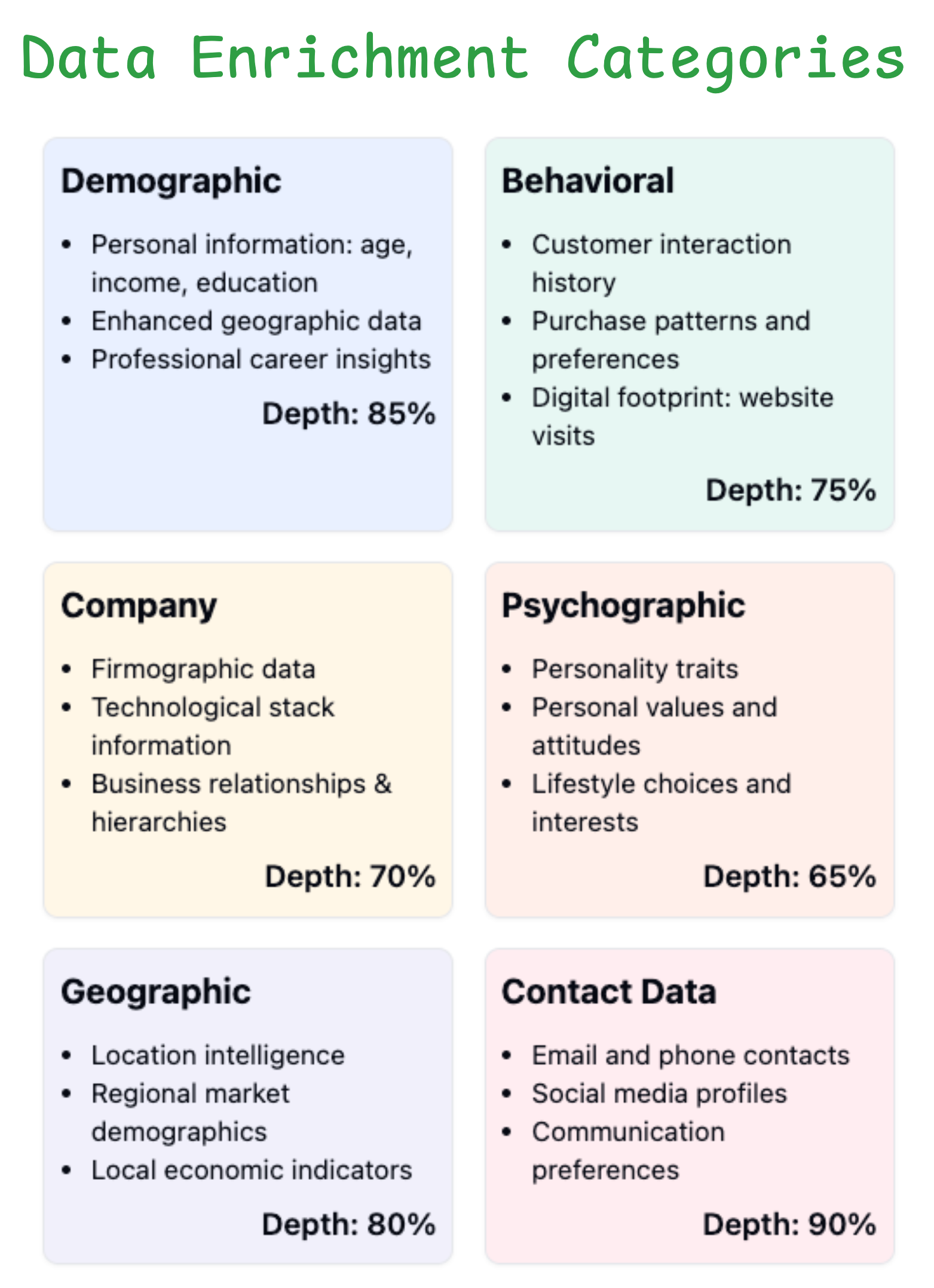

Data enrichment services generally fall into six main categories:

-

Demographic

- Adding personal information like age, income, education

- Enhancing geographic data with location-specific insights

- Including professional and career-related information

-

Behavioral

- Incorporating customer interaction history

- Adding purchase patterns and preferences

- Including digital footprint data like website visits and engagement

-

Company

- Adding firmographic data (company size, revenue, industry)

- Including technological stack information

- Incorporating business relationships and hierarchies

-

Psychographic

- Adding personality traits and characteristics

- Including personal values and attitudes

- Incorporating lifestyle choices and interests

- Adding motivation and decision-making patterns

-

Geographic

- Location intelligence and spatial analysis

- Regional market data and demographics

- Geographic business opportunities

- Local economic indicators

- Competitive landscape by region

-

Contact Data

- Email addresses and phone numbers

- Social media profiles

- Professional contact details

- Business communication preferences

- Contact verification and validation

These different types of data enrichment services can be combined and integrated to create comprehensive data profiles. For example, combining demographic and behavioral data provides deeper customer insights, while merging company and geographic data enables better B2B market analysis. The key is selecting the right combination of enrichment types based on your specific business needs and objectives.

As we move into examining B2B data enrichment specifically, we'll see how these various enrichment types come together to support business decision-making and growth strategies.

B2B data enrichment fundamentals

B2B data enrichment focuses specifically on enhancing business-related information to improve lead generation, account-based marketing, and sales processes. Key aspects include:

- Company verification and validation

- Decision-maker identification and contact information

- Industry classification and market positioning

- Technology stack and tool usage data

- Company financial health indicators (e.g., revenue ranges $1-10M, $10-50M, etc.)

- Organizational structure and hierarchy details

- Employee count brackets (e.g., 1-50, 51-200, 201-1000)

The effectiveness of B2B data enrichment relies heavily on:

- Data accuracy and freshness through diverse data sources

- Real-time enrichment for time-sensitive data vs. batch processing for static data

- Compliance with data protection regulations

- Integration with existing CRM and sales tools

- Scalability of enrichment processes

- Cost-effectiveness and validation across multiple data providers

These fundamentals form the foundation for successful B2B data enrichment initiatives. When implemented effectively, they enable organizations to make more informed business decisions and develop targeted strategies for growth.

Let's explore the various tools and services available for data enrichment and how to select the right ones for your needs.

Data enrichment tools and services

Data enrichment tools come in various forms, each serving specific use cases and industries. Here are some of the most effective solutions:

| Tool | Category | Description | Pricing |

|---|---|---|---|

| Firecrawl | Web scraping and data collection | AI-powered web data extraction with natural language prompts. Extract structured data from websites using the Agent endpoint. | $0-100/mo |

| Bright Data | Web scraping and data collection | Enterprise-grade data collection infrastructure with proxy networks | $500+/mo |

| Scrapy | Web scraping and data collection | Open-source Python framework for custom scraping solutions. Features: Custom extraction rules, proxy rotation, scheduling | Free |

| ZoomInfo | Business intelligence platforms | Company and contact information enrichment. Integrates with: Salesforce, HubSpot, Marketo | $15k+/year |

| Clearbit | Business intelligence platforms | Real-time company and person data API. Unique feature: Logo and domain matching | $99-999/mo |

| InsideView | Business intelligence platforms | Market intelligence and company insights. Specializes in: Sales intelligence and prospecting | $500+/mo |

| Segment | Customer data platforms (CDPs) | Customer data collection and integration. Key differentiator: 300+ pre-built integrations | $120+/mo |

| Tealium | Customer data platforms (CDPs) | Real-time customer data orchestration. Unique feature: Machine learning predictions | Custom pricing |

| mParticle | Customer data platforms (CDPs) | Customer data infrastructure. Best for: Mobile app analytics | $500+/mo |

| Melissa Data | Data validation and cleansing | Address verification and standardization | $500+/mo |

| DemandTools | Data validation and cleansing | Salesforce data cleansing | $999+/year |

| Informatica Data Quality | Data validation and cleansing | Enterprise data quality management | Custom pricing |

Disclaimer: The prices of mentioned tools may vary based on your location and date of visit.

These tools provide a foundation for data enrichment, but selecting the right service requires careful evaluation of several key factors.

Choosing the right data enrichment solution

When selecting a data enrichment service, organizations must evaluate several critical factors. Data quality metrics serve as the foundation, with accuracy rates ideally exceeding 95% and response times under 200ms. Services should maintain 99.9% uptime SLAs and provide comprehensive data validation methods.

Integration capabilities determine implementation success. Services should offer well-documented REST APIs, support JSON/CSV formats, and connect with major CRM platforms. The ability to handle both real-time and batch processing (up to 100k records/hour) provides essential flexibility.

Cost evaluation requires examining API pricing tiers, volume discounts, and hidden fees. Consider vendor reputation, support quality (24/7 availability), and compliance certifications (GDPR, CCPA, SOC 2). Regular security audits and data handling practices should meet industry standards.

A Hacker News discussion on real-time enrichment APIs highlights common pain points practitioners face when evaluating tools: outdated data in existing platforms, aggressive annual pricing commitments, low data recall resulting in mostly null fields, and privacy concerns around dubious data sources. One commenter raised a key scalability concern:

If this is truly real-time and not a cached graph, how do you handle rate limiting and CAPTCHAs at scale? Even with public data, on-demand scraping usually requires massive residential proxy rotation which eats that $0.03 margin alive. — warrior44 on Hacker News

These trade-offs are worth weighing when choosing between cached-database providers and live web extraction approaches like Firecrawl.

Custom data enrichment solutions

Custom data enrichment solutions provide organizations with tailored approaches to data enhancement. Web scraping platforms like Firecrawl and Selenium enable automated extraction of public data, while custom APIs facilitate direct integration with specialized data sources. Python frameworks such as Selenium, BeautifulSoup and Pandas streamline data processing and transformation workflows. Common pitfalls include rate limiting issues, unstable selectors, and data quality inconsistencies.

These solutions often incorporate modular architectures for flexibility. Organizations can combine multiple data sources, implement custom validation rules, and design specific enrichment pipelines. Advanced features include proxy rotation for reliable scraping, rate limiting for API compliance, and parallel processing for improved performance. Key challenges include maintaining data consistency across sources and handling API deprecation gracefully.

LLMs are making custom pipelines significantly more accessible. Research on Prompt2DAG (Alidu et al., 2024) showed that a hybrid LLM approach can transform natural language descriptions into executable data enrichment workflows with a 78.5% success rate — over twice as efficient as direct prompting per successful pipeline generated. Similarly, AutoDW (Liu et al., 2024) demonstrated an end-to-end automated data wrangling solution using LLMs that generates transparent, reproducible source code for the entire preparation process, reducing the manual effort that traditionally dominates data enrichment projects.

Development of custom solutions requires careful consideration of scalability and maintenance. Teams should implement robust error handling, comprehensive logging, and automated testing. Documentation and version control ensure long-term sustainability, while modular design enables iterative improvements and feature additions. Consider trade-offs between custom development costs versus off-the-shelf solutions - custom solutions typically require 2-3x more initial investment but offer greater control and flexibility.

Firecrawl as a custom data enrichment solution

Firecrawl offers powerful AI-powered web extraction capabilities that extend well beyond basic data extraction. Here are some key features that make it an effective data enrichment solution:

- Natural language data extraction

Rather than relying on brittle HTML selectors or XPath expressions, Firecrawl allows you to describe the data you want to extract in plain English.

prompt="Find YC W24 dev tool companies and get their contact info and team size"This approach works across different website layouts and remains stable even when sites update their structure. For example, you can request "find all pricing tables that show enterprise plan features" or "extract author biographies from the bottom of articles" without specifying exact HTML locations.

Firecrawl /agent searches, navigates, and gathers even the most complex websites, finding data in hard-to-reach places and discovering information anywhere on the internet.

It accomplishes in a few minutes what would take a human many hours. /agent finds and extracts your data, wherever it lives on the web.

These capabilities are also available through the scrape_url method, which is covered in detail in our guide on using the scrape endpoint.

- Recursive website crawling

Beyond scraping individual URLs, Firecrawl can automatically discover and process entire website sections. The crawler understands site structure semantically, following relevant links while avoiding navigation menus, footers, and other non-content areas. This is especially valuable when enriching data from documentation sites, knowledge bases, or product catalogs. Learn more about the crawling process in our crawl endpoint guide.

- Multiple output formats

The same content can be extracted in different formats to suit your needs:

- Structured data for database storage (as shown in our Amazon example)

- Markdown for documentation

- Plain text for natural language processing

- HTML for web archives

- Screenshots for visual records

This flexibility eliminates the need for additional conversion steps in your data pipeline.

- Intelligent content processing

The AI-powered approach helps solve common web scraping challenges:

- Automatically detecting and extracting data from tables, lists, and other structured elements

- Understanding content relationships and hierarchies

- Handling dynamic JavaScript-rendered content

- Maintaining context across multiple pages

- Filtering out irrelevant content like ads and popups

- Integration capabilities

Firecrawl offers many integrations which means the extracted data can feed directly into modern data and AI workflows:

- Vector databases for semantic search

- Large language models for analysis

- Business intelligence tools for reporting

- Machine learning pipelines for training data

- Monitoring systems for change detection

The key advantage across all these use cases is reduced maintenance overhead. Traditional scrapers require constant updates as websites change, while semantic extraction remains stable. This makes it particularly suitable for long-running data enrichment projects where reliability and consistency are crucial.

- Parallel processing with /agent for data enrichment at scale

One of the biggest bottlenecks in data enrichment is processing speed. Enriching a dataset of 500 companies one query at a time can stretch into hours. Firecrawl's Parallel Agents solve this by letting you batch hundreds or thousands of /agent queries simultaneously.

Parallel Agents use an intelligent waterfall system. Each query first tries instant retrieval via Spark-1 Fast (at a predictable 10 credits per cell), and only upgrades to full Spark-1 Mini agent research when the fast path can't find your data. This means you get the speed of a cached lookup for common data points (company size, industry, funding stage) and the depth of full web research only when you actually need it.

Here's an example of enriching a batch of companies using the /agent endpoint:

from firecrawl import Firecrawl

from pydantic import BaseModel, Field

from typing import List, Optional

app = Firecrawl(api_key="fc-YOUR_API_KEY")

class CompanyEnrichment(BaseModel):

company_name: str = Field(description="Official company name")

industry: str = Field(description="Primary industry")

employee_count: Optional[str] = Field(None, description="Approximate employee count range")

funding_stage: Optional[str] = Field(None, description="Latest funding stage (Seed, Series A, etc.)")

headquarters: Optional[str] = Field(None, description="HQ city and country")

description: str = Field(description="One-sentence company description")

# Enrich a single company with /agent - no URL needed

result = app.agent(

prompt="Find company details for Notion including their industry, employee count, latest funding stage, headquarters, and a one-sentence description",

schema=CompanyEnrichment,

model="spark-1-mini"

)

print(result.data)For large-scale enrichment, use the Agent Playground to upload a CSV of company names and define the fields you want populated. Parallel Agents will process every row simultaneously, streaming results in real time. Green cells indicate successful enrichment, yellow means in-progress, and red flags failures. No workflow configuration required — write one prompt, hit run, and watch your dataset fill in.

This approach is particularly powerful for use cases like:

- Firmographic enrichment: Upload a list of company names, get funding stages, employee counts, and contact information populated in bulk

- Competitive research: Compare features, pricing, and positioning across hundreds of competitors in a single batch

- Product catalog extraction: Pull specs, pricing, and reviews from e-commerce sites at scale, with /agent handling pagination and product page navigation automatically

- Fire Enrich: open-source AI data enrichment

For teams that want full control over their enrichment pipeline, Firecrawl also offers Fire Enrich, an open-source data enrichment tool. Upload a CSV of email addresses, select the fields you want (company description, industry, employee count, funding stage), and Fire Enrich populates your table using specialized AI agents — a Company Research Agent, a Fundraising Intelligence Agent, a People & Leadership Agent, and a Product & Technology Agent.

Unlike services that rely on static databases, Fire Enrich researches each company fresh, providing source URLs for every data point. When self-hosted, the only cost is API usage (typically $0.01-0.05 per enrichment), making it a practical alternative to platforms like Clay ($149/month) or Apollo ($59/user/month) for teams with moderate enrichment needs.

For a complete example of data enrichment process in Firecrawl, you can read a later section on Custom Data Enrichment in Python: Amazon Data Case Study.

Product data enrichment solutions

Product data enrichment solutions transform basic product information into comprehensive, market-ready datasets. These tools excel at automated attribute extraction, leveraging advanced technologies to identify and categorize product features like dimensions, materials, and industry certifications. Image recognition capabilities enhance product listings with accurate visual data, while competitive pricing analysis ensures market alignment with 95%+ accuracy rates.

Modern product enrichment platforms support bulk processing across multiple languages at speeds of 50,000+ products per hour, making them ideal for global operations. They often incorporate industry-specific taxonomies to maintain standardization and enable rich media enhancement for improved product presentation. Key integration capabilities include seamless connections with Shopify, WooCommerce, and other major e-commerce platforms, along with built-in data validation methods for ensuring attribute accuracy and completeness.

Customer data enrichment solutions

Customer data enrichment platforms serve as central hubs for creating comprehensive customer profiles, achieving match rates of 85-95% for B2B data and 70-85% for B2C data. At their core, these platforms excel at identity resolution, connecting disparate data points to form unified customer views. They incorporate behavioral analytics to understand customer actions and automatically append demographic information to existing profiles, with leading platforms like Clearbit and ZoomInfo offering 98%+ accuracy rates.

Integration features form the backbone of these platforms, with real-time API access enabling immediate data updates at speeds of 10-20ms per record. Webhook support facilitates automated workflows, while custom field mapping ensures compatibility with existing systems. Sophisticated data synchronization maintains consistency across all connected platforms, with enterprise solutions like FullContact processing up to 1M records per day.

Security and compliance remain paramount in customer data enrichment. Modern platforms incorporate robust GDPR compliance measures and granular data privacy controls, typically costing $0.05-0.15 per enriched record at scale. They maintain detailed audit trails and provide comprehensive consent management systems to protect both organizations and their customers.

The key to successful data enrichment lies in selecting tools that align with your specific use cases while maintaining data quality and compliance standards. Best practices include refreshing enriched data every 3-6 months and implementing data quality monitoring. Organizations should start with a pilot program using one or two tools before scaling to a full implementation, with typical ROI ranging from 3-5x for marketing use cases and 5-8x for sales applications.

On the research front, Tencent's SiriusBI system (Jiang et al., 2025) — deployed across finance, advertising, and cloud divisions — demonstrates what's possible when enrichment is integrated into business intelligence workflows. Their knowledge management module, which uses hybrid storage to enrich SQL generation with business context, achieves 93-97% accuracy across divisions and reduced average task completion time by 30.2%.

B2B data enrichment: A complete guide

B2B data enrichment has become increasingly critical for organizations seeking to enhance their business intelligence and decision-making capabilities. The shift is visible across practitioner communities: on r/b2bmarketing, sales teams report that fewer tools with deeper integrations outperform sprawling stacks, and that actionable data enrichment makes the real difference in pipeline quality.

One major trend reshaping B2B enrichment in 2025-2026 is waterfall enrichment — querying multiple data providers in sequence until finding the desired information. According to a 2026 comparison of B2B enrichment providers, multi-source platforms deliver 30-40 points higher match rates than single providers, pushing coverage from 50-60% to 85-95%.

A Hacker News discussion on GTM data tools surfaced an important nuance about how enrichment tools should be used:

Tools like Clay and Apollo are often misused for spammy cold outreach — which rarely works. The real value lies in enriching leads who've already shown interest, helping align marketing efforts with the right prospects. — constantinum on Hacker News

Let's explore the key tools and implementation approaches.

B2B data enrichment tools

Enterprise solutions form the backbone of large-scale B2B data operations, offering comprehensive coverage and high accuracy. These platforms excel at providing deep company insights and market intelligence:

- Enterprise solutions

- ZoomInfo: Industry leader with 95%+ accuracy for company data

- D&B Hoovers: Comprehensive business intelligence with global coverage

- InsideView: Real-time company insights and market intelligence

API-first platforms enable seamless integration into existing workflows, providing real-time data enrichment capabilities for automated systems:

- API-First platforms

- Clearbit enrichment API: Real-time company data lookup

- FullContact API: Professional contact verification

- Hunter.io: Email verification and discovery

Data validation tools ensure data quality and compliance, critical for maintaining accurate business records:

- Data Validation Tools

- Melissa Data: Address and contact verification

- Neverbounce: Email validation services

- DueDil: Company verification and compliance

Typical pricing models range from:

- Pay-per-lookup: $0.05-0.25 per record

- Monthly subscriptions: $500-5000/month

- Enterprise contracts: $50,000+ annually

Best practices for B2B data

Community discussions on r/coldemail consistently emphasize that accuracy in 2026 isn't about relying on a single tool — it's about stacking signals from multiple providers and verifying data at each step. A common practitioner stack combines Apollo or ZoomInfo for scale, Clay for enrichment orchestration, and dedicated verification tools for email validation.

- Data quality management

Regular validation and monitoring are essential for maintaining high-quality B2B data. Implement email format validation using standard regex patterns and schedule bi-monthly data refreshes. Track match rates with a target of >85% accuracy through systematic sampling. As practitioners in the HubSpot community point out, many enrichment platforms give you the same stale company records — real-time enrichment from the actual web (rather than cached databases) is increasingly what teams need.

- Integration strategy

Build a robust integration framework using standardized JSON for API communications. Critical components include:

- Real-time alert systems for failed enrichments

- Retry logic with exponential backoff

- Comprehensive request logging

- Cost monitoring and caching mechanisms

- Compliance and security

Ensure compliance with data protection regulations through proper documentation and security measures. Implement TLS 1.3 for data transfer and AES-256 for storage, while maintaining regular security audits and access reviews.

- Data Scalability

Design systems to handle large-scale data processing efficiently. Focus on data partitioning for datasets exceeding 1M records and implement auto-scaling capabilities to manage processing spikes. Monitor key performance metrics to maintain system health.

- ROI Measurement

Track the business impact of your enrichment efforts by measuring:

- Cost vs. revenue impact

- Conversion rate improvements

- Lead qualification efficiency

- Sales cycle optimization

Key Performance Indicators:

- Match rate: >85%

- Data accuracy: >95%

- Enrichment coverage: >90%

- Time to value: <48 hours

Let's put these best practices into action by exploring a real-world example of data enrichment.

Custom data enrichment in Python: Amazon data case study

Let's explore a practical example of data enrichment using Python with Amazon listings data from 2020. This Kaggle-hosted dataset provides an excellent opportunity to demonstrate how to enrich outdated product information.

Exploring the Amazon listings dataset

First, we should download the dataset stored as a ZIP file on Kaggle servers. These commands pull that zip file and extract the CSV file inside in your current working directory:

# Download the zip file

curl -L -o amazon-product-dataset-2020.zip\

https://www.kaggle.com/api/v1/datasets/download/promptcloud/amazon-product-dataset-2020

unzip amazon-product-dataset-2020.zip

# Move the desired file and rename it

mv home/sdf/marketing_sample_for_amazon_com-ecommerce__20200101_20200131__10k_data.csv amazon_listings.csv

# Delete unnecessary files

rm -rf home amazon-product-dataset-2020.zipNext, let's install a few dependencies we are going to use:

pip install pandas firecrawl-py pydantic python-dotenvNow, we can load the dataset with Pandas to explore it:

import pandas as pd

df = pd.read_csv("amazon_listings.csv")

df.shape(10002, 28)There are 10k products with 28 attributes:

df.columnsIndex(

[

'Uniq Id', 'Product Name', 'Brand Name', 'Asin', 'Category',

'Upc Ean Code', 'List Price', 'Selling Price', 'Quantity',

'Model Number', 'About Product', 'Product Specification',

'Technical Details', 'Shipping Weight', 'Product Dimensions', 'Image',

'Variants', 'Sku', 'Product Url', 'Stock', 'Product Details',

'Dimensions', 'Color', 'Ingredients', 'Direction To Use',

'Is Amazon Seller', 'Size Quantity Variant', 'Product Description'

], dtype='object'

)For ecommerce listing datasets, missing values are the main problem. Let's see if that's true of this one:

null_percentages = df.isnull().sum() / df.shape[0]

null_percentages.sort_values(ascending=False)Product Description 1.000000

Sku 1.000000

Brand Name 1.000000

Asin 1.000000

Size Quantity Variant 1.000000

List Price 1.000000

Direction To Use 1.000000

Quantity 1.000000

Ingredients 1.000000

Color 1.000000

Dimensions 1.000000

Product Details 1.000000

Stock 1.000000

Upc Ean Code 0.996601

Product Dimensions 0.952110

Variants 0.752250

Model Number 0.177165

Product Specification 0.163167

Shipping Weight 0.113777

Category 0.082983

Technical Details 0.078984

About Product 0.027295

Selling Price 0.010698

Image 0.000000

Product Name 0.000000

Product Url 0.000000

Is Amazon Seller 0.000000

Uniq Id 0.000000

dtype: float64Based on the output, we can see that almost 15 attributes are missing for all products while some have partially incomplete data. Only five attributes are fully present:

- Unique ID

- Is Amazon Seller

- Product URL

- Product Name

- Image

Even when details are present, we can't count on them since they were recorded in 2020 and have probably changed. So, our goal is to enrich this data with updated information as well as filling in the missing columns as best as possible.

Enriching Amazon listings data with Firecrawl

Now that we've explored the dataset and identified missing information, let's use Firecrawl to enrich our Amazon product data. We'll create a schema that defines what information we want to extract from each product URL.

First, let's import the required libraries and initialize Firecrawl:

from firecrawl import FirecrawlApp

from dotenv import load_dotenv

from pydantic import BaseModel, Field

load_dotenv()

app = FirecrawlApp()Next, we'll define a Pydantic model that describes the product information we want to extract. This schema helps Firecrawl's AI understand what data to look for on each product page:

class Product(BaseModel):

name: str = Field(description="The name of the product")

image: str = Field(description="The URL of the product image")

brand: str = Field(description="The seller or brand of the product")

category: str = Field(description="The category of the product")

price: float = Field(description="The current price of the product")

rating: float = Field(description="The rating of the product")

reviews: int = Field(description="The number of reviews of the product")

description: str = Field(description="The description of the product written below its price.")

dimensions: str = Field(description="The dimensions of the product written below its technical details.")

weight: str = Field(description="The weight of the product written below its technical details.")

in_stock: bool = Field(description="Whether the product is in stock")For demonstration purposes, let's take the last 100 product URLs from our dataset and enrich them:

# Get last 100 URLs

urls = df['Product Url'].tolist()[-100:]

# Start batch scraping job

batch_scrape_data = app.batch_scrape_urls(urls, params={

"formats": ["extract"],

"extract": {"schema": Product.model_json_schema()}

})Here are the results of the batch-scraping job:

# Separate successful and failed scrapes

failed_items = [

item for item in batch_scrape_data["data"] if item["metadata"]["statusCode"] != 200

]

success_items = [

item for item in batch_scrape_data["data"] if item["metadata"]["statusCode"] == 200

]

print(f"Successfully scraped: {len(success_items)} products")

print(f"Failed to scrape: {len(failed_items)} products")Successfully scraped: 84 products

Failed to scrape: 16 productsLet's examine a successful enrichment result:

{

'extract': {

'name': 'Leffler Home Kids Chair, Red',

'brand': 'Leffler Home',

'image': 'https://m.media-amazon.com/images/I/71IcFQJ8n4L._AC_SX355_.jpg',

'price': 0,

'rating': 0,

'weight': '26 Pounds',

'reviews': 0,

'category': "Kids' Furniture",

'in_stock': False,

'dimensions': '20"D x 24"W x 21"H',

'description': 'Every child deserves their own all mine chair...'

},

'metadata': {

'url': 'https://www.amazon.com/Leffler-Home-14000-21-63-01-Kids-Chair/dp/B0784BXPC2',

'statusCode': 200,

# ... other metadata

}

}A few observations about the enrichment results:

-

Success Rate: Out of 100 URLs, we successfully enriched 84 products. The 16 failures were mostly due to products no longer being available on Amazon (404 errors).

-

Data Quality: For successful scrapes, we obtained all desired details, including:

- Complete product dimensions and weights

- Updated category information

- Current availability status

- High-resolution image URLs

- Detailed product descriptions

- Missing Values: Some numeric fields (price, rating, reviews) returned 0 for unavailable products, which we should handle in our data cleaning step.

For large-scale enrichment, Firecrawl also offers an asynchronous API that can handle thousands of URLs:

# Start async batch job

batch_job = app.async_batch_scrape_urls(

urls,

params={"formats": ["extract"], "extract": {"schema": Product.model_json_schema()}},

)

# Check job status until it finishes

job_status = app.check_batch_scrape_status(batch_job["id"])This approach is particularly useful when enriching the entire dataset of 10,000+ products, as it allows you to monitor progress and handle results in chunks rather than waiting for all URLs to complete.

Alternatively, you can use the /agent endpoint to perform your data enrichment tasks in mere minutes. Check it out in this video:

Read our detailed docs on the /agent endpoint to get started.

Conclusion

Data enrichment has become essential for organizations seeking to maintain competitive advantage through high-quality, comprehensive datasets. Through this guide, we've explored how modern tools and techniques can streamline the enrichment process.

The landscape is evolving fast. GTM planning data for 2026 shows that 44% of seller interactions never make it into CRM, and 26% of those missing contacts are decision-makers. Companies with complete data achieve 10% higher forecast accuracy and stronger AI outcomes. The takeaway is clear: automated data enrichment is no longer optional.

Key takeaways

- Start with clear data quality objectives and metrics

- Use waterfall enrichment across multiple providers to maximize match rates (80-93% vs 30-60% from single providers)

- Choose enrichment tools based on your specific use case and scale — consider Firecrawl's /agent with parallel processing for web-based enrichment at scale

- Implement proper validation and error handling

- Consider both batch and real-time enrichment approaches

- Maintain data freshness through regular updates (every 3 months for high-value leads, 6-12 months for others)

Implementation steps

- Audit your current data to identify gaps

- Select appropriate enrichment sources and tools

- Build a scalable enrichment pipeline — LLM-powered tools like Prompt2DAG can help automate pipeline generation from natural language descriptions

- Implement quality monitoring

- Schedule regular data refresh cycles

Additional resources

- Firecrawl Documentation - Official documentation for implementing data enrichment with Firecrawl

- Web Scraping Ethics Guide - Best practices for ethical web scraping

- Clearbit API Documentation - Detailed guides for company and person data enrichment

- FullContact Developer Portal - Resources for identity resolution and customer data enrichment

- ZoomInfo API Reference - Documentation for B2B data enrichment

- Modern Data Stack Blog - Latest trends and best practices in data engineering

- dbt Developer Blog - Insights on data transformation and modeling

By following these guidelines and leveraging modern tools, organizations can build robust data enrichment pipelines that provide lasting value for their data-driven initiatives.

Frequently Asked Questions

What is data enrichment and why does it matter?

Data enrichment enhances existing datasets by adding valuable information from multiple sources, transforming basic data into actionable insights. It's crucial for improving customer profiles, marketing campaigns, and business decisions while maintaining competitive advantage.

How does Firecrawl differ from traditional web scraping tools?

Firecrawl uses AI-powered extraction with natural language descriptions instead of brittle HTML selectors. This means scrapers remain stable when websites update, reducing maintenance overhead and enabling semantic understanding of content across different layouts.

What are the main types of data enrichment services?

The six main categories are: demographic (age, income, education), behavioral (purchase patterns, interactions), company (firmographics, tech stack), psychographic (values, interests), geographic (location intelligence), and contact data (emails, social profiles).

How much does data enrichment typically cost?

Costs vary significantly by solution: pay-per-lookup ranges from $0.05-0.25 per record, monthly subscriptions run $500-5,000, and enterprise contracts start at $50,000+ annually. Firecrawl offers flexible pricing from $0-100/month for web scraping needs.

What's the difference between B2B and B2C data enrichment?

B2B enrichment focuses on company information, decision-makers, and firmographics with 85-95% match rates. B2C targets individual customer demographics and behavior with 70-85% match rates. Both require different tools and strategies.

How often should enriched data be refreshed?

Best practice recommends refreshing enriched data every 3-6 months to maintain accuracy. However, rapidly changing information like contact details, pricing, or availability status may require real-time or monthly updates for optimal data quality.

Can Firecrawl handle large-scale data enrichment projects?

Yes. Firecrawl supports both synchronous batch scraping and asynchronous API calls for large-scale projects. The /agent endpoint with parallel processing can batch hundreds or thousands of queries simultaneously, making it ideal for enriching datasets at scale.

What are common challenges in implementing data enrichment?

Key challenges include maintaining data quality across sources (target >95% accuracy), handling API rate limits, managing costs at scale, ensuring GDPR/CCPA compliance, dealing with website structure changes, and integrating enriched data with existing systems.

data from the web