Getting Started with Modern Web Scraping: An Introduction

Traditional web scraping runs into several challenges. Relevant information is often scattered across multiple pages containing complex elements like code blocks, iframes, and media. JavaScript-heavy websites and authentication requirements add further complexity. Modern AI agents and applications need a reliable web scraping API that can handle these challenges automatically.

Even after successfully scraping, the content needs to be transformed into a specific format to be useful for downstream processes, like data engineering or training AI and machine learning models. This is where specialized AI scraping tools become essential for teams building intelligent applications.

Firecrawl helps teams solve these problems by providing a specialized web scraping API designed for AI. Its /scrape endpoint offers features like JavaScript rendering, automatic content extraction, blocker bypassing, and flexible output formats that make it easier to collect high-quality information and training data at scale. Whether you're building scrapers for agents or collecting data for AI training, Firecrawl handles the complexity of modern web extraction.

In this guide, we'll learn how to use Firecrawl's /scrape endpoint to extract structured data from static and dynamic websites. First, we'll start with basic scraping setup and then dive into a real-world example of scraping form data from dynamic sites, showing how to handle JavaScript-based interactions, extract structured data using schemas, and capture screenshots during the scraping process. For website-wide data collection, you might also want to explore generating sitemaps with the /map endpoint to understand a site's structure before scraping.

Table of Contents

- Getting Started with Modern Web Scraping: An Introduction

- What Is Firecrawl's /scrape Endpoint? The Short Answer

- Prerequisites: Setting Up Firecrawl

- Basic Scraping Setup

- Advanced Data Extraction: Structured Techniques

- Large-scale Scraping With Batch Operations

- How to Scrape Dynamic JavaScript Websites

- What's New in /scrape Endpoint v2?

- Conclusion

What Is Firecrawl's /scrape Endpoint? The Short Answer

The /scrape endpoint is Firecrawl's core web scraping API that enables automated extraction of content from any webpage. As a comprehensive web crawling API designed for modern AI applications, it handles common web scraping challenges like:

- JavaScript rendering - Executes JavaScript to capture dynamically loaded content

- Content extraction - Automatically identifies and extracts main content while filtering out noise

- Format conversion - Converts HTML to clean formats like Markdown or structured JSON for AI consumption

- Screenshot capture - Takes full or partial page screenshots during scraping

- Browser automation - Supports clicking, typing and other browser interactions

- Request handling - Uses rotating proxies and browser configuration for reliable access

The endpoint accepts a URL and configuration parameters, then returns the scraped content in your desired format. It's flexible enough for both simple static page scraping and complex dynamic site automation, making it an ideal solution for scraping for AI applications and intelligent agents that need reliable web data access.

Now that we understand what the endpoint does at a high level, let's look at how to set it up and start using it in practice.

Prerequisites: Setting Up Firecrawl

Firecrawl's scraping engine is exposed as a REST API, so you can call it with command-line tools like cURL. However, for a more comfortable experience and better flexibility and control, I recommend using one of its SDKs for Python, Node, Rust or Go. This tutorial focuses on the Python version. If you're interested in building complete AI applications with scraped data, check out our guide on building full-stack AI web apps.
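To make the "REST API" point concrete, here is a minimal sketch of what a raw request could look like using only the Python standard library. The endpoint URL is an assumption inferred from the v2 batch URL that appears later in this guide, and the bearer-token header and the `url`/`formats` body fields are likewise assumptions; the helper name `build_scrape_request` is hypothetical. The request is only built, not sent.

```python
import json
import urllib.request

# Assumed endpoint URL, inferred from the v2 batch URL shown later in this guide.
SCRAPE_URL = "https://api.firecrawl.dev/v2/scrape"


def build_scrape_request(api_key: str, url: str, formats: list[str]) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /scrape endpoint."""
    payload = json.dumps({"url": url, "formats": formats}).encode("utf-8")
    return urllib.request.Request(
        SCRAPE_URL,
        data=payload,
        headers={
            # Assumed auth scheme: API key passed as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_scrape_request("YOUR_API_KEY", "https://arxiv.org", ["markdown"])
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

The SDK wraps this kind of call for you, which is why the rest of the tutorial uses it instead.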

To get started, please make sure to:

- Sign up at firecrawl.dev.

- Choose a plan (the free one will work fine for this tutorial).

Once you sign up, you will be given an API token which you can copy from your dashboard. The best way to save your key is by using a .env file, ideal for the purposes of this article:

touch .env

echo "FIRECRAWL_API_KEY='YOUR_API_KEY'" >> .env

Now, let's install the Firecrawl Python SDK, python-dotenv to read .env files, and Pandas for data analysis later:

pip install firecrawl-py python-dotenv pandas

Basic Scraping Setup

Scraping with Firecrawl starts by creating an instance of the Firecrawl class:

from firecrawl import Firecrawl

from dotenv import load_dotenv

load_dotenv()

app = Firecrawl()

When you use the load_dotenv() function, the app can automatically use your loaded API key to establish a connection with the scraping engine. Then, you can scrape any URL with the scrape() method:

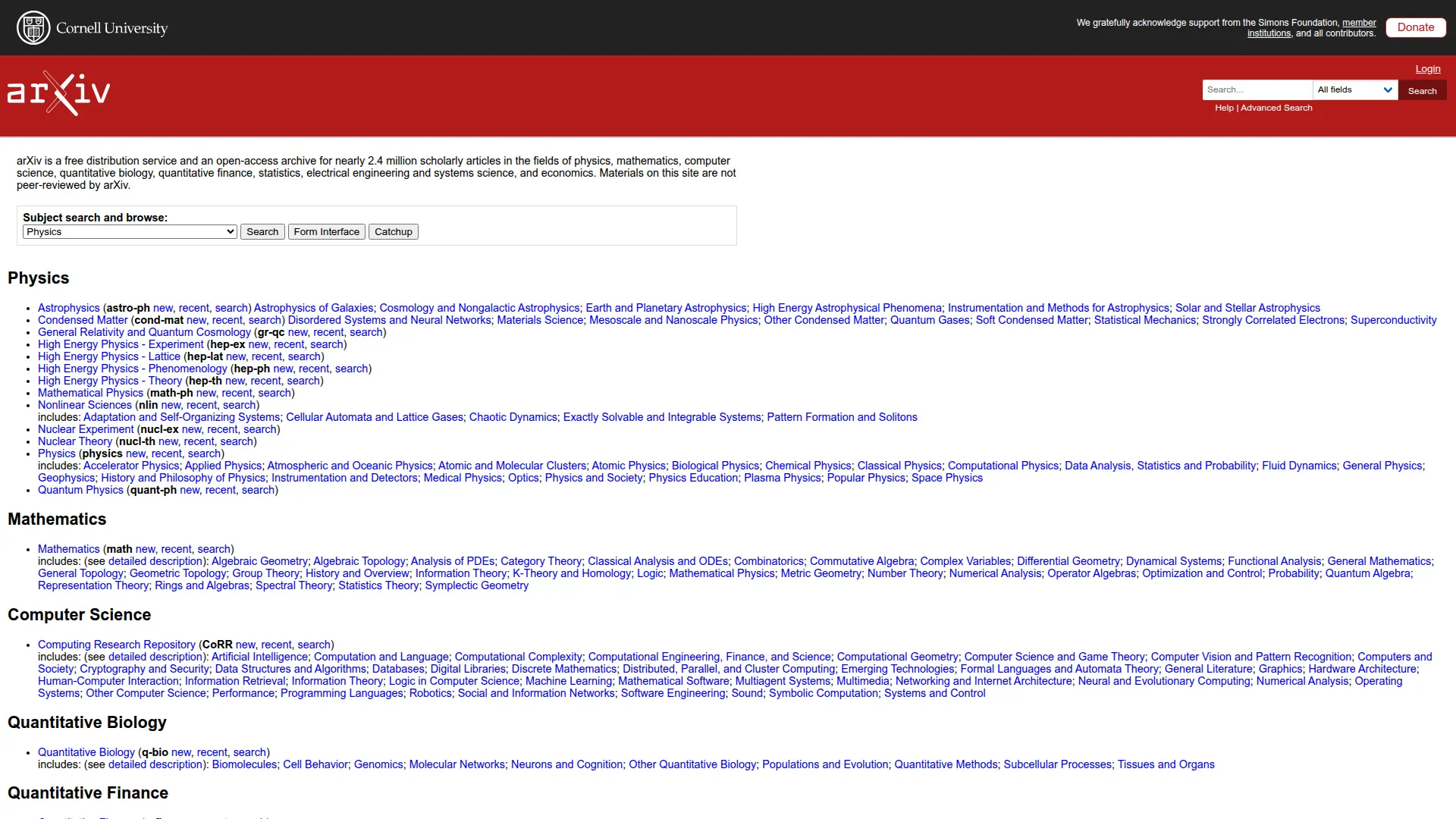

url = "https://arxiv.org"

data = app.scrape(url, formats=["markdown"])

Let's take a look at the response format returned by the scrape method:

data.metadata

{

"title": "arXiv.org e-Print archive",

"language": "en",

"source_url": "<https://arxiv.org>",

"url": "<https://arxiv.org/>",

"status_code": 200,

"content_type": "text/html; charset=utf-8",

"cache_state": "hit",

"credits_used": 1

}

The response metadata includes comprehensive information like the page title, content type, cache status, and status code.

Now, let's look at the scraped content, which is converted into markdown by default:

from IPython.display import Markdown

Markdown(data.markdown[:500])

arXiv is a free distribution service and an open-access archive for nearly 2.4 million

scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics.

Materials on this site are not peer-reviewed by arXiv.

Subject search and browse:

Physics

Mathematics

Quantitative Biology

Computer Science

Quantitative Finance

Statistics

Electrical Engineering and Systems Scienc

The response can include several other formats that we can request when scraping a URL. Let's try requesting multiple formats at once to see what additional data we can get back:

data = app.scrape(

url,

formats=[

'html',

'rawHtml',

'links',

'screenshot',

]

)

Each format serves different purposes:

- html: Processed HTML for analysis and manipulation

- rawHtml: Unprocessed HTML exactly as received from the server

- links: All hyperlinks extracted from the page

- screenshot: Visual capture of the page as rendered in a browser

Choose rawHtml for archival or comparison tasks, links for SEO analysis, and screenshot for visual documentation.
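As an illustration of the link-analysis use case, the sketch below counts links per hostname. It assumes the `links` attribute of the response is a plain list of URL strings; the sample list and the helper name `count_links_by_domain` are hypothetical stand-ins so the snippet runs without an API call.

```python
from collections import Counter
from urllib.parse import urlparse


def count_links_by_domain(links: list[str]) -> Counter:
    """Count how many links point at each hostname, ignoring links without one."""
    hostnames = (urlparse(link).netloc for link in links)
    return Counter(host for host in hostnames if host)


# Hypothetical sample standing in for `data.links`
sample_links = [
    "https://arxiv.org/list/cs/new",
    "https://arxiv.org/help",
    "https://info.arxiv.org/about",
]
domain_counts = count_links_by_domain(sample_links)
print(domain_counts.most_common())
```

With a real response, you would pass `data.links` instead of `sample_links`.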

Passing more than one scraping format adds additional attributes to the response:

# Check available attributes

dir(data)

['actions', 'html', 'json', 'links', 'markdown', 'metadata', 'raw_html', 'screenshot', 'summary']

Let's display the screenshot Firecrawl took of arXiv.org:

from IPython.display import Image

Image(data.screenshot)

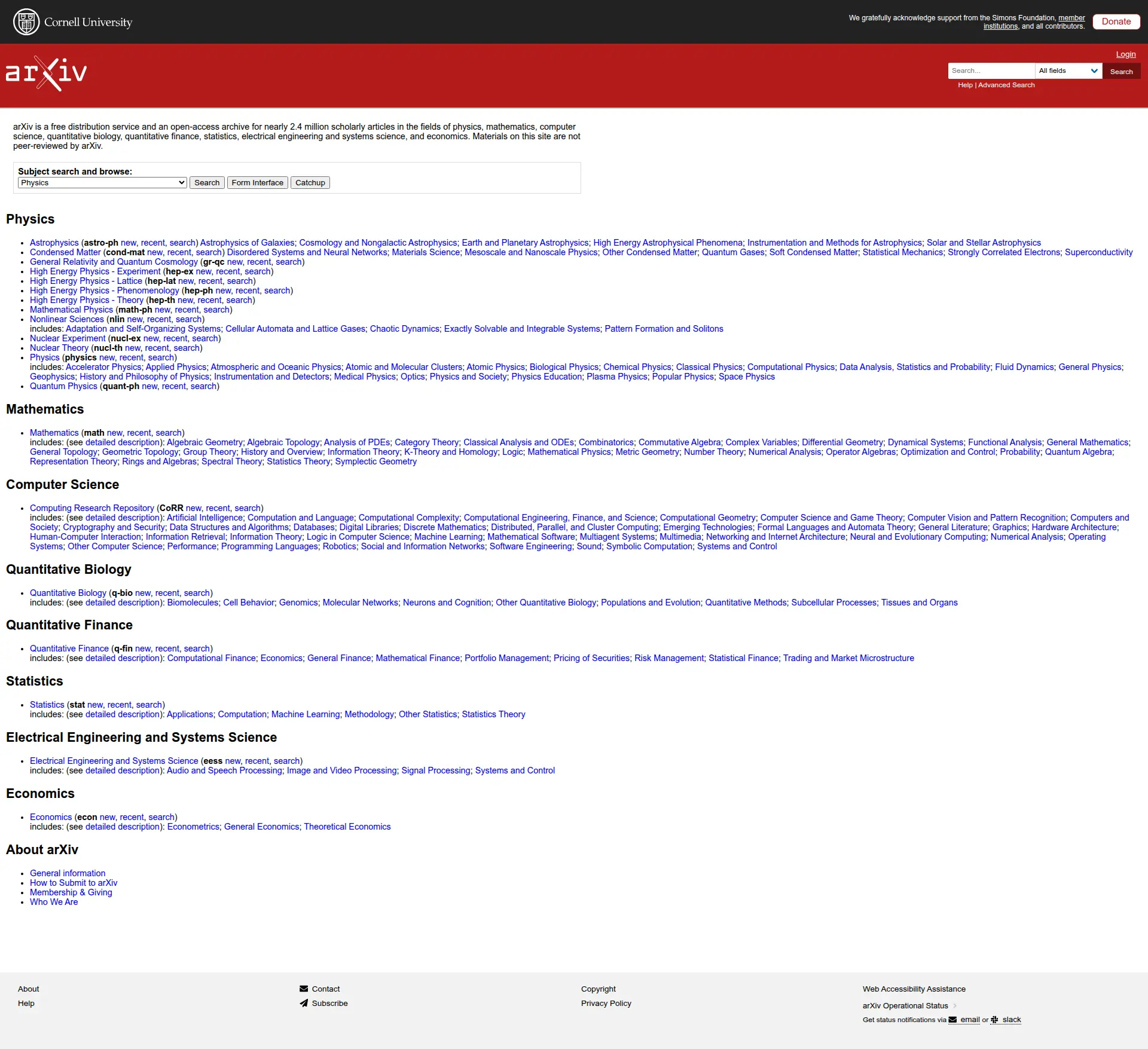

Notice how the screenshot is cropped to fit a certain viewport. For most pages, it's better to capture the entire page by using the full page screenshot option:

data = app.scrape(

url,

formats=[

{"type": "screenshot", "fullPage": True},

]

)

Image(data.screenshot)

As a bonus, the /scrape endpoint can handle PDF links as well:

pdf_link = "https://arxiv.org/pdf/2411.09833.pdf"

data = app.scrape(pdf_link, formats=["markdown"], parsers=["pdf"])

Markdown(data.markdown[:500])

arXiv:2411.09833v1 \[math.DG\] 14 Nov 2024

EINSTEIN METRICS ON THE FULL FLAG F(N).

MIKHAIL R. GUZMAN

Abstract.LetM=G/Kbe a full flag manifold. In this work, we investigate theG-

stability of Einstein metrics onMand analyze their stability types, including coindices,

for several cases. We specifically focus onF(n) = SU(n)/T, emphasizingn= 5, where

we identify four new Einstein metrics in addition to known ones. Stability data, including

coindex and Hessian spectrum, confirms that these metrics on

Further Scrape Configuration Options

By default, scrape() converts everything it sees on a webpage to one of the specified formats. To control this behavior, Firecrawl offers the following parameters:

- only_main_content

- include_tags

- exclude_tags

only_main_content excludes the navigation, footers, headers, etc. and is set to True by default.

include_tags and exclude_tags can be used to whitelist/blacklist certain HTML elements:

url = "https://arxiv.org"

data = app.scrape(url, formats=["markdown"], include_tags=["p"], exclude_tags=["span"])

Markdown(data.markdown[:1000])

[Help](https://info.arxiv.org/help) \| [Advanced Search](https://arxiv.org/search/advanced)

arXiv is a free distribution service and an open-access archive for nearly 2.4 million

scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics.

Materials on this site are not peer-reviewed by arXiv.

[arXiv Operational Status](https://status.arxiv.org)

Get status notifications via

[email](https://subscribe.sorryapp.com/24846f03/email/new)

or [slack](https://subscribe.sorryapp.com/24846f03/slack/new)

include_tags and exclude_tags also support referring to HTML elements by their #id or .class-name.

These configuration options help make scraping efficient and precise. While only_main_content filters out peripheral elements, include_tags and exclude_tags enable surgical targeting of specific HTML elements - particularly valuable when dealing with complex webpage structures or when only certain content types are needed.
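To build intuition for how a whitelist and blacklist of tags interact, here is a small conceptual sketch using Python's standard `html.parser`. It is not Firecrawl's implementation, only an illustration of the idea: text is kept when it sits inside an included tag and not inside an excluded one. The class `TagFilter`, the helper `filter_text`, and the sample HTML are all hypothetical.

```python
from html.parser import HTMLParser


class TagFilter(HTMLParser):
    """Collect text inside included tags, skipping text inside excluded tags."""

    def __init__(self, include_tags, exclude_tags):
        super().__init__()
        self.include_tags = set(include_tags)
        self.exclude_tags = set(exclude_tags)
        self.include_depth = 0  # number of included tags currently open
        self.exclude_depth = 0  # number of excluded tags currently open
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.include_tags:
            self.include_depth += 1
        if tag in self.exclude_tags:
            self.exclude_depth += 1

    def handle_endtag(self, tag):
        if tag in self.include_tags and self.include_depth > 0:
            self.include_depth -= 1
        if tag in self.exclude_tags and self.exclude_depth > 0:
            self.exclude_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.include_depth > 0 and self.exclude_depth == 0:
            self.chunks.append(text)


def filter_text(html, include_tags, exclude_tags):
    """Return the text chunks that survive the include/exclude filter."""
    parser = TagFilter(include_tags, exclude_tags)
    parser.feed(html)
    parser.close()
    return parser.chunks


sample = "<p>Keep this <span>drop this</span> and this</p><div>outside</div>"
kept = filter_text(sample, include_tags=["p"], exclude_tags=["span"])
print(kept)
```

Here the paragraph text is kept, the nested span is dropped, and the div is ignored because it was never whitelisted.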

Advanced Data Extraction: Structured Techniques

Scraping clean, LLM-ready data is the core philosophy of Firecrawl's AI scraping capabilities. However, web pages with complex structures can undermine this goal when scraped in their entirety. For this reason, Firecrawl offers two AI-powered scraping methods that produce better structured outputs for AI applications and agents:

- Natural language extraction - Use prompts to extract specific information and have an LLM structure the response

- Manual structured data extraction - Define JSON schemas to have an LLM scrape data in a predefined format

In this section, we will cover both methods that make Firecrawl a powerful solution for AI crawling and data extraction.

Natural Language Extraction - Use AI to Extract Data

To illustrate natural language scraping for agents and AI applications, let's try extracting information using a JSON prompt from a webpage. This approach is particularly useful when building chatbots or AI assistants - you can learn more about turning websites into chatbots with our Firestarter tool:

url = "https://example.com"

data = app.scrape(

url,

formats=[

'markdown',

'screenshot',

{"type": "json", "prompt": "Extract the main title and any links from this page"}

]

)

To enable this feature, you include a JSON object in the formats array with a type of "json" and provide a prompt within that object.

Once scraping finishes, the response will include a new json attribute:

data.json

{

"title": "Example Domain",

"links": [

{

"url": "https://www.iana.org/domains/example",

"text": "More information..."

}

]

}

Due to the nature of this scraping method, the returned output can have arbitrary structure as we can see above.

This LLM-based extraction has many applications for agents, from extracting specific data points from complex websites to analyzing sentiment across multiple news sources to gathering structured information from unstructured web content. It's a key component of modern AI search capabilities.

To improve the accuracy of the extraction and give additional instructions, you can include specific guidance directly in your prompt:

data = app.scrape(

url,

formats=[

'markdown',

{

"type": "json",

"prompt": "You are a helpful assistant that extracts domain information. Find any specific information about domains and return them with context."

}

]

)

Above, we are providing specific instructions for the LLM to act as a domain information extraction assistant. Let's look at its response:

data.json

{

"domain_information": [

{

"domain": "example.com",

"context": "This domain is for use in illustrative examples in documents",

"link": "https://www.iana.org/domains/example"

}

]

}

The output shows the LLM successfully extracted domain information from the page.

The LLM not only identified the domain but also provided relevant context and the associated documentation link.
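Because prompt-only extraction can return an arbitrary structure, downstream code often has to search the result rather than index into fixed keys. The sketch below is one defensive way to do that: it walks any nested dict/list and gathers every http(s) string. The helper name `collect_urls` is hypothetical, and the input is the first response shown in this section, copied as a plain dict.

```python
def collect_urls(value):
    """Recursively gather every http(s) string found in nested dicts and lists."""
    if isinstance(value, str):
        return [value] if value.startswith(("http://", "https://")) else []
    if isinstance(value, dict):
        return [url for item in value.values() for url in collect_urls(item)]
    if isinstance(value, list):
        return [url for item in value for url in collect_urls(item)]
    return []  # numbers, booleans, None


# The first response from this section, copied as a plain dict
response_json = {
    "title": "Example Domain",
    "links": [
        {"url": "https://www.iana.org/domains/example", "text": "More information..."}
    ],
}
urls = collect_urls(response_json)
print(urls)
```

The same helper works unchanged on the second response, even though its keys are different, which is exactly the situation a fixed schema avoids.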

Schema-Based Data Extraction - Building Structured Models

While natural language scraping is powerful for exploration and prototyping, production systems typically require more structured and deterministic approaches. LLM responses can vary between runs of the same prompt, making the output format inconsistent and difficult to reliably parse in automated workflows. For advanced extraction needs, you might want to explore our dedicated Extract endpoint which offers even more sophisticated data extraction capabilities.

For this reason, Firecrawl allows you to pass a predefined schema to guide the LLM's output when transforming the scraped content. To do this, Firecrawl uses Pydantic models.

In the example below, we will extract article information from a sample webpage using a predefined schema:

from pydantic import BaseModel, Field

class IndividualArticle(BaseModel):

    title: str = Field(description="The title of the news article")

    subtitle: str = Field(description="The subtitle of the news article")

    url: str = Field(description="The URL of the news article")

    author: str = Field(description="The author of the news article")

    date: str = Field(description="The date the news article was published")

    read_duration: int = Field(description="The estimated time it takes to read the news article")

    topics: list[str] = Field(description="A list of topics the news article is about")

class NewsArticlesSchema(BaseModel):

    news_articles: list[IndividualArticle] = Field(

        description="A list of news articles extracted from the page"

    )

Above, we define a Pydantic schema that specifies the structure of the data we want to extract. The schema consists of two models:

IndividualArticle defines the structure for individual news articles with fields for:

- title

- subtitle

- url

- author

- date

- read_duration

- topics

NewsArticlesSchema acts as a container model that holds a list of IndividualArticle objects, representing multiple articles extracted from the page. If we don't use this container model, Firecrawl will only return the first news article it finds.

Each model field uses Pydantic's Field class to provide descriptions that help guide the LLM in correctly identifying and extracting the requested data. This structured approach ensures consistent output formatting.

The next step is passing this schema to the formats parameter of scrape():

url = "https://example.com"

class SimpleContent(BaseModel):

    title: str = Field(description="The main title or heading")

    description: str = Field(description="Main content or description text")

structured_data = app.scrape(

url,

formats=[

"screenshot",

{

"type": "json",

"schema": SimpleContent,

"prompt": "Extract the title and main content from this page."

}

]

)

print(structured_data.json)

When passing a Pydantic schema, we pass the model class directly to Firecrawl. Let's look at the output:

structured_data.json

{

'title': 'Example Domain', 'description': 'This domain is for use in illustrative examples in documents. You may use this\ndomain in literature without prior coordination or asking for permission.\n\n[More information...](https://www.iana.org/domains/example)'

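}

This time, the response fields exactly match the fields we set in the SimpleContent schema. With the NewsArticlesSchema container defined earlier, the response would instead take this shape: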

{

"news_articles": [

{...}, # Article 1

{...}, # Article 2,

... # Article n

]

}

When creating the scraping schema, the following best practices can go a long way in ensuring reliable and accurate data extraction:

- Keep field names simple and descriptive

- Use clear field descriptions that guide the LLM

- Break complex data into smaller, focused fields

- Include validation rules where possible

- Consider making optional fields that may not always be present

- Test the schema with a variety of content examples

- Iterate and refine based on extraction results

To follow these best practices, the following Pydantic tips can help:

- Use Field(default=None) to make fields optional

- Add validation with Field(min_length=1, max_length=100)

- Create custom validators with the @validator decorator (@field_validator in Pydantic v2)

- Use conlist() for list fields with constraints

- Add example values with Field(example="Sample text")

- Create nested models for complex data structures

- Use computed fields with the @property decorator

As you can see, the web scraping process becomes much easier and more powerful when you combine it with structured data models that validate and organize the scraped information. This allows for consistent data formats, type checking, and easy access to derived properties, such as checking whether an article is recent.
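The sketch below pulls a few of these tips together: an optional field, length validation, and a recency check. The `Article` model and its `is_recent` helper are hypothetical and not part of the schemas used elsewhere in this guide; the recency check is written as a method taking an explicit `today` argument (rather than a property) so the example stays deterministic.

```python
from datetime import date, timedelta
from typing import Optional

from pydantic import BaseModel, Field, ValidationError


class Article(BaseModel):
    # Length constraints reject empty or overly long titles
    title: str = Field(min_length=1, max_length=100, description="The article title")
    published: date = Field(description="Publication date in ISO format")
    # Optional field: pages without a subtitle still validate
    subtitle: Optional[str] = Field(default=None, description="The subtitle, if any")

    def is_recent(self, today: date, days: int = 7) -> bool:
        """Return True if the article was published within `days` before `today`."""
        return timedelta(0) <= today - self.published <= timedelta(days=days)


# The ISO date string is parsed into a `date` during validation
article = Article(title="Example Domain", published="2024-11-14")
print(article.subtitle)
print(article.is_recent(date(2024, 11, 16)))

try:
    Article(title="", published="2024-11-14")
except ValidationError:
    print("Empty titles are rejected")
```

Validation failures surface as exceptions at the point of parsing, so malformed extractions never reach the rest of your pipeline silently.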

Large-scale Scraping With Batch Operations

Up to this point, we have been focusing on scraping pages one URL at a time. In reality, you will work with many, perhaps thousands of, URLs that need to be scraped in parallel. This is where batch operations become essential for efficient web scraping at scale. Batch operations allow you to process multiple URLs simultaneously, significantly reducing the overall time needed to collect data from multiple web pages. For comprehensive automation workflows, you can also integrate Firecrawl with n8n for web scraping workflows.

Batch Scraping with batch_scrape

The batch_scrape method lets you scrape multiple URLs at once. Let's try it with two example URLs:

# Use example URLs for demonstration

article_links = ["https://example.com", "https://httpbin.org/html"]

class ArticleSummary(BaseModel):

    title: str = Field(description="The title of the news article")

    summary: str = Field(description="A short summary of the news article")

batch_data = app.batch_scrape(article_links, formats=[

{

"type": "json",

"schema": ArticleSummary,

"prompt": "Extract the title of the news article and generate its brief summary"

}

])

Here is what is happening in the code block above:

- We define a list of example URLs to demonstrate batch processing

- We create an ArticleSummary model with title and summary fields to structure our output

- We use batch_scrape() to process all URLs in parallel, configuring it to:

  - Extract data in structured format

  - Use our ArticleSummary schema

  - Generate titles and summaries based on the page content

The response from batch_scrape() is a bit different:

# Check the batch response attributes

dir(batch_data)

['completed', 'credits_used', 'data', 'expires_at', 'next', 'status', 'total']

It contains the following key fields:

- status: Current status of the batch job ('completed', 'scraping', 'failed', etc.)

- completed: Number of URLs processed so far

- total: Total number of URLs in the batch

- credits_used: Number of API credits consumed

- expires_at: When the results will expire

- data: The extracted data for each URL

- next: URL for pagination if more results are available

Let's focus on the data attribute where the actual content is stored:

len(batch_data.data)

2

The batch processing completed successfully with 2 documents. Let's examine the structure of the first document:

# Check the first document's type and content

first_doc = batch_data.data[0]

print(f"Document type: {type(first_doc)}")

print(f"Has JSON: {hasattr(first_doc, 'json')}")

Document type: <class 'firecrawl.v2.types.Document'>

Has JSON: True

The response format here matches what we get from individual `scrape()` calls.

Let's print the generated JSON:

```python

print(batch_data.data[0].json)

```

{'title': 'Example Domain', 'summary': 'This domain, example.com, is designated for use in documentation examples and can be utilized in literature without requiring prior permission.'}

The scraping was performed according to our specifications, extracting the metadata and the title and generating a brief summary.
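Once a batch finishes, a common next step is to flatten the per-document JSON into rows. The sketch below does this with the standard `csv` module, assuming each document exposes its extracted dict through a `json` attribute as shown above. The helper name `documents_to_csv` and the `SimpleNamespace` stand-ins are hypothetical, used so the snippet runs without an API call.

```python
import csv
import io
from types import SimpleNamespace


def documents_to_csv(documents, fieldnames):
    """Write the `json` payload of each document as one CSV row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    for doc in documents:
        payload = getattr(doc, "json", None)
        if isinstance(payload, dict):  # skip documents without extracted JSON
            writer.writerow(payload)
    return buffer.getvalue()


# Hypothetical stand-ins for the items in batch_data.data
fake_docs = [
    SimpleNamespace(json={"title": "Example Domain", "summary": "A demo page."}),
    SimpleNamespace(json=None),
]
csv_text = documents_to_csv(fake_docs, ["title", "summary"])
print(csv_text)
```

With real results you would pass `batch_data.data` and the field names of your schema.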

Asynchronous batch scraping with start_batch_scrape

Scraping multiple articles in a batch typically takes a few seconds on most machines. While that's not much, in practice, we can't wait around as Firecrawl batch-scrapes thousands of URLs. For these larger workloads, Firecrawl provides an asynchronous batch scraping API that lets you submit jobs and check their status later, rather than blocking until completion. This is especially useful when integrating web scraping into automated workflows or processing large URL lists.

This feature is available through the start_batch_scrape method and it works a bit differently:

batch_scrape_job = app.start_batch_scrape(

article_links,

formats=[

{

"type": "json",

"schema": ArticleSummary,

"prompt": "Extract the title of the article and generate its brief summary"

}

]

)

When using start_batch_scrape instead of the synchronous version, the response comes back immediately rather than waiting for all URLs to be scraped. The program can continue executing while the scraping happens in the background.

batch_scrape_job

BatchScrapeResponse(

id='7c2f553a-4888-46c3-b19a-412d581b63c9',

url='https://api.firecrawl.dev/v2/batch/scrape/7c2f553a-4888-46c3-b19a-412d581b63c9',

invalid_urls=None

)

The response contains an ID belonging to the background task that was initiated to process the URLs under the hood.

You can use this ID later to check the job's status with get_batch_scrape_status method:

batch_scrape_job_status = app.get_batch_scrape_status(batch_scrape_job.id)

list(type(batch_scrape_job_status).model_fields.keys())

['completed', 'credits_used', 'data', 'expires_at', 'next', 'status', 'total']

If the job finished scraping all URLs, its status will be set to completed:

print(f"Status: {batch_scrape_job_status.status}")

print(f"Completed: {batch_scrape_job_status.completed}")

print(f"Total: {batch_scrape_job_status.total}")

print(f"Credits used: {batch_scrape_job_status.credits_used}")

Status: completed

Completed: 2

Total: 2

Credits used: 10

Let's look at how many pages were scraped:

batch_scrape_job_status.total

2

The response always includes the data attribute, whether the job is complete or not, with the content scraped up to that point. It has error and next attributes to indicate if any errors occurred during scraping and whether there are more results to fetch.
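Checking the status once is rarely enough for a long-running job, so a small polling loop is a natural companion. The sketch below is one way to write it, assuming the status object has a `status` attribute with the values listed earlier ('completed', 'scraping', 'failed'). The helper name `wait_for_batch`, its parameters, and the fake status function are hypothetical; the fake stands in for `app.get_batch_scrape_status` so the snippet runs offline.

```python
import time
from types import SimpleNamespace


def wait_for_batch(get_status, job_id, poll_seconds=2.0, timeout_seconds=300.0):
    """Poll `get_status(job_id)` until the job completes, fails, or times out."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status(job_id)
        if status.status == "completed":
            return status
        if status.status == "failed":
            raise RuntimeError(f"Batch job {job_id} failed")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Batch job {job_id} did not finish in time")
        time.sleep(poll_seconds)


# Hypothetical stand-in for app.get_batch_scrape_status: scraping twice, then done
_responses = iter(["scraping", "scraping", "completed"])


def fake_get_status(job_id):
    return SimpleNamespace(status=next(_responses), completed=2, total=2)


final_status = wait_for_batch(fake_get_status, "demo-job", poll_seconds=0.01)
print(final_status.status)
```

In real use, the call would look like `wait_for_batch(app.get_batch_scrape_status, batch_scrape_job.id)`.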

How to Scrape Dynamic JavaScript Websites

Out in the wild, many websites you encounter will be dynamic, meaning their content is generated on-the-fly using JavaScript rather than being pre-rendered on the server. These sites often require user interaction like clicking buttons or typing into forms before displaying their full content. Traditional web scrapers that only look at the initial HTML fail to capture this dynamic content, which is why browser automation capabilities are essential for thorough web scraping.

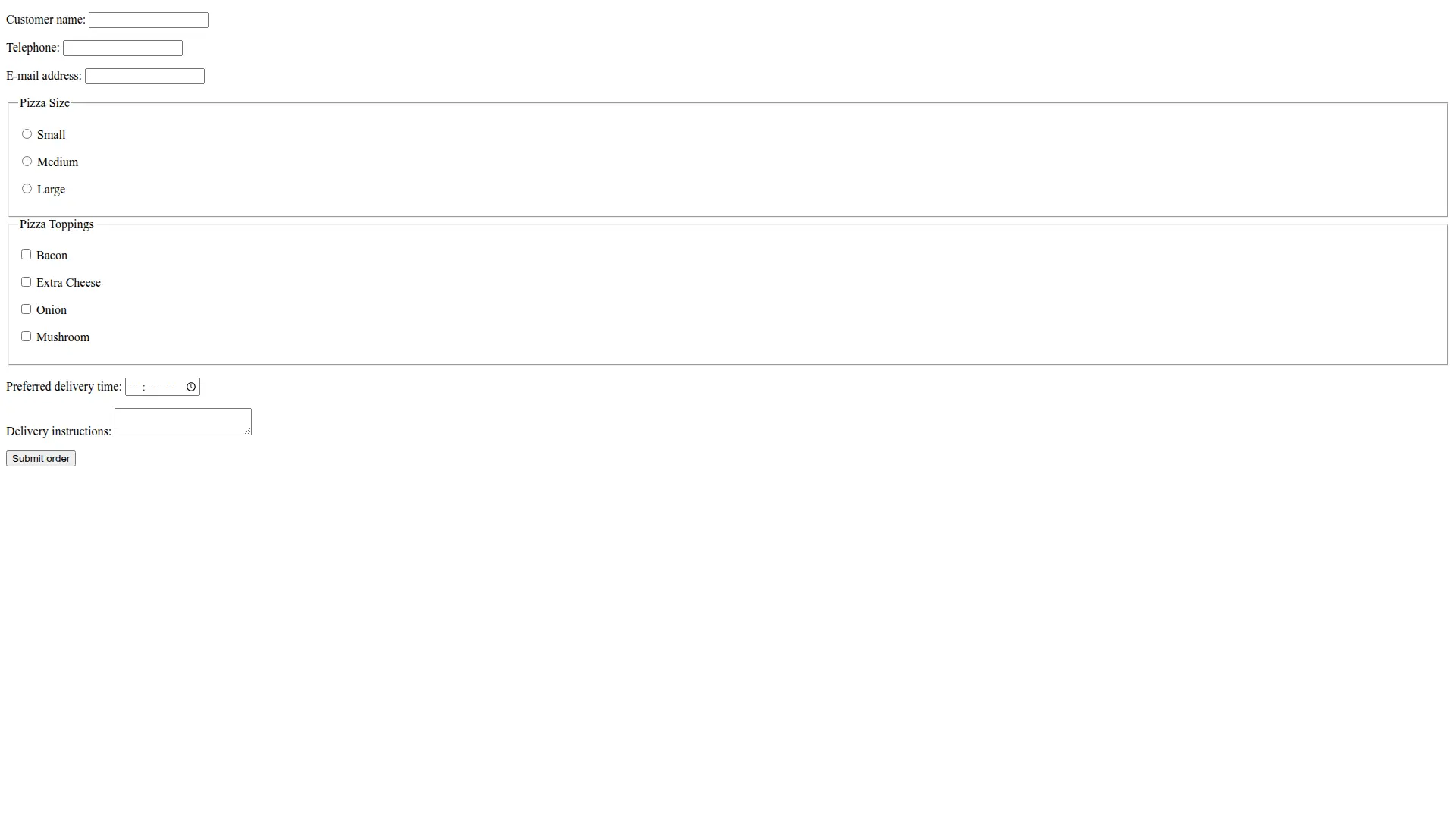

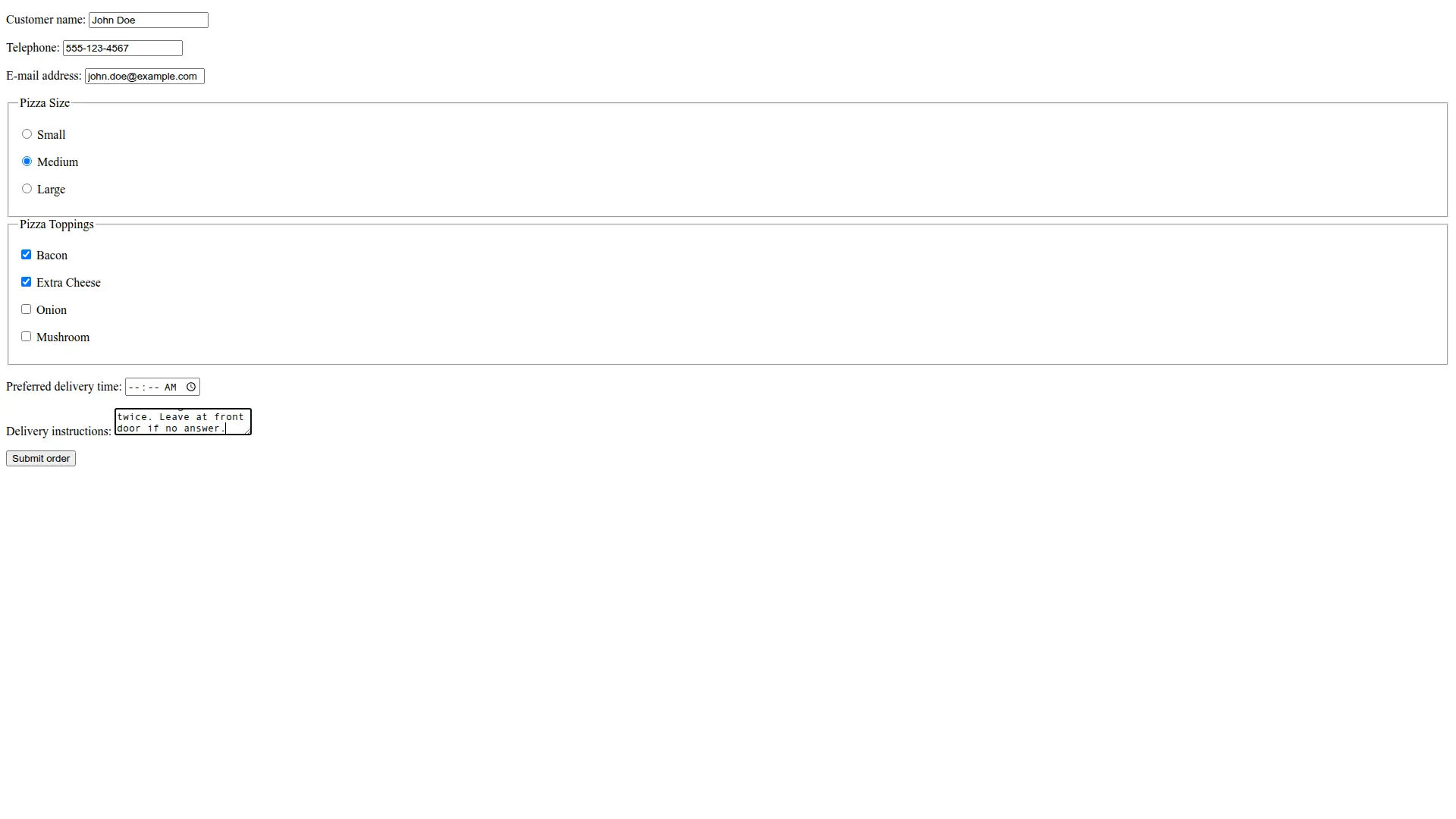

Firecrawl supports dynamic scraping by default. In the parameters of scrape() or batch_scrape(), you can define necessary actions to reach the target state of the page you are scraping. As an example, we will demonstrate actions functionality using a form-based website: httpbin.org/forms/post

Many modern websites require user interactions before displaying their full content. Unlike static websites where you can directly access content via URLs, dynamic sites often require you to:

- Click buttons to reveal content

- Fill out forms to access data

- Wait for JavaScript to load dynamic elements

- Navigate through multiple steps to reach the target information

This multi-step interaction process is where Firecrawl's actions feature becomes essential.

Fortunately, Firecrawl natively supports such interactions through the actions parameter. It accepts a list of dictionaries, where each dictionary represents one of the following interactions:

- Waiting for the page to load

- Clicking on an element

- Writing text in input fields

- Scrolling up/down

- Taking a screenshot of the current state

- Scraping the current state of the webpage

Let's define comprehensive actions for interacting with multiple form elements:

actions = [

{"type": "wait", "milliseconds": 2000},

# Fill customer name

{"type": "click", "selector": 'input[name="custname"]'},

{"type": "write", "text": "John Doe"},

# Fill telephone

{"type": "click", "selector": 'input[name="custtel"]'},

{"type": "write", "text": "555-123-4567"},

# Fill email

{"type": "click", "selector": 'input[name="custemail"]'},

{"type": "write", "text": "john.doe@example.com"},

# Select pizza size (Medium)

{"type": "click", "selector": 'input[name="size"][value="medium"]'},

# Select some toppings

{"type": "click", "selector": 'input[name="topping"][value="bacon"]'},

{"type": "click", "selector": 'input[name="topping"][value="cheese"]'},

# Fill delivery time

{"type": "click", "selector": 'input[name="delivery"]'},

{"type": "write", "text": "18:00"},

# Fill delivery instructions

{"type": "click", "selector": 'textarea[name="comments"]'},

{"type": "write", "text": "Please ring doorbell twice. Leave at front door if no answer."},

{"type": "wait", "milliseconds": 1000},

{"type": "screenshot"},

{"type": "wait", "milliseconds": 500},

]

This comprehensive sequence demonstrates multiple types of form interactions:

- Text inputs: Fill customer name, phone, and email fields

- Radio buttons: Select pizza size using input[name="size"][value="medium"]

- Checkboxes: Select multiple toppings with specific values

- Time inputs: Set delivery time to 18:00

- Textareas: Add detailed delivery instructions

Let's look at how we choose the selectors, since this is the most technical aspect of the actions. Using browser developer tools, we inspect the webpage elements to find the appropriate selectors. Each form element has specific attributes like name="custname" for the customer name input, or name="size" value="medium" for radio buttons. We also include short waits at the start and near the end of the sequence, so the page has loaded before the first interaction and the form is fully filled before the screenshot is taken.

Note that when working with different websites, the specific attribute names will vary - you'll need to use browser developer tools to inspect the webpage and find the correct selectors for your target site.
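Since every text field follows the same click-then-write pattern, the action list can be generated from a mapping of selectors to values. The sketch below shows one way to do that; the helper names `fill_field` and `build_form_actions` are hypothetical, while the action dictionaries use the same keys as the list defined above.

```python
def fill_field(selector: str, text: str) -> list[dict]:
    """Return the click + write pair used to fill one input."""
    return [
        {"type": "click", "selector": selector},
        {"type": "write", "text": text},
    ]


def build_form_actions(fields: dict[str, str], initial_wait_ms: int = 2000) -> list[dict]:
    """Wait for the page, fill each field in order, then take a screenshot."""
    actions = [{"type": "wait", "milliseconds": initial_wait_ms}]
    for selector, text in fields.items():
        actions.extend(fill_field(selector, text))
    actions.append({"type": "screenshot"})
    return actions


form_actions = build_form_actions({
    'input[name="custname"]': "John Doe",
    'input[name="custtel"]': "555-123-4567",
})
print(len(form_actions))
```

Radio buttons and checkboxes only need a click, so they can be appended to the resulting list as plain click actions.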

Now, let's define a Pydantic schema to extract form data:

class FormData(BaseModel):

    form_title: str = Field(description="The title of the form")

    input_fields: list[str] = Field(description="List of input field names found in the form")

    action_url: str = Field(description="The form's action URL")

Let's first take a screenshot of the form before any interactions to see the initial state:

Finally, let's pass these objects to scrape() with our comprehensive actions:

url = "https://httpbin.org/forms/post"

data = app.scrape(

url,

formats=[

"screenshot",

"markdown",

{

"type": "json",

"schema": FormData,

"prompt": "Extract form information including title, input fields, and action URL"

}

],

actions=actions

)

The scraping only happens once all actions are performed. Let's see if it was successful by looking at the json attribute:

data.json

{

'form_title': 'Pizza Order Form',

'input_fields': ['Customer name', 'Telephone', 'E-mail address', 'Pizza Size', 'Pizza Toppings', 'Preferred delivery time', 'Delivery instructions'],

'action_url': 'Submit order'

}

All details are accounted for! Next, let's take a closer look at the response structure when using JS-based actions:

# Check available attributes in the response

dir(data)

['actions', 'html', 'json', 'links', 'markdown', 'metadata', 'raw_html', 'screenshot', 'summary']

The response has a new actions attribute:

data.actions

{

'screenshots': ['https://service.firecrawl.dev/storage/v1/object/public/media/screenshot-31e35220-0df7-454d-a6ec-e6b39ad078a4.png'],

'scrapes': [],

'javascriptReturns': [],

'pdfs': []

}

The actions array contained a single screenshot-generating action, which is reflected in the output above.

Let's look at the screenshot:

from IPython.display import Image

Image(data.actions.screenshots[0])

The image shows the form after all automated interactions have been completed. All text fields have been filled, the medium pizza size is selected, bacon and cheese toppings are checked, delivery time is set, and detailed instructions have been added to the text area.

This demonstrates how Firecrawl's actions feature can automate complex interactions with dynamic websites, making it possible to scrape content that requires user input or multi-step processes. The combination of browser automation and structured data extraction makes Firecrawl a powerful tool for handling even the most complex web scraping tasks.

What's New in /scrape Endpoint v2?

Firecrawl v2 introduces several powerful new features that make web scraping more efficient, reliable, and cost-effective. These enhancements establish Firecrawl as a leading web search API and AI crawling solution. Let's explore the key enhancements that weren't available in v1.

Summary Format - Get Concise Page Overviews

One of the most useful additions to v2 is the built-in summary format, which provides concise page content summaries without the need for additional LLM processing. This feature is particularly valuable for AI agents that need quick content understanding.

from firecrawl import Firecrawl

from dotenv import load_dotenv

load_dotenv()

app = Firecrawl()

url = "https://techcrunch.com"

# Get both summary and full markdown for comparison

data = app.scrape(

url,

formats=["markdown", "summary"]

)

print(f"Full content length: {len(data.markdown)} characters")

print(f"Summary length: {len(data.summary)} characters")

print(f"\nSummary content:")

print(data.summary)

Full content length: 39022 characters

Summary length: 1127 characters

Summary content:

The TechCrunch page features several key articles and updates in technology and business. Notable highlights include: 1. **Chatbot Design and AI Delusions** - An article discussing how design choices in chatbots contribute to misconceptions about AI capabilities. 2. **Security Flaw in TheTruthSpy** - Coverage of a vulnerability in a spy app that exposed sensitive user data. 3. **Startup Investment Trends** - Analysis of current venture capital and startup funding patterns in the tech industry.

The summary format is especially valuable for content monitoring, news aggregation, and when you need quick insights without processing large amounts of text. It reduces bandwidth usage and greatly speeds up downstream processing.

Advanced Caching with max_age - Control Content Freshness

Caching temporarily stores scraped webpage content to avoid refetching the same data repeatedly. When you request a URL that was recently scraped (by you or other Firecrawl users), Firecrawl can return the cached version instead of scraping it again. This improves response times, reduces server load, and saves API credits.

V2 introduces the max_age parameter to control how long cached content remains valid (in milliseconds). Here's how it works:

import time

# First request - scraped fresh unless a cached copy under 1 hour old already exists

start_time = time.time()

data1 = app.scrape(

    "https://example.com",

    formats=["markdown"],

    max_age=3600000  # Accept cached content up to 1 hour old

)

first_request_time = time.time() - start_time

# Second request within 1 hour - eligible to be served from cache

start_time = time.time()

data2 = app.scrape(

    "https://example.com",

    formats=["markdown"],

    max_age=3600000

)

second_request_time = time.time() - start_time

print(f"First request: {first_request_time:.2f}s (cache: {data1.metadata.cache_state})")

print(f"Second request: {second_request_time:.2f}s (cache: {data2.metadata.cache_state})")

First request: 0.82s (cache: hit)

Second request: 0.89s (cache: hit)

The cache_state in metadata shows whether content came from cache ("hit") or was freshly scraped ("miss"). In this run both requests report "hit", which suggests the page had already been scraped within the last hour (the cache is shared with other Firecrawl users), so even the first call was served from cache and the two timings are nearly identical.
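To make the hit/miss behavior concrete, here is a minimal sketch of a freshness check in plain Python. It is not Firecrawl's implementation: the FreshnessCache class, the fake_scrape function, and the rule that a copy exactly max_age old counts as stale are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CacheEntry:
    content: str
    stored_at_ms: int  # when the page was fetched, in milliseconds


class FreshnessCache:
    """Toy in-memory cache keyed by URL (illustrative only, not Firecrawl's code)."""

    def __init__(self, fetch):
        self._fetch = fetch  # callable(url) -> str, stands in for a real scrape
        self._entries: dict[str, CacheEntry] = {}

    def get(self, url: str, max_age_ms: int, now_ms: int) -> tuple[str, str]:
        """Return (content, cache_state), where cache_state is "hit" or "miss"."""
        entry = self._entries.get(url)
        # A cached copy is reused only if it is strictly younger than max_age_ms,
        # so max_age_ms=0 always forces a fresh fetch.
        if entry is not None and now_ms - entry.stored_at_ms < max_age_ms:
            return entry.content, "hit"
        content = self._fetch(url)
        self._entries[url] = CacheEntry(content, now_ms)
        return content, "miss"


calls = []


def fake_scrape(url: str) -> str:
    # Hypothetical stand-in for a network scrape; records each call.
    calls.append(url)
    return f"content of {url} (fetch #{len(calls)})"


cache = FreshnessCache(fake_scrape)
ONE_HOUR_MS = 3_600_000

first = cache.get("https://example.com", ONE_HOUR_MS, now_ms=0)
second = cache.get("https://example.com", ONE_HOUR_MS, now_ms=60_000)  # 1 minute later
forced = cache.get("https://example.com", 0, now_ms=120_000)  # max_age of 0
stale = cache.get("https://example.com", ONE_HOUR_MS, now_ms=120_000 + ONE_HOUR_MS)

print(first[1], second[1], forced[1], stale[1])  # miss hit miss miss
```

The second call is a hit because the stored copy is one minute old, while the last call misses because the copy refreshed by the forced request has aged a full hour by then.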

Common max_age values:

- 0: Force fresh scraping (ignores cache)

- 3600000: 1 hour (good for frequently updated content)

- 86400000: 1 day (suitable for most use cases)

- 172800000: 2 days (Firecrawl's default setting)
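Since max_age is expressed in milliseconds, it can be less error-prone to derive the values than to type them by hand. The helper below is a small sketch using only the standard library; the function name max_age_ms is hypothetical and not part of the Firecrawl SDK.

```python
from datetime import timedelta


def max_age_ms(**duration) -> int:
    """Convert timedelta keyword arguments (hours=, days=, ...) to whole milliseconds."""
    return timedelta(**duration) // timedelta(milliseconds=1)


FORCE_FRESH = max_age_ms()      # 0
ONE_HOUR = max_age_ms(hours=1)  # 3600000
ONE_DAY = max_age_ms(days=1)    # 86400000
TWO_DAYS = max_age_ms(days=2)   # 172800000

print(FORCE_FRESH, ONE_HOUR, ONE_DAY, TWO_DAYS)
```

The resulting integers can then be passed as the max_age argument in the scrape calls shown earlier.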

Enhanced Stealth Mode - Reliable Request Handling

V2 includes sophisticated request handling capabilities that automatically manage complex websites, without requiring additional configuration.

# Test stealth capabilities with user agent detection

data = app.scrape(

    "https://httpbin.org/user-agent",

    formats=[

        "markdown",

        {"type": "json", "prompt": "Extract the user agent information"}

    ]

)

print(f"Status: {data.metadata.status_code}")

print(f"Extracted data: {data.json}")

print(f"Raw content: {data.markdown}")

Status: 200

Extracted data: {'userAgent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36'}

Raw content: ```json

{

  "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/138.0.0.0 Safari/537.36"

}

```

The stealth mode automatically rotates user agents, manages browser fingerprinting, and handles complex anti-bot measures, making it much more reliable for scraping protected websites.
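The raw markdown in this example wraps the JSON in a fenced block, so checking the reported user agent without the LLM extraction step means parsing that fence yourself. The following is a rough sketch with the standard library; the helper names json_from_markdown and looks_like_browser are hypothetical, the sample string is a shortened version of the output above, and the browser check is a simple heuristic rather than a real detection method.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built this way so the example embeds cleanly

# Matches the first fenced block labelled json and captures its body.
JSON_FENCE_RE = re.compile(FENCE + r"json\s*(.*?)" + FENCE, re.DOTALL)


def json_from_markdown(markdown: str) -> dict:
    """Parse the first fenced json block found in a markdown string."""
    match = JSON_FENCE_RE.search(markdown)
    if match is None:
        raise ValueError("no fenced json block found")
    return json.loads(match.group(1))


def looks_like_browser(user_agent: str) -> bool:
    """Crude heuristic: browser user agents start with Mozilla/5.0."""
    return user_agent.startswith("Mozilla/5.0")


# Shortened sample shaped like the raw content printed above.
raw_markdown = (
    FENCE + "json\n"
    '{\n  "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/138.0.0.0"\n}\n'
    + FENCE
)

parsed = json_from_markdown(raw_markdown)
print(parsed["user-agent"])
print(looks_like_browser(parsed["user-agent"]))
```

In a real run, data.markdown from the scrape above would take the place of raw_markdown.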

Location-Based Scraping - Geographic Content Control

V2 provides location-specific scraping that delivers content as it appears to users in different geographic regions. This is valuable for monitoring regional search results, testing geo-targeted content, and accessing location-specific information.

from pydantic import BaseModel, Field

class SearchResult(BaseModel):

    query: str = Field(description="The search query performed")

    location_info: str = Field(description="Geographic location information")

    top_results: list[str] = Field(description="Top search result titles")

# Test location-aware search results

data = app.scrape(

    "https://www.google.com/search?q=pizza+delivery",

    formats=[

        "markdown",

        {

            "type": "json",

            "schema": SearchResult,

            "prompt": "Extract search query, location info, and top business names from results"

        }

    ]

)

print(f"Status: {data.metadata.status_code}")

print(f"Search data: {data.json}")

Status: 200

Search data: {

    'query': 'pizza delivery',

    'location_info': 'United States',

    'top_results': [

        'i Fratelli Pizza Las Colinas',

        'Bravo Pizza',

        'Cosmo\'s Pizza',

        'Papa Johns Pizza Delivery & Carryout',

        'Domino\'s: Pizza Delivery & Carryout, Pasta, Chicken & More'

    ]

}

Notice how search results return location-specific businesses that would differ if the request originated from another country or region. This feature is ideal for e-commerce price monitoring, content localization testing, and competitive analysis across different markets.
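One way to use such output for localization testing is to compare the top_results lists collected from two regions. The sketch below assumes you already have two lists of result titles; the function name regional_diff is hypothetical, and the second region's data is invented purely for illustration.

```python
def regional_diff(results_a: list[str], results_b: list[str]) -> dict[str, list[str]]:
    """Split two ranked result lists into shared and region-specific entries, keeping rank order."""
    set_a, set_b = set(results_a), set(results_b)
    return {
        "shared": [r for r in results_a if r in set_b],
        "only_a": [r for r in results_a if r not in set_b],
        "only_b": [r for r in results_b if r not in set_a],
    }


# Titles taken from the example output above.
us_results = [
    "i Fratelli Pizza Las Colinas",
    "Bravo Pizza",
    "Papa Johns Pizza Delivery & Carryout",
]

# Hypothetical results for a second region (not real data).
other_results = [
    "Papa Johns Pizza Delivery & Carryout",
    "Example Pizzeria",
]

diff = regional_diff(us_results, other_results)
print(diff["shared"])
print(diff["only_a"])
print(diff["only_b"])
```

Entries that appear in only one list are the geo-targeted ones worth inspecting further.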

Advanced Search Integration - Multi-Source Discovery

V2 introduces comprehensive AI search capabilities that can query across web, news, and image sources, expanding beyond traditional web scraping. This makes Firecrawl a complete web search API for modern applications.

# Use the new search functionality

search_results = app.search("firecrawl web scraping", limit=3)

print(f"Search completed")

print(f"Number of web results: {len(search_results.web)}")

for i, result in enumerate(search_results.web[:2]):

    print(f"\nResult {i+1}:")

    print(f"Title: {result.title}")

    print(f"URL: {result.url}")

    print(f"Description: {result.description}")

    print(f"Category: {result.category}")

Search completed

Number of web results: 3

Result 1:

Title: Firecrawl - The Web Data API for AI

URL: https://www.firecrawl.dev/

Description: The web crawling, scraping, and search API for AI. Built for scale. Firecrawl delivers the entire internet to AI agents and builders.

Category: None

Result 2:

Title: firecrawl/firecrawl: Turn entire websites into LLM-ready ... - GitHub

URL: https://github.com/firecrawl/firecrawl

Description: Firecrawl allows you to perform various actions on a web page before scraping its content. This is particularly useful for interacting with dynamic content, ...

Category: github

The search integration allows you to discover relevant content across multiple sources before deciding what to scrape, making it ideal for research, competitive analysis, and content discovery workflows. Combining AI search with scraping gives AI applications and intelligent agents a single tool for both finding and extracting web content.
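A common next step in a discover-then-scrape workflow is deciding which result URLs to scrape, for example by capping how many pages are taken from each site. The following sketch works on plain objects with title, url, description, and category attributes like those printed above. The WebResult dataclass and pick_urls_to_scrape function are hypothetical stand-ins, not SDK types, and the third sample URL is invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass
class WebResult:
    """Hypothetical stand-in mirroring the fields printed in the search example."""
    title: str
    url: str
    description: str = ""
    category: Optional[str] = None


def pick_urls_to_scrape(results: list[WebResult], max_per_host: int = 1) -> list[str]:
    """Keep at most max_per_host URLs per hostname, preserving result order."""
    seen: dict[str, int] = {}
    picked: list[str] = []
    for result in results:
        host = urlsplit(result.url).hostname or ""
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com as one site
        if seen.get(host, 0) >= max_per_host:
            continue
        seen[host] = seen.get(host, 0) + 1
        picked.append(result.url)
    return picked


results = [
    WebResult("Firecrawl - The Web Data API for AI", "https://www.firecrawl.dev/"),
    WebResult("firecrawl/firecrawl", "https://github.com/firecrawl/firecrawl", category="github"),
    WebResult("Hypothetical second page", "https://firecrawl.dev/blog"),  # invented URL
]

urls = pick_urls_to_scrape(results)
print(urls)
```

Each selected URL could then be passed to the scrape call used throughout this guide.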

Where to Go from Here

In this guide, we've explored Firecrawl's /scrape endpoint and its powerful capabilities as a modern web scraping API. We covered:

- Basic scraping setup and configuration options

- Multiple output formats including HTML, markdown, and screenshots

- Structured data extraction using both natural language prompts and Pydantic schemas

- Batch operations for processing multiple URLs quickly

- Advanced techniques for scraping JavaScript-heavy dynamic websites

Through practical examples like structured data extraction and form data scraping using dynamic interactions, we've shown how Firecrawl simplifies complex scraping tasks while providing adaptable output formats suitable for data engineering and AI/ML pipelines.

The combination of LLM-powered extraction, structured schemas, and browser automation capabilities makes Firecrawl a versatile web crawling API for gathering high-quality web data at scale. Whether you're building training datasets, implementing scraping for AI agents, monitoring websites, or conducting research, Firecrawl provides the robust infrastructure needed for modern web data extraction.

To discover more of what Firecrawl has to offer, refer to our guide on the /crawl endpoint, which scrapes websites in their entirety with a single command while using the /scrape endpoint under the hood.

For more hands-on use cases of Firecrawl, these posts may interest you as well:

data from the web