What is PHP web scraping and why should you care?

PHP web scraping is using PHP code to extract data from websites. You make HTTP requests, parse the HTML, and pull out the information you need.

Not every website offers an API. You might need to track competitor prices, collect job listings, or aggregate content. If data exists on a webpage, you can scrape it.

PHP makes sense if you already work with it. You can integrate scraped data into Laravel or WordPress without switching languages. PHP runs on most hosting environments, making deployment straightforward.

This guide covers HTML structure, HTTP requests, pagination, JavaScript sites, and when to use traditional tools versus modern solutions.

PHP web scraping libraries: what's available

Before diving into code, it helps to know what tools exist. The PHP ecosystem has a lot of options depending on what layer of the problem you're solving.

HTTP clients - these fetch the raw HTML:

- cURL - built into PHP, no installation required. Verbose API but maximum control.

- Guzzle - the most popular PHP HTTP client. Cleaner API than cURL, excellent error handling, supports concurrent requests via promises.

- Httpful - a chainable alternative to cURL for simpler use cases.

HTML parsers - these extract data from the fetched HTML:

- Simple HTML DOM Parser - CSS selector-based parsing, jQuery-like syntax. Good for beginners.

- DiDOM - faster than Simple HTML DOM, supports both CSS selectors and XPath.

- PHP's built-in DOMDocument + DOMXPath - no library needed, XPath support, tolerates messy HTML (call libxml_use_internal_errors(true) to suppress the parser warnings).

- phpQuery - a PHP port of jQuery selectors.

Full scraping frameworks - these handle the whole pipeline:

- Roach PHP - a complete web scraping toolkit inspired by Python's Scrapy. Handles spiders, pipelines, middleware, and scheduling.

- PHP-Spider - a crawling library with breadth-first and depth-first search support.

- Symfony BrowserKit + DomCrawler - Symfony's approach to browser simulation and DOM traversal. Replaced the deprecated Goutte library.

Headless browsers - for JavaScript-rendered sites:

- Symfony Panther - controls Chrome or Firefox via WebDriver, same API as Symfony BrowserKit.

- php-webdriver - PHP bindings for Selenium WebDriver.

- Chrome PHP - control Chrome/Chromium directly from PHP without Selenium.

For most projects, you'll use an HTTP client paired with an HTML parser. This guide uses Guzzle + Simple HTML DOM Parser, which covers the majority of scraping tasks. For JavaScript-heavy sites, we'll use Firecrawl.

Understanding HTML structure for PHP web scraping

Instead of writing HTML to build pages, you're reading someone else's HTML to extract data. This section covers HTML from a scraping perspective.

HTML structure basics

HTML uses tags to structure content. A book title might look like this:

<h3 class="book-title">A Light in the Attic</h3>

The class="book-title" gives it a label you can target. The text between the tags is what you want to extract.

A product listing might be structured like this:

<article class="product">

<h3 class="title">Book Name</h3>

<p class="price">£51.77</p>

</article>

The <article> tag wraps one product, with the title and price as its child elements.

Finding elements with classes and IDs

A class like class="price" can appear multiple times on a page. An ID like id="main-content" appears only once.

Open books.toscrape.com in your browser, right-click any book title, and select "Inspect."

You'll see the browser's DevTools panel with the HTML code. Hovering over elements highlights them on the page. Look at the structure: the book title "A Light in the Attic" appears inside an <a> tag, inside an <h3> tag, inside an <article class="product_pod">. The price sits in a separate <div class="product_price"> below it.

You inspect the page, identify patterns, and note the classes or tags that wrap your target data.

Using CSS selectors to target elements

CSS selectors let you target elements precisely:

- .price selects all elements with class "price"

- #main selects the element with id "main"

- article selects all <article> tags

- .product .price selects price elements inside product containers

Once you identify what to look for, the scraping code becomes straightforward.
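To see what each selector above actually matches, here is a small sketch that uses only PHP's built-in DOMDocument and DOMXPath. Many PHP CSS-selector libraries work by converting selectors to XPath internally; the XPath strings below are hand-written equivalents for illustration, not the output of any particular library, and the sample markup (including the id="main" wrapper) is hypothetical.

```php
<?php
// Hypothetical sample markup modelled on the product listing above
$html = <<<HTML
<div id="main">
  <article class="product">
    <h3 class="title">Book Name</h3>
    <p class="price">£51.77</p>
  </article>
  <article class="product">
    <h3 class="title">Another Book</h3>
    <p class="price">£12.00</p>
  </article>
</div>
<p class="price">£0.00 (not inside a product)</p>
HTML;

// XPath test for "element has this class" (works for elements with several classes)
function classTest(string $class): string
{
    return sprintf("contains(concat(' ', normalize-space(@class), ' '), ' %s ')", $class);
}

libxml_use_internal_errors(true); // older libxml versions warn about HTML5 tags like <article>
$doc = new DOMDocument();
$doc->loadHTML('<?xml encoding="UTF-8">' . $html); // read the input as UTF-8
$xpath = new DOMXPath($doc);

// CSS selector => hand-written XPath equivalent
$selectors = [
    '.price'          => '//*[' . classTest('price') . ']',
    '#main'           => "//*[@id='main']",
    'article'         => '//article',
    '.product .price' => '//*[' . classTest('product') . ']//*[' . classTest('price') . ']',
];

foreach ($selectors as $css => $query) {
    printf("%-16s matches %d element(s)\n", $css, $xpath->query($query)->length);
}
```

Note how .price also catches the stray price outside any product, while .product .price is scoped to product containers only.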

Your first PHP scraping project: book prices

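You need PHP 7.4 or higher and Composer installed (the Firecrawl examples later use named arguments, which require PHP 8.0+). We're scraping books.toscrape.com, a demo site built for scraping practice, so it won't block you or change its structure unexpectedly.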

Setting up your environment

Install Composer if you don't have it:

curl -sS https://getcomposer.org/installer | php

sudo mv composer.phar /usr/local/bin/composer

More information about Composer installation can be found in the Composer documentation.

Create a project folder and initialize Composer:

mkdir php-scraper

cd php-scraper

composer init --no-interaction

Setting up your scraping libraries

PHP's built-in tools for fetching and parsing HTML are fairly low-level, so we'll use two libraries that work well together.

Guzzle is an HTTP client for PHP. It handles HTTP communication: sending requests and receiving responses. You could use PHP's file_get_contents() or curl, but Guzzle gives you a cleaner API and better error handling.

Simple HTML DOM Parser lets you search through HTML using CSS selectors, just as you would with jQuery or browser DevTools. Without it, you'd fall back to PHP's DOMDocument with XPath (more verbose, as shown later) or regex (fragile on real-world HTML).

Together, they split the work: Guzzle fetches the HTML, Simple HTML DOM Parser finds the data inside it.

If you prefer not to add a dependency, PHP's built-in cURL can do the same job as Guzzle. Here's the equivalent fetch with cURL:

$ch = curl_init('https://books.toscrape.com/');

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // return the response as a string instead of printing it

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, true); // follow redirects

$html = curl_exec($ch); // false if the request failed

if ($html === false) {

echo 'cURL error: ' . curl_error($ch) . "\n";

}

curl_close($ch);

cURL is more verbose but has zero dependencies - useful when you're adding a quick scraper to an existing project and don't want to touch composer.json. For anything beyond basic GET requests (timeouts, retries, concurrent requests), Guzzle's API is cleaner. This guide uses Guzzle throughout.
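For the timeouts mentioned above, a reusable cURL helper might look like the sketch below. The function name fetchHtml and the User-Agent string are hypothetical placeholders; the helper treats "no response at all" and any HTTP status of 400 or above as a failure.

```php
<?php
// Hypothetical helper: fetch a URL with cURL, with timeouts and an HTTP status check.
// Returns the response body, or null on a network error or an HTTP status of 400+.
function fetchHtml(string $url, int $timeoutSeconds = 10): ?string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true,            // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true,            // follow redirects
        CURLOPT_MAXREDIRS      => 5,               // but not forever
        CURLOPT_CONNECTTIMEOUT => $timeoutSeconds, // max seconds to open the connection
        CURLOPT_TIMEOUT        => $timeoutSeconds, // max seconds for the whole transfer
        CURLOPT_USERAGENT      => 'MyScraper/1.0 (contact: you@example.com)', // placeholder
    ]);

    $body = curl_exec($ch);
    $status = curl_getinfo($ch, CURLINFO_RESPONSE_CODE); // 0 when no response arrived

    if ($body === false || $status === 0 || $status >= 400) {
        error_log("Failed to fetch $url (HTTP $status): " . curl_error($ch));
        return null;
    }

    // No curl_close() needed: the handle is released when $ch goes out of scope
    return $body;
}
```

Call it as $html = fetchHtml('https://books.toscrape.com/'); and skip parsing whenever it returns null.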

Install both libraries with Composer:

composer require guzzlehttp/guzzle

composer require voku/simple_html_dom

Writing your first scraper

Your goal: extract one book's title and price from the homepage. Create a file called scrape.php:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

// Fetch the HTML

$client = new Client();

$response = $client->request('GET', 'https://books.toscrape.com/');

$html = $response->getBody()->getContents();

// Load HTML into parser

$dom = HtmlDomParser::str_get_html($html);

// Find the first book's title

$titleElement = $dom->findOne('article.product_pod h3 a');

$title = $titleElement->getAttribute('title');

// Find the first book's price

$priceElement = $dom->findOne('article.product_pod .price_color');

$price = $priceElement->text();

// Print the results

echo "Title: " . $title . "\n";

echo "Price: " . $price . "\n";

?>

Let's break this down step by step:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

Start by loading Composer's autoloader and importing the classes you need.

// Fetch the HTML

$client = new Client();

$response = $client->request('GET', 'https://books.toscrape.com/');

$html = $response->getBody()->getContents();

Create a Guzzle client and send a GET request to the URL. The getBody()->getContents() method returns the HTML as a string.

// Load HTML into parser

$dom = HtmlDomParser::str_get_html($html);

Load the HTML string into Simple HTML DOM Parser so you can query it with CSS selectors.

// Find the first book's title

$titleElement = $dom->findOne('article.product_pod h3 a');

$title = $titleElement->getAttribute('title');

Use findOne() with a CSS selector to locate the first book title. The selector article.product_pod h3 a means "find an <a> tag inside an <h3> inside an <article> with class product_pod." Read the title attribute rather than the link text: on this site the visible link text is cut short for long titles.

// Find the first book's price

$priceElement = $dom->findOne('article.product_pod .price_color');

$price = $priceElement->text();

Find the price element using the .price_color selector. The text() method extracts the text content.

// Print the results

echo "Title: " . $title . "\n";

echo "Price: " . $price . "\n";

?>

Run it:

php scrape.php

You'll see output like:

Title: A Light in the Attic

Price: £51.77

You just scraped your first website. The pattern: fetch HTML, parse it, find elements with selectors, extract data. Next, we'll scale up to scrape multiple items.

Extracting multiple books from a page

Scraping one book proves the concept, but real projects need data at scale. The homepage shows 20 books, so we'll grab all of them.

Update your scrape.php:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

// Fetch the HTML

$client = new Client();

$response = $client->request('GET', 'https://books.toscrape.com/');

$html = $response->getBody()->getContents();

// Parse it

$dom = HtmlDomParser::str_get_html($html);

// Find all books

$books = [];

$bookElements = $dom->find('article.product_pod');

foreach ($bookElements as $bookElement) {

// Get title

$titleElement = $bookElement->findOne('h3 a');

if (!$titleElement) continue;

$title = $titleElement->getAttribute('title');

// Get price

$priceElement = $bookElement->findOne('.price_color');

if (!$priceElement) continue;

$price = $priceElement->text();

// Store in array

$books[] = [

'title' => $title,

'price' => $price

];

}

// Print results

echo "Found " . count($books) . " books:\n\n";

foreach ($books as $index => $book) {

echo ($index + 1) . ". " . $book['title'] . " - " . $book['price'] . "\n";

}

?>

Run it:

php scrape.php

You'll see:

Found 20 books:

1. A Light in the Attic - £51.77

2. Tipping the Velvet - £53.74

3. Soumission - £50.10

4. Sharp Objects - £47.82

5. Sapiens: A Brief History of Humankind - £54.23

...

The important change here is using find('article.product_pod') to get all book elements instead of just the first one. This returns a collection you can loop through.

Inside the loop, use $bookElement->findOne() instead of $dom->findOne() to scope searches to each book's HTML. Add error handling with if (!$titleElement) continue; to skip books if elements don't exist.

Build an associative array with the book data instead of storing separate title and price arrays.

You now have an array of all books. This pattern scales to any similar task: find container elements, loop through them, extract data from each.
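Scraped values arrive as strings, so each price is text like "£51.77". If you plan to sort, sum, or store prices as numbers, a small normalization step helps. A minimal sketch with a hypothetical parsePrice() helper, assuming "." is the decimal separator and "," only ever a thousands separator (true for this site, not for every locale):

```php
<?php
// Hypothetical helper: turn a scraped price string such as "£51.77" into a float.
// Assumes "." is the decimal separator and "," a thousands separator.
function parsePrice(string $raw): ?float
{
    // Keep only digits and the decimal point (drops currency symbols, spaces, commas)
    $clean = preg_replace('/[^0-9.]/', '', $raw);
    return is_numeric($clean) ? (float) $clean : null;
}

// Sample rows in the same shape as the $books array built above
$books = [
    ['title' => 'A Light in the Attic', 'price' => '£51.77'],
    ['title' => 'Tipping the Velvet', 'price' => '£53.74'],
    ['title' => 'Soumission', 'price' => '£50.10'],
];

// Add a numeric copy of each price next to the original text
$books = array_map(function (array $book): array {
    $book['price_value'] = parsePrice($book['price']);
    return $book;
}, $books);

// Numeric prices can now be sorted and summed
usort($books, fn(array $a, array $b): int => $a['price_value'] <=> $b['price_value']);
printf("Cheapest: %s (%.2f)\n", $books[0]['title'], $books[0]['price_value']); // Cheapest: Soumission (50.10)
printf("Total: %.2f\n", array_sum(array_column($books, 'price_value')));     // Total: 155.61
```

Keeping the original string alongside the parsed value makes it easy to spot rows where parsing returned null.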

Alternative: XPath with PHP's built-in DOMDocument

CSS selectors work well for most tasks, but PHP also has built-in XPath support via DOMDocument and DOMXPath - no extra library required. XPath is more expressive for complex queries like "find all <a> tags that are direct children of <h3> elements inside a <ul> with a specific class."

Here's the same book scraper rewritten with XPath:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

$client = new Client();

$response = $client->request('GET', 'https://books.toscrape.com/');

$html = (string) $response->getBody();

// Suppress warnings from malformed HTML

libxml_use_internal_errors(true);

$doc = new DOMDocument();

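// Prefix an encoding hint so libxml reads the page as UTF-8 ("£" can come out garbled if the charset isn't detected)

$doc->loadHTML('<?xml encoding="UTF-8">' . $html);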

$xpath = new DOMXPath($doc);

$books = [];

// XPath: find all <article> elements with class product_pod

$articles = $xpath->query('//article[contains(@class, "product_pod")]');

foreach ($articles as $article) {

// Get title from the <a> tag's title attribute inside <h3>

$titleNodes = $xpath->query('.//h3/a', $article);

$title = $titleNodes->length > 0 ? $titleNodes->item(0)->getAttribute('title') : '';

// Get price text from element with class price_color

$priceNodes = $xpath->query('.//*[contains(@class, "price_color")]', $article);

$price = $priceNodes->length > 0 ? $priceNodes->item(0)->textContent : '';

$books[] = ['title' => $title, 'price' => $price];

}

foreach ($books as $book) {

echo $book['title'] . ' - ' . $book['price'] . "\n";

}

?>

XPath syntax (.//h3/a means "find an <a> that is a direct child of an <h3> anywhere inside the current node") is more verbose than CSS selectors but gives you more control. Use it when:

- You need to navigate up the DOM tree (XPath can select parent elements; CSS selectors can't)

- You need to match on text content (e.g., //h2[text()="Price"])

- You want zero dependencies beyond PHP itself
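To make the first two points concrete, here is a sketch using PHP's built-in DOMXPath. The markup is a trimmed, hypothetical imitation of a book listing plus an invented price table; the first query climbs from a known title up to its enclosing <article> with the ancestor:: axis, and the second matches a table header by its text.

```php
<?php
$html = <<<HTML
<section>
  <article class="product_pod">
    <h3><a href="soumission_998/index.html" title="Soumission">Soumission</a></h3>
    <div class="product_price"><p class="price_color">£50.10</p></div>
  </article>
  <article class="product_pod">
    <h3><a href="sharp-objects_997/index.html" title="Sharp Objects">Sharp Objects</a></h3>
    <div class="product_price"><p class="price_color">£47.82</p></div>
  </article>
  <table><tr><th>Price</th><td>£47.82</td></tr></table>
</section>
HTML;

libxml_use_internal_errors(true);
$doc = new DOMDocument();
$doc->loadHTML('<?xml encoding="UTF-8">' . $html);
$xpath = new DOMXPath($doc);

// 1. Navigate up: start at the link with a given title, climb to its nearest <article>,
//    then come back down to that article's price.
$priceNode = $xpath->query(
    '//a[@title="Soumission"]/ancestor::article[1]//p[contains(@class, "price_color")]'
)->item(0);
echo 'Soumission costs ' . ($priceNode ? $priceNode->textContent : 'n/a') . "\n";

// 2. Match on text: the <th> whose text is exactly "Price", then the <td> right after it.
$cellNode = $xpath->query('//th[normalize-space(text())="Price"]/following-sibling::td[1]')->item(0);
echo 'Price cell: ' . ($cellNode ? $cellNode->textContent : 'n/a') . "\n";
```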

For most straightforward scraping, CSS selectors via Simple HTML DOM Parser are faster to write. Reach for XPath when your selector logic gets complex.

How do you handle multiple pages and pagination?

You've scraped 20 books from one page, but books.toscrape.com has 50 pages with 1,000 books total. To scrape all of them, you need to handle pagination.

Start by examining the URL pattern. Visit the site and click "next" a few times:

- Page 1: https://books.toscrape.com/catalogue/page-1.html

- Page 2: https://books.toscrape.com/catalogue/page-2.html

- Page 3: https://books.toscrape.com/catalogue/page-3.html

The pattern is clear: page-{number}.html. You can build these URLs in a loop.

Here's how to update your scrape.php to handle multiple pages:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

$client = new Client();

$allBooks = [];

// Scrape pages 1-5

for ($pageNum = 1; $pageNum <= 5; $pageNum++) {

echo "Scraping page $pageNum...\n";

// Build the URL

$url = "https://books.toscrape.com/catalogue/page-$pageNum.html";

// Fetch HTML

$response = $client->request('GET', $url);

$html = $response->getBody()->getContents();

// Parse it

$dom = HtmlDomParser::str_get_html($html);

// Find all books on this page

$bookElements = $dom->find('article.product_pod');

foreach ($bookElements as $bookElement) {

$titleElement = $bookElement->findOne('h3 a');

if (!$titleElement) continue;

$priceElement = $bookElement->findOne('.price_color');

if (!$priceElement) continue;

$allBooks[] = [

'title' => $titleElement->getAttribute('title'),

'price' => $priceElement->text(),

'page' => $pageNum

];

}

// Be respectful: wait 1 second between requests

if ($pageNum < 5) {

sleep(1);

}

}

// Print results

echo "\nTotal books scraped: " . count($allBooks) . "\n";

echo "First 5 books:\n";

foreach (array_slice($allBooks, 0, 5) as $index => $book) {

echo ($index + 1) . ". " . $book['title'] . " (Page " . $book['page'] . ") - " . $book['price'] . "\n";

}

?>

Run it:

php scrape.php

You'll see:

Scraping page 1...

Scraping page 2...

Scraping page 3...

Scraping page 4...

Scraping page 5...

Total books scraped: 100

First 5 books:

1. A Light in the Attic (Page 1) - £51.77

2. Tipping the Velvet (Page 1) - £53.74

3. Soumission (Page 1) - £50.10

4. Sharp Objects (Page 1) - £47.82

5. Sapiens: A Brief History of Humankind (Page 1) - £54.23

The pagination loop scrapes pages 1 through 5. To scrape all 50 pages, change <= 5 to <= 50. The URL is built dynamically using the page number with string interpolation.

For each page, fetch the HTML and use the same scraping logic from the previous section to find all books and extract their data. Add the page number to each book to track where the data came from.

The sleep(1) function pauses for 1 second between requests to avoid getting blocked. The delay is skipped after the last page.

The $allBooks array accumulates data from each page into one collection.

You can also detect the last page automatically by following the "next" button link instead of counting pages. This works regardless of the URL pattern, as long as the site has a "next" link:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

$client = new Client();

$allBooks = [];

$url = 'https://books.toscrape.com/catalogue/page-1.html';

while ($url) {

echo "Scraping: $url\n";

$response = $client->request('GET', $url);

$dom = HtmlDomParser::str_get_html((string) $response->getBody());

foreach ($dom->find('article.product_pod') as $bookElement) {

$titleElement = $bookElement->findOne('h3 a');

$priceElement = $bookElement->findOne('.price_color');

if (!$titleElement || !$priceElement) continue;

$allBooks[] = [

'title' => $titleElement->getAttribute('title'),

'price' => $priceElement->text(),

];

}

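// Find the "next" link and follow it; stop when there is none (no element or an empty href)

$nextButton = $dom->findOne('li.next a');

$nextHref = $nextButton ? $nextButton->getAttribute('href') : '';

$url = $nextHref !== ''

? 'https://books.toscrape.com/catalogue/' . $nextHref

: null;

// Be polite: pause only when there is another page to fetch

if ($url) {

sleep(1);

}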

}

echo "Total books: " . count($allBooks) . "\n";

?>

This approach is more robust than hardcoding page numbers. If the site adds or removes pages, your scraper still works correctly.
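The loop above builds each next URL by prepending a fixed catalogue path, which works only because the "next" hrefs on those pages are relative to /catalogue/. Elsewhere, hrefs may be absolute, root-relative, or start with ../, so a more general option is to resolve each href against the URL of the page it came from. If you already depend on Guzzle, GuzzleHttp\Psr7\UriResolver::resolve() from the guzzlehttp/psr7 package handles this fully. The sketch below is a simplified, hypothetical resolveUrl() helper that shows the idea without dependencies.

```php
<?php
// Hypothetical helper: resolve an href found on $pageUrl into an absolute URL.
// A simplified take on RFC 3986 resolution: handles absolute, protocol-relative,
// root-relative and ../-style links, but not every edge case
// (e.g. hrefs that are only "?query" or "#fragment").
function resolveUrl(string $pageUrl, string $href): string
{
    // Already absolute (has a scheme such as "https")
    if (is_string(parse_url($href, PHP_URL_SCHEME))) {
        return $href;
    }

    $base = parse_url($pageUrl);
    $scheme = $base['scheme'] ?? 'https';
    $origin = $scheme . '://' . $base['host'] . (isset($base['port']) ? ':' . $base['port'] : '');

    // Protocol-relative: "//cdn.example.com/file.js"
    if (substr($href, 0, 2) === '//') {
        return $scheme . ':' . $href;
    }

    if (substr($href, 0, 1) === '/') {
        $path = $href; // root-relative: replaces the whole path
    } else {
        // Relative: append to the directory of the current page
        $basePath = $base['path'] ?? '/';
        $path = substr($basePath, 0, strrpos($basePath, '/') + 1) . $href;
    }

    // Set aside any query string or fragment before normalizing the path
    $cut = strcspn($path, '?#');
    $suffix = substr($path, $cut);
    $path = substr($path, 0, $cut);

    // Collapse "." and ".." segments; $segments[0] is the empty root segment
    $segments = [];
    foreach (explode('/', $path) as $segment) {
        if ($segment === '..') {
            if (count($segments) > 1) {
                array_pop($segments);
            }
        } elseif ($segment !== '.') {
            $segments[] = $segment;
        }
    }

    return $origin . implode('/', $segments) . $suffix;
}
```

Inside the loop, you would pass the current page URL and the raw href, for example resolveUrl($url, $nextButton->getAttribute('href')).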

Saving scraped data to a CSV file

Once you have an array of scraped data, you'll often want to save it somewhere. CSV is the simplest option - it opens in Excel or Google Sheets and works with most data pipelines.

PHP's built-in fputcsv() handles this cleanly:

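<?php

// Assume $allBooks is already populated from your scraper

$csvFilePath = 'books.csv';

if (empty($allBooks)) {

exit("Nothing to save: \$allBooks is empty\n");

}

$file = fopen($csvFilePath, 'w');

if ($file === false) {

exit("Could not open $csvFilePath for writing\n");

}

// Write header row using the keys of the first item

fputcsv($file, array_keys($allBooks[0]));

// Write each book as a row (every row must have the same keys in the same order)

foreach ($allBooks as $book) {

fputcsv($file, $book);

}

fclose($file);

echo "Saved " . count($allBooks) . " books to $csvFilePath\n";

?>

Output file (books.csv):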

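title,price

"A Light in the Attic",£51.77

"Tipping the Velvet",£53.74

Soumission,£50.10

...

fputcsv() only wraps a field in quotes when it contains characters such as spaces, commas, quotes, or line breaks, which is why Soumission appears unquoted. For larger datasets or when you need to query the data, see the storage options in the Advanced topics section below.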

Memory tip: when using Simple HTML DOM Parser on large pages, call $dom->clear() after you're done extracting to free memory. Without this, processing many pages in a loop can exhaust available memory:

$dom = HtmlDomParser::str_get_html($html);

// ... extract your data ...

$dom->clear(); // release memory before the next iteration

unset($dom);

When do traditional PHP scraping tools stop working?

The methods you've learned so far work great on sites like books.toscrape.com. But some sites return empty data or broken results when you use Guzzle and Simple HTML DOM Parser.

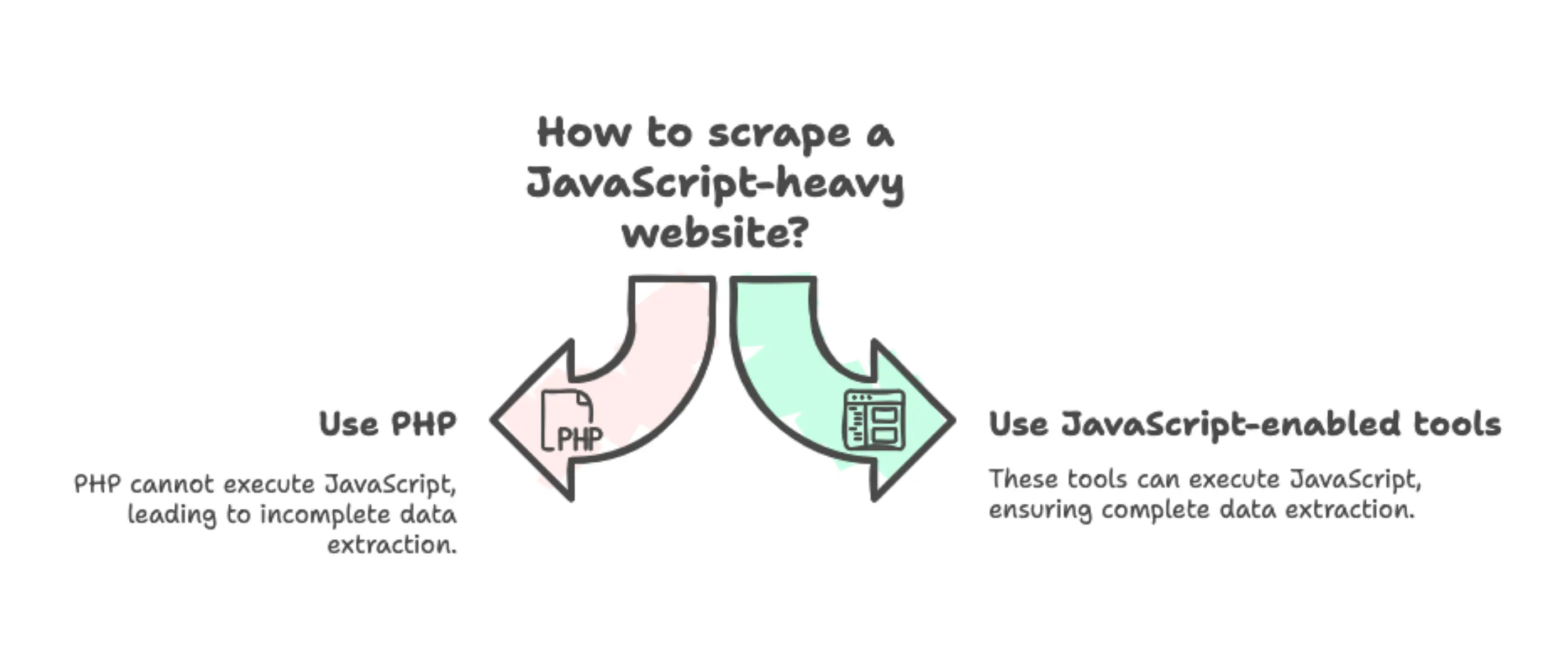

The problem is JavaScript.

The JavaScript problem

Here's what happens with a traditional website:

- Your browser requests a page

- Server sends back complete HTML with all the data

- Browser displays the page

- You scrape the HTML and get the data

Modern websites work differently:

- Your browser requests a page

- Server sends back minimal HTML and JavaScript code

- JavaScript runs in the browser and fetches the real data

- JavaScript builds the page content dynamically

- You see the final page

When you scrape with PHP, you only get the HTML at step 2. The JavaScript never runs, leaving you with incomplete HTML and no data.

Seeing the problem in action

Here's what this looks like in practice. The site quotes.toscrape.com has two versions: one is static HTML, the other uses JavaScript.

The static version works fine with our tools. Save this as scrape_quotes.php to scrape https://quotes.toscrape.com/:

<?php

require 'vendor/autoload.php';

use GuzzleHttp\Client;

use voku\helper\HtmlDomParser;

$client = new Client();

$response = $client->request('GET', 'https://quotes.toscrape.com/');

$html = $response->getBody()->getContents();

$dom = HtmlDomParser::str_get_html($html);

$quotes = $dom->find('.quote');

echo "Found " . count($quotes) . " quotes\n";

?>

Run it:

php scrape_quotes.php

Output:

Found 10 quotes

That works. Now try the JavaScript version at https://quotes.toscrape.com/js/. Change just the URL (adding /js):

$response = $client->request('GET', 'https://quotes.toscrape.com/js/');

Run it again:

php scrape_quotes.php

Output:

Found 0 quotes

Zero quotes. The page looks identical in your browser, but PHP sees nothing.

To understand why, open https://quotes.toscrape.com/js/ in your browser and view the page source (right-click, "View Page Source"). You'll see that the HTML contains no quote markup. There's a <div class="col-md-8"> container, but no quotes inside it, just a <script> tag that builds the quotes in the browser after the page loads. PHP doesn't run JavaScript, so it gets the empty HTML and stops there.
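You can automate the same check from PHP before choosing a tool: count the elements you expect in the raw HTML, and treat zero as a sign that the content is rendered by JavaScript (or that your selector is wrong). A minimal sketch using PHP's built-in DOM extension; countByClass is a hypothetical helper, and the two HTML strings are simplified stand-ins for the static and JavaScript pages, not their real markup.

```php
<?php
// Hypothetical helper: count elements with a given class in raw (unrendered) HTML.
function countByClass(string $html, string $class): int
{
    if (trim($html) === '') {
        return 0; // DOMDocument::loadHTML() rejects empty input
    }
    libxml_use_internal_errors(true);
    $doc = new DOMDocument();
    $doc->loadHTML($html);
    $xpath = new DOMXPath($doc);
    $query = sprintf("//*[contains(concat(' ', normalize-space(@class), ' '), ' %s ')]", $class);
    return $xpath->query($query)->length;
}

// Simplified stand-ins: quotes present in the HTML vs. inserted later by a script
$staticHtml = '<div class="col-md-8"><div class="quote">First</div><div class="quote">Second</div></div>';
$jsHtml = '<div class="col-md-8"></div><script>/* quotes are inserted here in the browser */</script>';

foreach (['static' => $staticHtml, 'javascript' => $jsHtml] as $label => $html) {
    $found = countByClass($html, 'quote');
    echo "$label: $found quote(s)" . ($found === 0 ? " -> probably rendered by JavaScript\n" : "\n");
}
```

In practice you would pass the HTML string returned by Guzzle instead of the inline samples.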

Other challenges you'll hit

JavaScript isn't the only obstacle you'll encounter when scraping real-world sites:

- Anti-bot detection - Sites like Amazon or LinkedIn detect scrapers and block them. They check your headers, browser fingerprint, and behavior patterns.

- CAPTCHAs - Some sites show CAPTCHAs if they think you're a bot. You can't solve these with PHP alone.

- Rate limiting - Sites may block your IP if you make too many requests, even with delays.

- Dynamic content - Infinite scroll, lazy loading, and content that loads as you interact with the page all require browser-like behavior.

The downsides of traditional web scraping tools

The old approach to these problems was headless browsers. Tools like Selenium or Puppeteer run a real browser in the background, execute JavaScript, and return the final HTML.

But they come with serious downsides:

- You need to install and maintain Chrome or Firefox

- They use a lot of memory and CPU

- They're slow (often several seconds per page)

- They break when browser versions update

- You need to write more complex code to handle browser automation

- Hosting gets expensive because of resource usage

For a small project, this might be manageable. For production use, it becomes difficult to maintain.

Modern scraping APIs handle JavaScript rendering, anti-bot measures, and infrastructure. You just send a request and get clean data back. Try Firecrawl to handle JavaScript and anti-bot measures automatically.

PHP web scraping with modern solutions

Services like Firecrawl handle JavaScript execution, anti-bot measures, and infrastructure for you (see our top 10 web scraping tools comparison). You make API calls and get clean, structured data back.

What is Firecrawl?

Firecrawl addresses the JavaScript problem described above:

- Executes JavaScript automatically

- Bypasses anti-bot detection

- Handles infrastructure and scaling

The main advantage: describe what data you want in plain English, and Firecrawl's AI extracts structured data. No manual HTML parsing, no complex selectors, no dealing with changing layouts.

Setting up Firecrawl

Sign up for a free account at firecrawl.dev and get your API key. Install the community PHP SDK:

composer require helgesverre/firecrawl-php-sdk

composer require vlucas/phpdotenv

Store your API key in a .env file:

FIRECRAWL_API_KEY=your-api-key-here

Add .env to your .gitignore to keep credentials secure.

Extracting structured quote data

Let's revisit the JavaScript quotes site that returned 0 results with Guzzle. Create firecrawl_quotes.php:

<?php

require 'vendor/autoload.php';

use HelgeSverre\Firecrawl\FirecrawlClient;

// Load API key from .env file

$dotenv = Dotenv\Dotenv::createImmutable(__DIR__);

$dotenv->safeLoad();

$client = new FirecrawlClient(apiKey: $_ENV['FIRECRAWL_API_KEY'] ?? getenv('FIRECRAWL_API_KEY'));

The FirecrawlClient handles all API communication. The ?? operator falls back to getenv() when the key isn't in $_ENV.

try {

// Firecrawl's extract() method uses AI to find and structure data

$result = $client->extract(

options: [

'urls' => ['https://quotes.toscrape.com/js/'],

'prompt' => 'Extract all quotes with their authors and tags'

]

);

The extract() method is Firecrawl's AI-powered extraction. You provide:

- urls: An array of pages to process (can extract from multiple URLs at once)

- prompt: Plain English description of the data you want

$quotes = $result['data']['quotes'];

echo "Found " . count($quotes) . " quotes:\n\n";

foreach (array_slice($quotes, 0, 3) as $quote) {

echo "Quote: {$quote['quote']}\n";

echo "Author: {$quote['author']}\n";

echo "Tags: " . implode(', ', $quote['tags']) . "\n\n";

}

} catch (Exception $e) {

echo "Error: " . $e->getMessage() . "\n";

}

?>

The AI infers the data structure from your prompt; here it produced a quotes array with quote, author, and tags fields.

Here's the full code:

<?php

require 'vendor/autoload.php';

use HelgeSverre\Firecrawl\FirecrawlClient;

// Load API key from .env file

$dotenv = Dotenv\Dotenv::createImmutable(__DIR__);

$dotenv->safeLoad();

$client = new FirecrawlClient(apiKey: $_ENV['FIRECRAWL_API_KEY'] ?? getenv('FIRECRAWL_API_KEY'));

try {

// Firecrawl's extract() method uses AI to find and structure data

$result = $client->extract(

options: [

'urls' => ['https://quotes.toscrape.com/js/'],

'prompt' => 'Extract all quotes with their authors and tags'

]

);

$quotes = $result['data']['quotes'];

echo "Found " . count($quotes) . " quotes:\n\n";

foreach (array_slice($quotes, 0, 3) as $quote) {

echo "Quote: {$quote['quote']}\n";

echo "Author: {$quote['author']}\n";

echo "Tags: " . implode(', ', $quote['tags']) . "\n\n";

}

} catch (Exception $e) {

echo "Error: " . $e->getMessage() . "\n";

}

?>

Run it:

php firecrawl_quotes.php

Output:

Found 10 quotes:

Quote: The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.

Author: Albert Einstein

Tags: change, deep-thoughts, thinking, world

Quote: It is our choices, Harry, that show what we truly are, far more than our abilities.

Author: J.K. Rowling

Tags: abilities, choices

Quote: There are only two ways to live your life. One is as though nothing is a miracle. The other is as though everything is a miracle.

Author: Albert Einstein

Tags: inspirational, life, live, miracle, miracles

The same site that returned 0 quotes with Guzzle now returns clean, structured data.
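One caveat with prompt-based extraction: because the AI infers the structure, the keys (quotes, quote, author, tags) are not guaranteed to be identical on every run or page. A defensive sketch for reading them with fallbacks; firstOf is a hypothetical helper, the $result array is a hand-written sample shaped like the output above, and the alternative key names (text, name) are guesses rather than documented Firecrawl behavior. For fixed field names, the schema-based extraction covered later is the sturdier option.

```php
<?php
// Hypothetical helper: first non-empty value among several candidate keys, else $default
function firstOf(array $item, array $keys, $default = null)
{
    foreach ($keys as $key) {
        if (isset($item[$key]) && $item[$key] !== '' && $item[$key] !== []) {
            return $item[$key];
        }
    }
    return $default;
}

// Hand-written sample shaped like the extract() result above (not real API output)
$result = [
    'data' => [
        'quotes' => [
            ['quote' => 'Sample quote one.', 'author' => 'Author A', 'tags' => ['life', 'change']],
            ['text' => 'Sample quote two.', 'author' => 'Author B'], // different key, no tags
        ],
    ],
];

$quotes = $result['data']['quotes'] ?? [];
foreach ($quotes as $quote) {
    $text = firstOf($quote, ['quote', 'text'], '(no text)');
    $author = firstOf($quote, ['author', 'name'], 'Unknown');
    $tags = (array) firstOf($quote, ['tags'], []);
    echo "$text - $author" . ($tags ? ' [' . implode(', ', $tags) . ']' : '') . "\n";
}
```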

Real-world example: GitHub trending tracker

GitHub's trending page shows repositories gaining popularity. Here's how to extract trending repos for a daily newsletter or monitoring dashboard:

<?php

require 'vendor/autoload.php';

use HelgeSverre\Firecrawl\FirecrawlClient;

$dotenv = Dotenv\Dotenv::createImmutable(__DIR__);

$dotenv->safeLoad();

$client = new FirecrawlClient($_ENV['FIRECRAWL_API_KEY'] ?? getenv('FIRECRAWL_API_KEY'));

try {

echo "Extracting trending repositories...\n";

$result = $client->extract(

options: [

'urls' => ['https://github.com/trending'],

'prompt' => 'Find all trending repositories. For each, extract: repository name (like "owner/repo"), description, main language, total stars, and stars gained today.'

],

timeout: 240 // Complex pages need more processing time

);

$repositories = $result['data']['repositories'];

echo "Found " . count($repositories) . " trending repos\n\n";

foreach (array_slice($repositories, 0, 3) as $repo) {

echo "Repository: {$repo['full_name']}\n";

echo "Language: {$repo['main_language']}\n";

echo "Stars: {$repo['total_stars']} (+{$repo['stars_today']} today)\n";

echo "Description: {$repo['description']}\n\n";

}

// Save to timestamped JSON file for tracking trends over time

$filename = 'github_trending_' . date('Y-m-d') . '.json';

file_put_contents($filename, json_encode($repositories, JSON_PRETTY_PRINT));

echo "Saved all " . count($repositories) . " repos to {$filename}\n";

} catch (Exception $e) {

echo "Error: " . $e->getMessage() . "\n";

}

?>

The timeout parameter gives Firecrawl more time to process complex pages. More specific prompts (naming exact fields you want) produce more consistent results.

You can now:

- Run with cron for daily tracking (see automated web scraping for free in 2025)

- Filter for specific languages or star thresholds

- Send alerts via Slack/email (explore N8N web scraping workflows)
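As a sketch of the filtering idea, assuming the repositories array uses the field names from the example above (full_name, main_language, total_stars, stars_today) and that star counts may arrive either as numbers or as strings like "1,234". The sample data and the toInt helper are made up for illustration.

```php
<?php
// Made-up sample shaped like the $repositories array above
$repositories = [
    ['full_name' => 'acme/fast-parser', 'main_language' => 'PHP', 'total_stars' => '1,234', 'stars_today' => '56'],
    ['full_name' => 'acme/web-ui', 'main_language' => 'TypeScript', 'total_stars' => '9,876', 'stars_today' => '410'],
    ['full_name' => 'acme/tiny-lib', 'main_language' => 'PHP', 'total_stars' => 88, 'stars_today' => 3],
];

// Hypothetical helper: "1,234" -> 1234. Extraction may return numbers or strings;
// abbreviated counts such as "1.2k" are not handled.
function toInt($value): int
{
    return (int) str_replace(',', '', (string) $value);
}

$minStarsToday = 10;

// Keep PHP repositories that gained at least $minStarsToday stars today
$phpRepos = array_values(array_filter(
    $repositories,
    fn(array $repo): bool => strcasecmp($repo['main_language'] ?? '', 'PHP') === 0
        && toInt($repo['stars_today'] ?? 0) >= $minStarsToday
));

// Biggest gainers first
usort($phpRepos, fn(array $a, array $b): int => toInt($b['stars_today']) <=> toInt($a['stars_today']));

foreach ($phpRepos as $repo) {
    echo "{$repo['full_name']}: " . toInt($repo['total_stars']) . ' stars (+' . toInt($repo['stars_today']) . " today)\n";
}
```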

For a complete guide to all extraction options, parameters, and advanced techniques, check out our tutorial on mastering the Firecrawl scrape endpoint.

When do you need exact field names?

For production applications requiring precise field names and types, use schema-based extraction instead of prompts:

$result = $client->extract(

options: [

'urls' => ['https://example.com'],

'schema' => [

'type' => 'object',

'properties' => [

'products' => [

'type' => 'array',

'items' => [

'type' => 'object',

'properties' => [

'name' => ['type' => 'string'],

'price' => ['type' => 'number'],

'inStock' => ['type' => 'boolean']

]

]

]

]

]

]

);

Defining a schema pins down the field names and types, which makes the output far more consistent for database integration. Learn more in the Firecrawl PHP SDK documentation.

Ready to scrape JavaScript sites without headaches? Get your free Firecrawl API key and start building.

Next steps in PHP web scraping

You've learned how websites work, how to scrape static pages with Guzzle and Simple HTML DOM Parser, handle pagination, and use Firecrawl for JavaScript sites. These skills transfer to any scraping project: fetch HTML, find data, extract it.

When to use traditional tools vs. modern APIs

Use Guzzle and Simple HTML DOM Parser when:

- The site loads all content in the initial HTML (check "View Source")

- You're scraping small volumes (under 1,000 pages)

- You want to understand how scraping works

Use Firecrawl when:

- The site uses JavaScript to load content

- You need reliable handling of complex web infrastructure

- You need structured data without writing selectors

- Time to market matters more than per-request cost

| Feature | Guzzle + Simple HTML DOM | Symfony DomCrawler | Selenium PHP | Firecrawl API |
|---|---|---|---|---|
| JavaScript Sites | ❌ No | ❌ No | ✅ Yes | ✅ Yes |
| Complex Sites | ❌ No | ❌ No | ⚠️ Partial | ✅ Yes |
| Setup Time | 5 minutes | 5 minutes | 30+ minutes | 2 minutes |
| Maintenance | High | Medium | Very High | None |
| AI Extraction | ❌ No | ❌ No | ❌ No | ✅ Yes |
| Cost | Free | Free | Free + Server | Pay per use |
| Best For | Static sites | Robust parsing | Browser automation | Production JS sites |

Pick based on your project needs, not ideology.

Real-world practice projects

Local library book tracker: Scrape your library's catalog to check if books on your reading list are available. Send weekly email updates when books become available.

Recipe scaling calculator: Pull recipes from cooking sites and recalculate ingredients for different serving sizes. Most recipe sites are static HTML.

University course seat monitor: Track open seats in classes you want to take. Scrape every hour and send push notifications when seats open.

Podcast transcript collector: Scrape episode descriptions and guest info from podcast sites to build a searchable archive.

Local event calendar aggregator: Scrape events from multiple city sites (library, parks, community centers) into one calendar feed filtered by your interests.

Pick the one that solves a problem you actually have.

Legal and ethical considerations

Check robots.txt before scraping (learn about robots.txt from Google). Read the Terms of Service. Only scrape publicly available data and understand GDPR and CCPA requirements when handling personal information.

When in doubt, contact the site owner.
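To make the robots.txt check part of your script rather than a manual step, here is a deliberately simplified sketch. isPathAllowed is a hypothetical helper: it only reads the "User-agent: *" group and plain Allow/Disallow path prefixes (longest match wins, Allow wins ties), and it ignores wildcards, bot-specific groups, and Crawl-delay, so treat it as a starting point rather than a compliant parser. You would first fetch the site's /robots.txt with your HTTP client.

```php
<?php
// Hypothetical helper: simplified robots.txt check for the "User-agent: *" group only.
function isPathAllowed(string $robotsTxt, string $path): bool
{
    $rules = [];            // each rule: ['allow' => bool, 'prefix' => string]
    $inStarGroup = false;
    $previousWasAgent = false;

    foreach (preg_split('/\R/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line)); // drop comments
        if ($line === '' || strpos($line, ':') === false) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);

        if ($field === 'user-agent') {
            // Consecutive User-agent lines belong to the same group
            $inStarGroup = ($previousWasAgent && $inStarGroup) || $value === '*';
            $previousWasAgent = true;
            continue;
        }
        $previousWasAgent = false;

        // An empty Disallow means "allow everything", so it adds no rule
        if ($inStarGroup && ($field === 'allow' || $field === 'disallow') && $value !== '') {
            $rules[] = ['allow' => $field === 'allow', 'prefix' => $value];
        }
    }

    // The longest matching prefix wins; on a tie, Allow wins
    $best = null;
    foreach ($rules as $rule) {
        if (strpos($path, $rule['prefix']) !== 0) {
            continue;
        }
        $length = strlen($rule['prefix']);
        $bestLength = $best === null ? -1 : strlen($best['prefix']);
        if ($length > $bestLength || ($length === $bestLength && $rule['allow'])) {
            $best = $rule;
        }
    }

    return $best === null || $best['allow'];
}

$robots = "User-agent: *\nDisallow: /private/\nAllow: /private/public-page.html\n";
var_dump(isPathAllowed($robots, '/catalogue/page-1.html')); // bool(true)
var_dump(isPathAllowed($robots, '/private/data.html'));     // bool(false)
```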

Advanced topics in PHP web scraping

Advanced topics to explore:

- Concurrent requests: Speed up scraping with Guzzle's promises or Firecrawl's batch scraping

- Data storage: SQLite for simple projects, PostgreSQL for production

- Error handling: Build retries and logging so you know when scrapers break

- Proxy rotation: Use services like BrightData or a scraping API that handles it

Check out Firecrawl's Advanced Scraping Guide for more techniques.

Additional resources:

- Composer - PHP dependency manager for installing scraping libraries

- MDN HTML Structure Guide - Learn more about HTML structure and tags

Ready to build your first PHP web scraping project?

Pick one small project and build it this week. Start with a static site using the Guzzle approach from your first scraper. When you hit JavaScript or anti-bot measures, sign up for Firecrawl and try their API.

Build skills on simple sites first. The code you wrote in this guide works right now. When sites change, you'll know how to adapt.

Frequently asked questions

What's the best PHP library for beginners?

Simple HTML DOM Parser is the easiest starting point. It uses jQuery-like syntax, so $dom->find('.class') feels familiar if you've done any JavaScript. Pair it with Guzzle to handle HTTP requests cleanly, and together they cover most beginner needs. As you advance, look into Symfony's DomCrawler for better performance and XPath support.

Can PHP handle JavaScript-rendered websites?

Not with standard libraries. PHP has no built-in JavaScript engine, so it can't run the scripts in the HTML it receives. To scrape these sites, you need either a headless browser (complex and resource-heavy) or a scraping API like Firecrawl that renders JavaScript for you. Many modern sites fall into this category, which is why APIs have become popular.

Where should I store scraped data?

It depends on your data volume and query needs. CSV files work fine for small datasets you'll open in Excel. Use JSON when you need structure and your app consumes the data directly.

SQLite works well when you need a database but want to avoid server setup. For production apps with lots of data, move to MySQL or PostgreSQL. Start simple, upgrade when you need to.
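For the SQLite option, PHP's PDO extension is all you need. A minimal sketch, assuming the pdo_sqlite extension is enabled; the table layout and sample rows are illustrative. It uses an in-memory database so it runs anywhere; switch the DSN to something like sqlite:books.sqlite to keep the data in a file.

```php
<?php
// Illustrative rows in the same shape as the $allBooks array built earlier
$allBooks = [
    ['title' => 'A Light in the Attic', 'price' => '£51.77'],
    ['title' => 'Tipping the Velvet', 'price' => '£53.74'],
];

// 'sqlite::memory:' keeps the database in RAM; use 'sqlite:books.sqlite' to write a file
$pdo = new PDO('sqlite::memory:', null, null, [PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION]);
$pdo->exec('CREATE TABLE IF NOT EXISTS books (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT NOT NULL UNIQUE,
    price TEXT NOT NULL,
    scraped_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)');

// Prepared statement: safe with quotes in titles, and reused for every row.
// INSERT OR REPLACE overwrites the row with the same title when you re-run the scraper.
$insert = $pdo->prepare('INSERT OR REPLACE INTO books (title, price) VALUES (:title, :price)');

$pdo->beginTransaction(); // one transaction makes bulk inserts much faster
foreach ($allBooks as $book) {
    $insert->execute([':title' => $book['title'], ':price' => $book['price']]);
}
$pdo->commit();

$count = (int) $pdo->query('SELECT COUNT(*) FROM books')->fetchColumn();
echo "Stored $count books\n";
```

The UNIQUE constraint on title is what makes re-runs idempotent here; pick whichever column uniquely identifies an item on the site you scrape.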

Should I use cURL or Guzzle for PHP scraping?

Guzzle is the better choice for most projects. It has a cleaner API, follows redirects by default, can keep cookies across requests with a cookie jar (for example, new Client(['cookies' => true])), and integrates well with Composer. Use raw cURL when you need minimal dependencies or are working in an environment where you can't install packages.

How do I scrape multiple pages of a site efficiently?

For small jobs, loop through pages with sleep(1) between requests. For larger operations, consider curl_multi_exec() or ReactPHP for parallel requests - this can cut total time dramatically when you're hitting dozens of pages. Always respect the site's rate limits.
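Here is a minimal curl_multi sketch of the parallel approach. fetchAll is a hypothetical helper that sends every URL in the batch at once, so keep batches small and pause between them to stay within the site's rate limits.

```php
<?php
// Hypothetical helper: fetch several URLs in parallel with curl_multi.
// Returns [url => body or null]; null means no response or an HTTP status of 400+.
function fetchAll(array $urls, int $timeoutSeconds = 15): array
{
    $multi = curl_multi_init();
    $handles = [];

    foreach (array_unique($urls) as $url) {
        $ch = curl_init($url);
        curl_setopt_array($ch, [
            CURLOPT_RETURNTRANSFER => true,
            CURLOPT_FOLLOWLOCATION => true,
            CURLOPT_CONNECTTIMEOUT => $timeoutSeconds,
            CURLOPT_TIMEOUT        => $timeoutSeconds,
        ]);
        curl_multi_add_handle($multi, $ch);
        $handles[$url] = $ch;
    }

    // Drive all transfers until none are still running
    do {
        $status = curl_multi_exec($multi, $running);
        if ($running > 0) {
            curl_multi_select($multi, 1.0); // wait for network activity instead of busy-looping
        }
    } while ($running > 0 && $status === CURLM_OK);

    $results = [];
    foreach ($handles as $url => $ch) {
        $code = curl_getinfo($ch, CURLINFO_RESPONSE_CODE); // 0 if no response arrived
        $results[$url] = ($code > 0 && $code < 400) ? curl_multi_getcontent($ch) : null;
        curl_multi_remove_handle($multi, $ch);
    }
    curl_multi_close($multi);

    return $results;
}
```

For example, passing the first five catalogue page URLs fetches all five concurrently; add a sleep() between batches if you loop over many more.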

How do I handle errors and retries in a PHP scraper?

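Wrap your requests in a try/catch and retry with exponential backoff - start with a 2-second delay, then 4, then 8. In Guzzle 7, catch GuzzleHttp\Exception\RequestException for HTTP errors and GuzzleHttp\Exception\ConnectException for network failures such as timeouts (both extend GuzzleHttp\Exception\TransferException). Log failed URLs to a file so you can reprocess them without restarting the full scrape.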
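A minimal sketch of that retry loop. withRetries is a hypothetical helper, and it catches a plain Exception so it runs without dependencies; in a real Guzzle scraper you would narrow the catch to Guzzle's exception classes and usually retry only transient failures (timeouts, 5xx, 429) rather than a 404.

```php
<?php
// Hypothetical helper: call $request, retrying with exponential backoff on exceptions.
function withRetries(callable $request, int $maxAttempts = 4, int $baseDelayMs = 2000)
{
    for ($attempt = 1; ; $attempt++) {
        try {
            return $request($attempt);
        } catch (Exception $e) {
            if ($attempt >= $maxAttempts) {
                throw $e; // out of attempts: let the caller log the failed URL
            }
            // 2s, 4s, 8s, ... with the default base delay
            $delayMs = $baseDelayMs * (2 ** ($attempt - 1));
            error_log("Attempt $attempt failed ({$e->getMessage()}), retrying in {$delayMs} ms");
            usleep($delayMs * 1000);
        }
    }
}

// Usage with a simulated request that fails twice, then succeeds (delay set to 0 for the demo)
$calls = 0;
$html = withRetries(function (int $attempt) use (&$calls) {
    $calls++;
    if ($attempt < 3) {
        throw new RuntimeException('simulated timeout');
    }
    return '<html>ok</html>';
}, 4, 0);
echo "Succeeded after $calls attempts\n";
```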

When should I use Symfony DomCrawler instead of Simple HTML DOM?

Use Symfony DomCrawler when you need XPath support, better memory performance on large pages, or you're already in a Symfony/Laravel project. Simple HTML DOM is fine for quick scripts and smaller pages. DomCrawler is the more production-ready choice for anything running regularly.
