Finding the best AI resources means focusing on hands-on learning where you build real applications. This guide covers 12 of the best AI resources for learning AI engineering by getting your hands dirty. Instead of watching videos back to back, each resource gets you building chatbots, RAG systems, and AI agents with working code.

The resources are organized by skill level: 3 beginner, 6 intermediate, 3 advanced. Pick your level and you can build your first project within a week.

What's new for 2026: This edition adds a section on context engineering under prompt engineering - the emerging discipline that separates demos from production agents. We've also expanded the learning paths to reflect how the field has matured: evals, LLMOps, and structured output handling are no longer advanced topics; they're table stakes.

Resources for building chatbots, RAG systems, and AI agents

The 12 hands-on AI resources are organized by skill level:

- Beginner (3 resources): Start with Prompt Engineering Guide, Claude Cookbooks, and DeepLearning.AI courses

- Intermediate (6 resources): OpenAI Cookbook, Activeloop, W&B Academy, LlamaIndex, Vercel AI, and Firecrawl tutorials

- Advanced (3 resources): CrewAI, Decoding AI, and Neural Maze courses

- Time to first project: 1 week with beginner resources

- All resources are free (you pay only for API usage)

Quick start: Pick resource #1 from your skill level, build your first project this week, then progress sequentially.

Quick reference: Best AI resources at a glance (2026)

| Resource | Level | What You'll Build | Time | Best For |
|---|---|---|---|---|
| 1. Prompt Engineering Guide | Beginner | 18+ prompting techniques, templates, RAG strategies | 1-2 weeks | Foundation skill for all LLM work |
| 2. Claude Cookbooks | Beginner | Customer support agents, tool use, vision apps | 1-2 weeks | Learning both OpenAI + Anthropic APIs |
| 3. DeepLearning.AI | Beginner | LangChain apps, vector DB integration, agents | 2-4 weeks | Structured courses with expert instructors |
| 4. OpenAI Cookbook | Intermediate | RAG systems, multi-agent orchestration, GPT-4o apps | 3-4 weeks | Production-ready OpenAI patterns |
| 5. Activeloop GenAI | Intermediate | LangChain production, advanced RAG, LLMOps | 4-6 weeks | Complete RAG training |
| 6. Weights & Biases Academy | Intermediate | Production agents, evaluation pipelines, monitoring | 2-3 weeks | Real-world MLOps and LLMOps |
| 7. LlamaIndex Resources | Intermediate | SEC chatbots, ReAct agents, text-to-SQL | 3-4 weeks | Practical recipes with working code |
| 8. Vercel AI Academy | Intermediate | Production chatbots, streaming UI, TypeScript apps | 2-3 weeks | Web developers using TypeScript |
| 9. Firecrawl Tutorials | Intermediate-Advanced | Documentation agents, PDF RAG, multi-agent systems | 4-6 weeks | Web scraping + AI integration |
| 10. CrewAI Examples | Advanced | Multi-agent teams, workflow automation, integrations | 3-5 weeks | Rapid multi-agent prototyping |
| 11. Decoding AI Courses | Advanced | Production systems, LLM Twin, MCP workflows | 6-8 weeks | Software engineering best practices |
| 12. Neural Maze Courses | Advanced | WhatsApp agents, multimodal RAG, video processing | 6-8 weeks | Production deployment patterns |

What makes a great AI engineering resource

Before jumping into the resources, let's clarify what AI Engineering means and how we selected these 12 specific ones.

AI engineering vs machine learning

The resources in this guide focus on AI Engineering, not traditional machine learning or data science. AI Engineering means building applications with pre-trained LLMs like GPT-4 or Claude through APIs. You focus on prompt engineering, RAG systems, and AI agents. Traditional machine learning and deep learning focus on training models from scratch, building neural network architectures, and working with training datasets.

This distinction shapes the entire list. Every resource here teaches you to build applications by calling LLM APIs, not by training models. If you want to learn PyTorch or build CNNs, look elsewhere. If you want to ship LLM applications, keep reading.

How we curated this list

We built this list by testing resources ourselves, not by aggregating other lists. Each resource had to meet specific criteria.

First, hands-on projects. Watching tutorial videos or reading documentation doesn't teach you to build software. You learn by writing code that breaks, fixing it, and shipping something that works. Resources that just explain concepts without making you build didn't make the cut.

Second, active maintenance. We checked update dates, verified working code examples, and tested whether community forums were still active. AI engineering moves too fast for outdated resources.

Third, clear project outcomes. Vague promises about "understanding LLMs" weren't enough. We wanted resources that result in specific, shippable projects: a chatbot with memory, a RAG system over your docs, a multi-agent research assistant.

Fourth, free access. All 12 resources offer free tutorials and documentation. You only pay for API usage when running your applications.

We verified every resource builds with LLMs rather than training them, tested the code examples ourselves, and confirmed 2025-2026 updates.

A note on books: This list focuses on free, hands-on resources. If you prefer structured reading alongside the tutorials, two books are worth having nearby: AI Engineering by Chip Huyen (real-world engineering challenges, MLOps, inference) and The LLM Engineering Handbook by Paul Iusztin and Maxime Labonne (RAG, evals, LangChain, fine-tuning). Both complement the hands-on resources here rather than replacing them.

Beginner resources

1. Prompt engineering guide

Link: Prompt Engineering Guide

Everything in AI engineering starts with prompting. This guide teaches 18+ techniques including Chain-of-Thought for step-by-step reasoning, ReAct for combining thinking with actions, few-shot prompting with examples, and self-consistency for reliability. Each technique includes working examples you can test immediately.
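To show how few-shot prompting and a Chain-of-Thought cue fit together, here is a minimal sketch that assembles the prompt as plain text. The sentiment task, the example reviews, and the exact wording are illustrative assumptions, not templates taken from the guide or its Prompt Hub.

```python
# A minimal sketch: build a few-shot prompt with an optional Chain-of-Thought cue.
# Task, examples, and wording are hypothetical illustrations.

EXAMPLES = [
    ("The package arrived two weeks late and damaged.", "negative"),
    ("Setup took five minutes and it works perfectly.", "positive"),
]

def build_few_shot_prompt(examples, query, reasoning=True):
    """Return a prompt with labeled examples followed by the new input."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for text, label in examples:
        # Each example shows the model the exact input/output format we expect.
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    if reasoning:
        # Chain-of-Thought cue: ask for reasoning before the final label.
        lines.append("Think step by step, then give the final answer on the last line as 'Sentiment: <label>'.")
    else:
        lines.append("Sentiment:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(EXAMPLES, "Battery life is worse than advertised.")
print(prompt)
```

The resulting string can be sent to any chat or completion API; keeping the examples in a list makes it easy to swap them when you adapt a template to a new task.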

The Prompt Hub provides 100+ ready templates organized by task: classification, coding, creativity, mathematics, reasoning. Copy and adapt these instead of starting from scratch. The guide covers RAG prompting strategies and agent-building patterns that become important for intermediate work.

Learn these techniques once and apply them to GPT-5, Claude, Gemini, or any LLM. The guide includes Jupyter notebooks and gets regular updates with new research. Master prompting first because it forms the foundation for every other skill on this list.

Context engineering: the next layer up

Once you're comfortable with prompting, the natural next step in 2026 is context engineering - the discipline of designing what fills the entire context window, not just crafting a single instruction string. Prompt engineering is what you write inside the context window. Context engineering is how you decide what goes in there at all: memory, retrieved documents, tool definitions, conversation history, and yes, the prompt itself.

The distinction matters because research shows LLM accuracy degrades as context length grows - even on simple tasks. Dumping everything into a 2M-token window doesn't work. What works is curating the minimum set of high-signal tokens the model needs for the immediate task, structured and positioned deliberately.
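As a rough illustration of "minimum set of high-signal tokens", here is a sketch of greedy context assembly under a token budget. It assumes each candidate item already has a relevance score from some retriever, uses a crude 4-characters-per-token estimate, and applies one positioning heuristic (most relevant item last, nearest the question); all three are assumptions for illustration, not a method prescribed by the linked posts.

```python
from dataclasses import dataclass

@dataclass
class ContextItem:
    kind: str         # e.g. "memory", "document", "tool", "history"
    text: str
    relevance: float  # assumed to come from a retriever or scorer, in [0, 1]

def approx_tokens(text: str) -> int:
    # Rough heuristic of ~4 characters per token; real tokenizers will differ.
    return max(1, len(text) // 4)

def assemble_context(items, budget_tokens, min_relevance=0.3):
    """Greedily keep the most relevant items that fit within the token budget."""
    candidates = sorted(
        (item for item in items if item.relevance >= min_relevance),
        key=lambda item: item.relevance,
        reverse=True,
    )
    chosen, used = [], 0
    for item in candidates:
        cost = approx_tokens(item.text)
        if used + cost <= budget_tokens:
            chosen.append(item)
            used += cost
    # Positioning heuristic (an assumption): place the most relevant item last,
    # right before the user's question.
    chosen.reverse()
    return chosen, used

items = [
    ContextItem("document", "Refund policy: refunds are issued within 30 days of purchase.", 0.9),
    ContextItem("memory", "User is on the Pro plan.", 0.7),
    ContextItem("history", "User previously asked about shipping times.", 0.4),
    ContextItem("document", "Company history and founding story. " * 50, 0.2),
]
chosen, used = assemble_context(items, budget_tokens=100)
for item in chosen:
    print(item.kind, item.relevance)
```

The low-relevance "company history" blob is dropped even though a large window could hold it, which is the point: fewer, better tokens rather than everything available.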

Two resources go deep on this:

- Context Engineering vs Prompt Engineering for AI Agents - covers the four ways context fails (poisoning, distraction, confusion, clash), the research behind context rot, and practical techniques for production systems

- Context Layer for AI Agents - focuses on automating context ingestion, separating static decision context from dynamic operational context, and building self-healing pipelines

Think of prompt engineering as a prerequisite and context engineering as the production-grade version of that skill.

2. Claude cookbooks

Link: Claude Cookbooks

Jupyter notebooks with copy-paste code you can integrate directly into your projects. Learning both OpenAI and Anthropic APIs early matters because Claude excels at long context windows and nuanced reasoning, while GPT-5 handles highly structured tasks better.

The cookbooks cover text classification systems, RAG implementations that answer questions from your documents, customer service agents, SQL query generators that convert natural language to database queries, and vision applications like chart reading and form extraction. Advanced patterns include sub-agent architectures where multiple agents work together, PDF processing pipelines, and prompt caching techniques that reduce API costs.
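To make the tool-use pattern concrete, here is a minimal sketch of the client side of a tool call. It assumes tool definitions in the shape the Anthropic Messages API uses (`name`, `description`, `input_schema`) and plain dicts standing in for the response's `tool_use` content blocks; the real SDK returns typed objects, so you would use attribute access instead, and no API call is made here. The `get_order_status` tool and its order store are hypothetical.

```python
import json

# Tool definitions in the shape passed as `tools` to the Messages API:
# a name, a description, and a JSON Schema under "input_schema".
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order by its ID.",
        "input_schema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]

# Hypothetical local implementation backed by a fake in-memory store.
FAKE_ORDERS = {"A100": "shipped", "A101": "processing"}

def get_order_status(order_id):
    return FAKE_ORDERS.get(order_id, "not found")

HANDLERS = {"get_order_status": get_order_status}

def run_tool_calls(content_blocks):
    """Execute every tool_use block and build the tool_result blocks to send back."""
    results = []
    for block in content_blocks:
        if block.get("type") != "tool_use":
            continue  # skip plain text blocks
        handler = HANDLERS.get(block["name"])
        if handler is None:
            output, is_error = f"Unknown tool: {block['name']}", True
        else:
            output, is_error = json.dumps({"status": handler(**block["input"])}), False
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],  # links the result to the model's request
            "content": output,
            "is_error": is_error,
        })
    return results

# Simulated assistant turn that asks for a tool call.
response_content = [
    {"type": "text", "text": "Let me check that order."},
    {"type": "tool_use", "id": "toolu_01", "name": "get_order_status", "input": {"order_id": "A100"}},
]
print(run_tool_calls(response_content))
```

In a real loop, the returned `tool_result` blocks go back to the model in the next user message so it can compose its final answer.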

Each notebook includes complete prompts, API calls, error handling, and expected outputs. Having both providers in your toolkit makes you versatile when different projects demand different strengths.

3. DeepLearning.AI short courses

Link: DeepLearning.AI Short Courses

Andrew Ng's platform offers 88 short courses, with 61 focused on GenAI applications rather than traditional machine learning. Each course runs 1-2 hours with hands-on Jupyter notebooks where you write code alongside instructors from OpenAI, LangChain, and Anthropic.

Start with "ChatGPT Prompt Engineering for Developers" to build your foundation, then "LangChain for LLM Application Development" where LangChain creator Harrison Chase teaches the industry-standard framework. "LangChain: Chat with Your Data" covers RAG fundamentals and vector database integration. "Multi AI Agent Systems with crewAI" teaches workflow automation with multiple agents. Andrew Ng's "Agentic AI" covers building systems that take action through iterative, multi-step workflows.

Intermediate resources

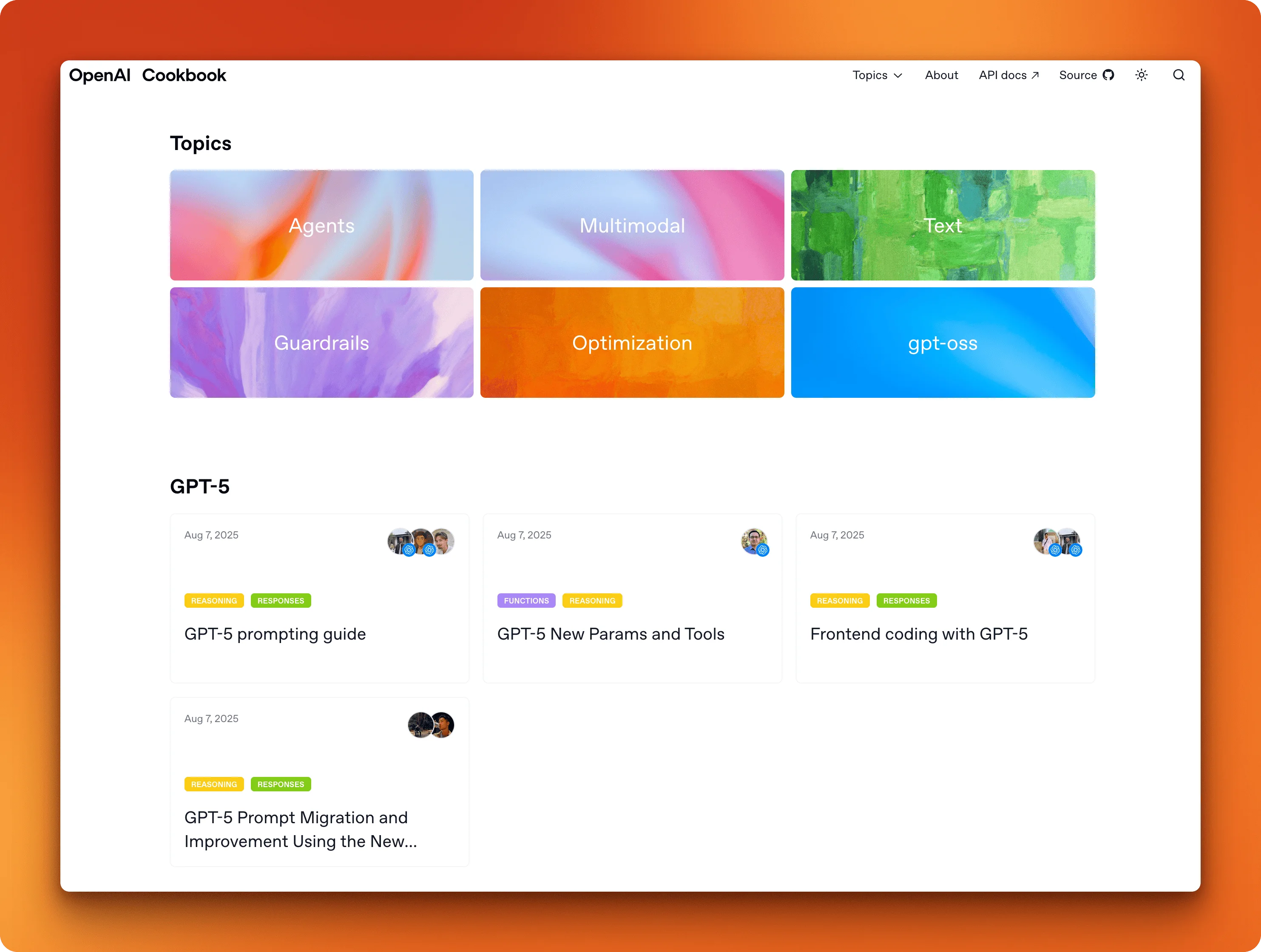

4. OpenAI cookbook

Link: OpenAI Cookbook

Production-ready code examples for building RAG systems with vector databases, multi-agent orchestration, and function calling workflows that connect LLMs to external tools. Each cookbook includes complete Python and JavaScript code, not just conceptual explanations.

Start with GPT-4o tutorials and structured outputs that guarantee valid JSON, then build semantic search with embeddings or chatbots with conversation memory. October 2025 additions cover AgentKit walkthroughs for autonomous agents, eval-driven system design, and GPT-5 prompting techniques. The examples integrate production tools like Pinecone for vector search, Chroma as an embedding store, and MongoDB Atlas.
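Semantic search boils down to ranking stored vectors by similarity to a query vector. In the cookbook the vectors come from an embeddings model and live in a store like Pinecone or Chroma; this sketch uses hand-made 3-dimensional toy vectors (an assumption, since real embeddings have hundreds or thousands of dimensions) to show only the cosine-similarity ranking step.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=2):
    """Return the k document IDs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy "embeddings" for three documents (hypothetical values).
DOCS = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # pretend embedding of "How do I get my money back?"
print(top_k(query, DOCS, k=2))
```

Vector databases do the same ranking with approximate nearest-neighbor indexes so it stays fast at millions of documents.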

OpenAI updates this monthly with new API features. You'll learn multimodal patterns for processing vision and audio input, plus agent handoff patterns where specialized agents collaborate on complex tasks.

You can also check out our detailed guide on OpenAI's predicted outputs for faster LLM responses.

5. Activeloop GenAI courses

Link: Activeloop GenAI Courses

This platform offers multiple free courses on LLMs and GenAI applications. "LangChain & Vector Databases in Production" covers 68 lessons on building with LangChain and Deep Lake vector databases. "Advanced RAG with LangChain & LlamaIndex" delivers 35 lessons on production retrieval systems. "Building AI Search: Multi-Modal RAG, RAFT, & GraphRAG" teaches 27 lessons on advanced search techniques.

The GenAI360 Foundation Model Certification provides hands-on LLMOps training covering cloud deployment, efficient scaling, and benchmarking. You'll learn to train, fine-tune, and productize LLMs through industry-specific projects. The certification is backed by Activeloop, Towards AI, and the Intel Disruptor Initiative.

Courses include working code examples and real-world applications. The platform partners with AWS and Lambda for infrastructure training, making these skills production-ready.

6. Weights & Biases AI academy

Link: Weights & Biases AI Academy

Free 2-3 hour courses taught by engineers from OpenAI, Google, and other leading AI companies. "AI Engineering: Agents" shows you how to build production-grade agents with reasoning models. "LLM apps: Evaluation" teaches building and scaling evaluation pipelines. "RAG++: From POC to production" covers production-ready RAG techniques from industry experts.

Additional courses include "LLM engineering: Structured outputs" for reliable JSON handling, "Building LLM-powered apps" with LangChain and monitoring tools, and "Developer's guide to LLM prompting" for system prompts and techniques. Each course focuses on practical implementation with working code.
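Reliable JSON handling usually means validating model output and retrying with the error message when it fails. Some APIs, such as OpenAI's structured outputs, can enforce a schema server-side; this sketch shows the client-side fallback for when that guarantee isn't available. The ticket schema and the `generate(feedback)` callable are hypothetical stand-ins for your own schema and model call.

```python
import json
from dataclasses import dataclass

@dataclass
class Ticket:
    category: str
    priority: int

ALLOWED_CATEGORIES = {"billing", "bug", "feature_request"}

def parse_ticket(raw: str) -> Ticket:
    """Parse and validate model output; raise ValueError with a reason on failure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    category, priority = data.get("category"), data.get("priority")
    if category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(priority, int) or isinstance(priority, bool) or not 1 <= priority <= 3:
        raise ValueError(f"priority must be an integer 1-3, got {priority!r}")
    return Ticket(category, priority)

def parse_with_retries(generate, max_attempts=3):
    """Call generate(feedback) until its output validates, feeding the last error back."""
    feedback = None
    for _ in range(max_attempts):
        raw = generate(feedback)
        try:
            return parse_ticket(raw)
        except ValueError as exc:
            feedback = str(exc)
    raise ValueError(f"no valid output after {max_attempts} attempts: {feedback}")

# Fake model: first answer has the wrong priority type, second one is valid.
outputs = iter(['{"category": "billing", "priority": "high"}', '{"category": "billing", "priority": 2}'])
ticket = parse_with_retries(lambda feedback: next(outputs))
print(ticket)  # Ticket(category='billing', priority=2)
```

Passing the validation error back as feedback gives the model a concrete reason to correct itself instead of repeating the same mistake.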

The academy emphasizes real-world MLOps and LLMOps challenges. You'll learn tracking, monitoring, and evaluation frameworks that companies use in production.

Why evals matter in 2026: As open-source models (Llama 3, DeepSeek, Qwen) become viable alternatives to proprietary APIs, evaluation skills become the deciding factor in model selection. Being able to run systematic evals - not just vibes-testing in a chat UI - is what lets you make defensible decisions about which model to use for a given task. W&B's evaluation courses are the fastest free path to that skill.
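Here is what "systematic evals" can look like at its smallest: a fixed test set, a scoring rule, and the same harness run against each candidate. The test cases and the two stand-in "models" are hypothetical; in practice each model function would call a different LLM, and exact-match scoring would often be replaced by a rubric or LLM-as-judge.

```python
from typing import Callable

# Hypothetical test set: inputs paired with the expected label.
TEST_CASES = [
    {"input": "I was charged twice this month.", "expected": "billing"},
    {"input": "The app crashes when I upload a photo.", "expected": "bug"},
    {"input": "Please add dark mode.", "expected": "feature_request"},
    {"input": "My invoice shows the wrong address.", "expected": "billing"},
]

def evaluate(model: Callable[[str], str], cases) -> dict:
    """Run every case through `model` and report exact-match accuracy plus failures."""
    failures = []
    for case in cases:
        output = model(case["input"]).strip().lower()
        if output != case["expected"]:
            failures.append({"input": case["input"], "expected": case["expected"], "got": output})
    passed = len(cases) - len(failures)
    return {"accuracy": passed / len(cases), "failures": failures}

# Stand-ins for two candidate models.
def keyword_model(text):
    lowered = text.lower()
    if "charged" in lowered or "invoice" in lowered:
        return "billing"
    if "crash" in lowered:
        return "bug"
    return "feature_request"

def always_billing(text):
    return "billing"

for name, model in [("keyword_model", keyword_model), ("always_billing", always_billing)]:
    report = evaluate(model, TEST_CASES)
    print(name, report["accuracy"], len(report["failures"]))
```

Keeping the failures, not just the score, is what makes the comparison defensible: you can see exactly which inputs each model gets wrong.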

7. LlamaIndex developer resources

Link: LlamaIndex Developer Resources

This platform offers 70+ practical recipes with working GitHub code. Build an SEC chatbot that answers questions from financial filings, construct multi-agent report generation systems, or create document processing workflows with metadata extraction. Each recipe includes complete code you can run immediately.

The tutorials cover RAG systems with reranking and citations, ReAct agents that combine reasoning and actions, text-to-SQL query engines, and knowledge graph retrieval. You'll work with document parsing, ingestion pipelines, and semantic search across both Python and TypeScript. The platform integrates with OpenAI, Anthropic Claude, Mistral AI, and AWS Bedrock.
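One detail every text-to-SQL engine needs is a guard between the model's generated query and the database. LlamaIndex provides its own query engines; this is not that API, just a framework-free sketch using Python's built-in `sqlite3`. The `filings` table, its rows, and the "generated" query are hypothetical, and the string checks are deliberately simple (they would reject a `;` inside a string literal or a `WITH` query).

```python
import sqlite3

def run_generated_sql(conn, sql):
    """Execute model-generated SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT queries are allowed")
    # For a stronger guarantee, open the database read-only instead, e.g.
    # sqlite3.connect("file:app.db?mode=ro", uri=True).
    return conn.execute(statement).fetchall()

# Hypothetical in-memory table of filings data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE filings (company TEXT, year INTEGER, revenue_musd REAL)")
conn.executemany(
    "INSERT INTO filings VALUES (?, ?, ?)",
    [("Acme", 2023, 120.5), ("Acme", 2024, 140.0), ("Globex", 2024, 98.2)],
)

# Pretend an LLM translated "What was Acme's revenue in 2024?" into this query.
generated = "SELECT revenue_musd FROM filings WHERE company = 'Acme' AND year = 2024;"
print(run_generated_sql(conn, generated))  # [(140.0,)]
```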

These are practical guides that show you how to build specific applications. Filter recipes by complexity and use case to find exactly what you need to build next.

8. Vercel AI academy

Link: Vercel AI Academy

TypeScript developers building web applications need this course. You'll construct a production chatbot in five phases: basic streaming, structured outputs, tool calling with external APIs, system prompts for personality, and generative UI components. Each phase builds working features you can deploy.

The curriculum covers data extraction with schema validation, classification systems for batch-processing support tickets, summarization with server actions, and smart form parsers. You'll work with React and Next.js, though the AI SDK works with any TypeScript project. The course teaches streaming responses, error handling, and integration with real-world APIs like weather services.

This helps if you're building web applications rather than Python scripts. The AI SDK is free and open-source.

9. Firecrawl blog & tutorials

Link: Firecrawl Blog & Tutorials

The Firecrawl blog showcases production-ready implementations combining web scraping with AI. You get complete, deployable code covering patterns absent from typical documentation.

Five tutorials stand out for intermediate to advanced learning:

- Turn Any Documentation Site Into an AI Agent with LangGraph and Firecrawl - Agentic RAG with streaming, memory, and deployment

- Building Multi-Agent Systems With CrewAI - Role-based agent architecture with specialized workers

- Building a PDF RAG System with LangFlow and Firecrawl - Document processing with vector storage

- Building AI Agents with OpenAI Agent Builders & Firecrawl - Multi-step workflows using no-code tools

- Building a Trend Detection System with AI in TypeScript - Real-time monitoring with Slack integration

These tutorials bridge the gap between toy examples and production systems, teaching the data collection strategies that most AI projects require.

Read More: How Credal Extracts 6M+ URLs Monthly to Power Production AI Agents with Firecrawl

Advanced resources

10. CrewAI examples

Link: CrewAI Examples

This GitHub repository offers production-ready examples organized into four categories: Crews for traditional multi-agent systems, Flows for advanced orchestration, Integrations with external frameworks, and Jupyter notebooks for experimentation.

The Crews section provides real use cases you can adapt directly: content generation pipelines, financial analysis systems, recruitment automation, and travel planning agents. The Flows category addresses more complex scenarios with state management, including iterative content refinement, automated email responses, and improvement loops.
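The core idea behind a crew is role-based handoff: each agent has a role and goal, and its output becomes the next agent's input. This is not CrewAI's API; it's a framework-free sketch of a sequential crew, with hypothetical roles and lambdas standing in for role-specific LLM calls.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    role: str
    goal: str
    act: Callable[[str], str]  # stand-in for an LLM call with a role-specific prompt

def run_sequential(agents, task):
    """Pass each agent's output to the next agent, recording a trace of handoffs."""
    trace, payload = [], task
    for agent in agents:
        payload = agent.act(payload)
        trace.append((agent.role, payload))
    return payload, trace

# Hypothetical three-agent content pipeline.
researcher = Agent("researcher", "collect facts", lambda t: f"notes on: {t}")
writer = Agent("writer", "draft an article", lambda notes: f"draft based on ({notes})")
editor = Agent("editor", "tighten the draft", lambda draft: draft.replace("draft", "final article", 1))

result, trace = run_sequential([researcher, writer, editor], "vector databases")
print(result)
```

The trace is what you'd log or inspect when a pipeline goes wrong; Flows add branching and shared state on top of this linear shape.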

Unlike simplified tutorials, every example demonstrates proper error handling and shows integration with APIs, databases, and external services. You'll find production-ready patterns for rate limiting, failure recovery, and other real-world concerns you'll encounter when shipping code.
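Rate limiting and failure recovery often come down to retrying transient errors with exponential backoff. This sketch is generic Python, not code from the CrewAI repository; `TransientError` is a hypothetical stand-in for whatever rate-limit or timeout exception your client raises, and `sleep` is injectable so the example runs instantly.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical error type for retryable failures such as rate limits or timeouts."""

def call_with_retries(fn, max_attempts=5, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Retry fn() on TransientError with exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Delay ceiling doubles each attempt (0.5s, 1s, 2s, ...), capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))

# A flaky call that fails twice before succeeding.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("429 Too Many Requests")
    return "ok"

print(call_with_retries(flaky, sleep=lambda seconds: None))  # ok
```

Random jitter spreads retries from many concurrent agents over time, so they don't all hit the API again at the same instant.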

11. Decoding AI OpenSource courses

Link: Decoding AI OpenSource Courses

Decoding AI offers six free courses on building production-grade systems with professional software engineering practices. You move beyond Jupyter notebooks to learn clean, modular code that scales.

PhiloAgents teaches AI agent simulation across six modules covering agent concepts, RAG, and LLM applications (created with Neural Maze). LLM Twin shows how to design and deploy a production-ready personal AI replica with modular architecture. Enterprise MCP Systems covers workflow automation using the Model Context Protocol, including a project that analyzes GitHub PRs and posts results to Slack.

These courses emphasize software engineering fundamentals: proper code structure, dataset preprocessing, and deployment with Kubernetes. You'll learn practices that distinguish production systems from notebook experiments.

12. Neural Maze courses

Link: Neural Maze Courses

Neural Maze provides four progressive courses moving from agent fundamentals to advanced multimodal systems. Each course builds on the previous one, taking you from core patterns to production implementations you can deploy.

Agent Design Patterns teaches reflection, tool use, ReAct planning, and multi-agent systems using open-source code. Ava shows you how to build a WhatsApp agent with LangGraph workflows, memory systems, and multimodal message processing. PhiloAgents focuses on production RAG with LLMOps practices that go beyond prototype code. The final course, Kubrick, tackles multimodal RAG for video processing with full observability, prompt versioning, and monitoring. For a production-ready messaging agent with Firecrawl web access out of the box, see our OpenClaw guide.

Each course blends written explanations, video walkthroughs, and deployable code you can adapt for your own projects.
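ReAct planning, one of the patterns in Agent Design Patterns, alternates Thought, Action, and Observation until the model produces a final answer. This is not Neural Maze's code; it's a framework-free sketch that uses a common `Action: tool[input]` text convention (an assumption) and a scripted stand-in for the LLM so it runs offline. The `lookup` tool is hypothetical.

```python
import re

# Hypothetical tool: a tiny lookup table instead of a real search API.
TOOLS = {
    "lookup": lambda query: {"capital of france": "Paris"}.get(query.lower(), "no result"),
}

ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)
FINAL_RE = re.compile(r"^Final Answer:\s*(.*)$", re.MULTILINE)

def react(llm, question, max_steps=5):
    """Run the Thought -> Action -> Observation loop until a final answer or step limit."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip(), transcript
        action = ACTION_RE.search(step)
        if not action:
            transcript += "Observation: could not parse an action\n"
            continue
        tool_name, tool_input = action.group(1), action.group(2)
        tool = TOOLS.get(tool_name)
        observation = tool(tool_input) if tool else f"unknown tool {tool_name}"
        transcript += f"Observation: {observation}\n"
    return None, transcript  # gave up after max_steps

def scripted_llm(responses):
    """Stand-in for a model: returns canned responses in order, ignoring the transcript."""
    it = iter(responses)
    return lambda transcript: next(it)

llm = scripted_llm([
    "Thought: I should look this up.\nAction: lookup[capital of France]",
    "Thought: The observation answers the question.\nFinal Answer: Paris",
])
answer, transcript = react(llm, "What is the capital of France?")
print(answer)  # Paris
```

The step limit matters in production: without it, a model that never emits a final answer would loop (and bill) indefinitely.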

How to choose your learning path

You don't have to work through these resources sequentially from 1 to 12. Your path depends on what you want to build. Pick your focus area and prioritize the resources that get you there fastest.

Building RAG and search systems

Most AI engineering jobs involve retrieval-augmented generation. You're building systems that answer questions from company docs, search through knowledge bases, or power chatbots with real-time information.

Start with the Prompt Engineering Guide (#1) to learn retrieval patterns, then move to the OpenAI Cookbook (#4) for RAG implementation basics. The Activeloop courses (#5) give you the deepest RAG-specific training available for free, covering chunking strategies and retrieval optimization. LlamaIndex resources (#7) teach you production indexing patterns. Finish with Firecrawl tutorials (#9) to handle the web scraping and data collection that most RAG systems need.
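Chunking is the first RAG decision you'll tune. This sketch splits text into fixed-size, overlapping chunks, using words as a stand-in for tokens (an assumption; production pipelines often split by tokens, sentences, or document structure). The sizes are illustrative defaults, not recommendations from the Activeloop courses.

```python
def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into chunks of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

text = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(text, chunk_size=4, overlap=1):
    print(chunk)
```

The overlap keeps a sentence that straddles a boundary retrievable from either side, at the cost of storing some words twice.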

Building AI agents

Agents autonomously take actions, make decisions, and orchestrate workflows. They're harder to build than RAG systems but open more possibilities.

Start with Claude Cookbooks (#2) to learn tool use and function calling patterns that let LLMs interact with external systems. DeepLearning.AI courses (#3) cover multi-agent architectures. CrewAI examples (#10) teach rapid prototyping with role-based agent teams. Decoding AI courses (#11) show you how to structure production-grade agent code with proper error handling and state management.

Building production web applications

If you're integrating LLM features into user-facing products, you need different skills than notebook-focused developers.

Start with Prompt Engineering Guide (#1) for foundation work. Vercel AI Academy (#8) teaches TypeScript-first development with streaming responses and generative UI components. Weights & Biases courses (#6) cover the monitoring and evaluation pipelines you need before deploying to users. Decoding AI courses (#11) handle deployment concerns like Kubernetes and scaling.

Complete beginner path

Not sure which specialization you want yet? Start with the three beginner resources in order: Prompt Engineering Guide (#1), Claude Cookbooks (#2), and DeepLearning.AI courses (#3). Build a basic chatbot with each one. After six weeks of hands-on work, you'll know whether you're more interested in RAG systems, agents, or web integration.

Most developers eventually need skills across all three areas. Start with one focus, build something that works, then expand to the others. Revisit earlier resources when you're ready to go deeper.

Ready to improve your AI engineering skills?

Pick one resource from your skill level and start this week. You don't need all 12 to ship something useful. Most developers build production apps after mastering 3-4 resources.

Start with the beginner resources if you're new, intermediate if you have basic knowledge, and advanced if you're building for production. Build projects while you learn instead of just reading. Your first chatbot won't be perfect. Your first RAG system will have issues. Ship them anyway. Real usage teaches more than tutorials.

One more thing specific to 2026: after you get comfortable prompting, invest time in context engineering. The gap between a demo that works once and an agent that works reliably in production almost always comes down to context - what goes into the window, in what order, and how fresh it is. The two Firecrawl posts linked in the prompt engineering section (context engineering and context layer for agents) are a good starting point.

Frequently Asked Questions

What's the difference between AI engineering and machine learning?

AI Engineering builds applications using pre-trained LLMs through APIs like OpenAI or Anthropic. Machine learning trains models from scratch with backpropagation and neural network architectures. If you want to ship chatbots and RAG systems, focus on AI Engineering. If you want to research model architectures, focus on ML.

What's the best resource for complete beginners?

Start with the Prompt Engineering Guide to learn the core skill. Follow with Claude Cookbooks for hands-on API work. Then take DeepLearning.AI short courses for structured learning. All three are free. Work through them sequentially and build a small project with each one before moving forward.

How long does it take to learn AI engineering?

You can build a basic chatbot in your first week with the beginner resources. Production-ready RAG systems take 2-3 months of focused work. Complex multi-agent systems take 4-6 months. The timeline depends on your programming experience and how much time you spend building actual projects versus just reading documentation.

Should I learn machine learning before AI engineering?

No. They're different skill sets. ML background helps understand concepts but isn't required to build LLM applications. Start directly with AI Engineering if you want to ship products. You'll learn by calling APIs and building applications, not by studying gradient descent or training neural networks.

Are these resources free?

Yes. All 12 resources offer free tutorials, documentation, and courses. You won't pay for the learning materials. You will pay for LLM API usage when running your applications: OpenAI and Anthropic charge by the token, with separate rates for input and output. Most providers offer free trial credits to get started.
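To budget API usage, multiply token counts by per-token prices, which providers typically quote per million tokens and set separately for input and output. The prices and traffic numbers below are hypothetical placeholders; check your provider's pricing page for real rates.

```python
def estimate_cost_usd(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost = tokens / 1,000,000 * price per million tokens, summed over input and output."""
    return (input_tokens / 1_000_000) * input_price_per_m + (output_tokens / 1_000_000) * output_price_per_m

# Hypothetical prices in USD per million tokens.
INPUT_PRICE, OUTPUT_PRICE = 2.00, 8.00

# Hypothetical RAG chatbot: 1,000 requests a day for 30 days, ~3,000 input tokens
# (prompt + retrieved context) and ~300 output tokens per request.
requests = 1_000 * 30
monthly = estimate_cost_usd(requests * 3_000, requests * 300, INPUT_PRICE, OUTPUT_PRICE)
print(f"${monthly:,.2f}")  # $252.00
```

In this example, input tokens account for most of the bill, which is one more reason trimming retrieved context pays off.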
