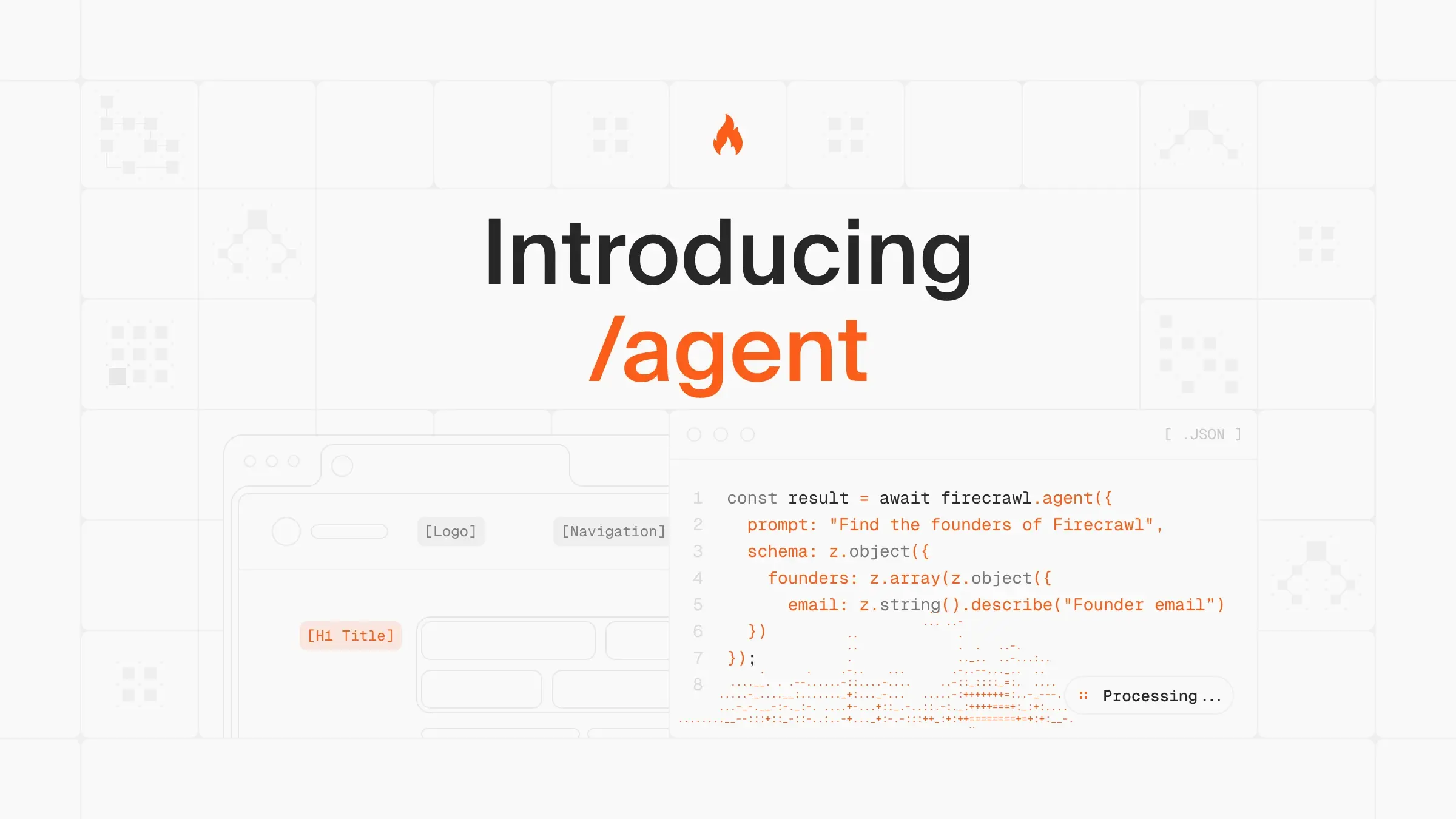

Firecrawl /agent searches, navigates, and gathers data from even the most complex websites, finding information in hard-to-reach places and discovering it anywhere on the internet.

It accomplishes in a few minutes what would take a human many hours. /agent finds and extracts your data, wherever it lives on the web.

The problem: data lives in hard-to-reach places

Building lead lists? Contact information is buried across company sites, team pages, and press releases.

Researching pricing? Competitors hide their pricing behind multi-page flows, gated content, or dynamic tables.

Curating datasets? The data you need is scattered: research papers across publishers, company information across databases, product specs across e-commerce sites.

Traditional web scraping requires you to map every site structure, write custom code for each page type, and maintain brittle scripts when layouts change. It's slow, expensive, and breaks constantly.

You need a better way to gather data at scale.

How /agent works

Describe what data you want to extract and /agent handles the rest.

```python
from firecrawl import FirecrawlApp
from pydantic import BaseModel, Field
from typing import List, Optional

app = FirecrawlApp(api_key="fc-YOUR_API_KEY")


# Shape of each record you want back
class Company(BaseModel):
    name: str = Field(description="Company name")
    contact_email: Optional[str] = Field(None, description="Contact email")
    employee_count: Optional[str] = Field(None, description="Number of employees")


# Top-level schema: a list of Company records
class CompaniesSchema(BaseModel):
    companies: List[Company] = Field(description="List of companies")


# A prompt plus a schema is all /agent needs; no URLs are passed here
result = app.agent(
    prompt="Find YC W24 dev tool companies and get their contact info and team size",
    schema=CompaniesSchema,
)

print(result.data)
```

No URLs required. No site mapping. No custom scripts. Agent figures out how to navigate sites, click through pages, handle dynamic content, and extract exactly what you need.

Want to provide starting points? URLs are optional:

```python
# Reuses the `app` client created in the previous example
result = app.agent(
    urls=["https://stripe.com/pricing", "https://square.com/pricing"],
    prompt="Compare pricing tiers and features",
)
```

Agent searches the web, navigates complex sites, clicks through multi-page flows, handles pagination, and returns structured data. Whether you need one data point or thousands, it scales.

Research preview: expect improvements

We're launching /agent in research preview. This means:

- Expect kinks and edge cases

- Performance will improve significantly over time

- We're actively gathering feedback and iterating

Think of this as the early version of something that will get much better. We're committed to making /agent the best way to extract web data at scale.

What /agent can do

Find information in hard-to-reach places: Agent clicks through authentication flows, navigates nested menus, handles dropdowns, and explores multi-step processes to find your data.

Discover information across the web: Searches for companies, people, products, or research. Visits multiple sites, cross-references data, and structures everything into clean JSON.

Scale from one to thousands: Extract a single contact email or curate entire datasets of companies, research papers, or products. Agent adapts.

Navigate like a human: Clicks buttons, follows links, handles pagination, explores sub-pages. Interacts with sites the way a person would, but faster.

Real-world use cases

Lead generation and company research

"Find all YC W24 dev tool companies, their founders, contact emails, and employee count"

Agent searches for YC W24 companies, filters for dev tools, visits each company site, finds founder and contact information, checks team pages for headcount. Returns structured data ready for your CRM.
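Getting from the agent's output to a CRM import usually means flattening it into rows. The sketch below assumes `result.data` arrives as a plain dict shaped like the `CompaniesSchema` from the earlier example (a `companies` list whose items have `name`, `contact_email`, and `employee_count`). If your SDK version hands back a Pydantic model instead, convert it to a dict first (for example with `.model_dump()` in Pydantic v2). The function name `companies_to_csv` and the sample records are hypothetical.

```python
import csv
import io

# Columns mirror the (assumed) Company model from the earlier example
FIELDS = ["name", "contact_email", "employee_count"]


def companies_to_csv(data: dict) -> str:
    """Flatten {"companies": [...]} into CSV text suitable for a CRM import.

    Optional fields the agent could not find (None or missing) become empty cells.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for company in data.get("companies", []):
        writer.writerow({key: company.get(key) or "" for key in FIELDS})
    return buffer.getvalue()


# Made-up sample in the assumed shape
sample = {
    "companies": [
        {"name": "Acme Dev", "contact_email": "hi@acme.dev", "employee_count": "12"},
        {"name": "Toolsmith", "contact_email": None},
    ]
}
print(companies_to_csv(sample))
```

Restricting each row to `FIELDS` keeps the CSV columns stable even if the agent returns extra keys.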

Competitive pricing intelligence

"Compare pricing tiers and features across Stripe, Square, and PayPal"

Agent visits each pricing page, navigates through tier details, extracts features and costs, and handles different page layouts. Returns a unified pricing comparison.

Product research and e-commerce

"Get all running shoes from Nike.com under $150 with customer ratings"

Agent navigates Nike's catalog, filters by category and price, clicks into product pages, extracts specs and ratings, handles pagination across hundreds of products.
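Because /agent is in research preview, it is worth checking that returned records actually satisfy the prompt's constraints before you rely on them. This is a minimal sketch, assuming each product comes back as a dict with hypothetical `name`, `price` (a number in USD), and `rating` fields. The real field names depend entirely on the schema you pass.

```python
from typing import Iterable, List, Tuple


def check_products(products: Iterable[dict], max_price: float = 150.0) -> Tuple[List[dict], List[dict]]:
    """Split agent output into records that meet the prompt's constraints and records that don't.

    Assumed record shape: {"name": str, "price": float or None, "rating": float or None}.
    """
    accepted, rejected = [], []
    for product in products:
        price = product.get("price")
        rating = product.get("rating")
        # "under $150 with customer ratings": strict price bound, rating must be present
        if price is not None and price < max_price and rating is not None:
            accepted.append(product)
        else:
            rejected.append(product)
    return accepted, rejected


ok, bad = check_products([
    {"name": "Shoe A", "price": 120.0, "rating": 4.5},
    {"name": "Shoe B", "price": 180.0, "rating": 4.7},  # over budget
    {"name": "Shoe C", "price": 99.0, "rating": None},  # missing rating
])
print([p["name"] for p in ok], [p["name"] for p in bad])
```

Keeping the rejected records, rather than dropping them, makes it easy to report edge cases back as feedback.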

Dataset curation

"Find the top 50 AI research papers from 2024 with author names, institutions, and citation counts"

Agent searches academic databases, visits publisher sites, extracts paper metadata, cross-references citation counts, structures everything for analysis.
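When results are gathered from several publishers, the same paper can show up more than once with slightly different titles or counts. As an optional client-side check, you could key records on a normalized title, keep the highest citation count seen, and re-rank. This is a hypothetical post-processing sketch: the `title` and `citations` fields are assumptions about your schema, and the sample titles are made up.

```python
import re
from typing import Dict, List


def normalize_title(title: str) -> str:
    # Lowercase and collapse runs of punctuation/whitespace so near-identical titles match
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def citation_count(paper: dict) -> int:
    # Treat a missing or null citation count as zero
    return paper.get("citations") or 0


def top_papers(papers: List[dict], n: int = 50) -> List[dict]:
    """Deduplicate by normalized title (keeping the highest count), then return the top n by citations."""
    best: Dict[str, dict] = {}
    for paper in papers:
        key = normalize_title(paper["title"])
        kept = best.get(key)
        if kept is None or citation_count(paper) > citation_count(kept):
            best[key] = paper
    ranked = sorted(best.values(), key=citation_count, reverse=True)
    return ranked[:n]


papers = [
    {"title": "Scaling Agents", "citations": 10},
    {"title": "scaling agents.", "citations": 25},  # same paper from another source
    {"title": "Browser Automation Survey", "citations": 5},
]
print(top_papers(papers, n=2))
```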

Complex browser interactions

"Extract pricing from SaaS sites that require clicking 'See Pricing' buttons"

Agent handles JavaScript-heavy sites, clicks through multi-step flows, waits for dynamic content to load, navigates modal windows and forms.

The end of brittle scraping scripts

Traditional web scraping means:

- Mapping site structures manually

- Writing custom selectors for each page

- Maintaining brittle scripts when sites change

- Building separate workflows for each data source
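To make the brittleness concrete, here is a deliberately naive hand-written scraper of the kind the list above describes. It uses only the standard library's `html.parser` and keys on a hypothetical `price` CSS class. The HTML snippets are made up. If a redesign renames that class, the scraper silently returns nothing.

```python
from html.parser import HTMLParser
from typing import List


class PriceScraper(HTMLParser):
    """Collects text inside elements whose class attribute is exactly 'price'."""

    def __init__(self) -> None:
        super().__init__()
        self._depth = 0  # > 0 while inside a matching element
        self.prices: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._depth:
            self._depth += 1
        elif dict(attrs).get("class") == "price":  # the hard-coded selector
            self._depth = 1

    def handle_endtag(self, tag):
        if self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if self._depth and data.strip():
            self.prices.append(data.strip())


def scrape_prices(html: str) -> List[str]:
    parser = PriceScraper()
    parser.feed(html)
    parser.close()
    return parser.prices


before = '<div class="tier"><span class="price">$19/mo</span></div>'
after = '<div class="tier"><span class="plan-cost">$19/mo</span></div>'  # same page after a redesign
print(scrape_prices(before))  # ['$19/mo']
print(scrape_prices(after))   # []
```

Nothing errors when the layout changes. The data just disappears, which is why these scripts need constant maintenance.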

Agent replaces all of that with a prompt. It figures out navigation, handles changes automatically, and works across any site without custom code.

Finding data sources, clicking through pages, copying information, and structuring it takes a human hours. Agent does it in minutes.

/agent vs /extract

The /agent endpoint is the next evolution of our /extract endpoint. It uses AI agents to intelligently gather and structure data from across the web. Unlike /extract, Agent requires only a prompt; URLs are optional, which makes it flexible enough for a wide variety of use cases.

What's different:

| Feature | Agent | Extract |
|---|---|---|
| URLs Required | Optional | Required |
| Web Search | Built-in | None |
| Navigation | Autonomous | Single page |
| Browser Actions | Full support | Limited |
| Scale | One to thousands | Single page |
| Status | Active development | Deprecated |

If you're using /extract, migration is straightforward: convert your extraction logic into a natural language prompt. Agent handles everything /extract did, plus autonomous discovery and navigation. A detailed migration guide will be available soon.
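One mechanical way to start a migration is to turn the field list you already maintain for /extract into prose. The helper below is purely illustrative: its name and the shape of `fields` are assumptions, not part of the Firecrawl SDK. The resulting string would go in the `prompt` argument. Any URLs you previously passed to /extract can go in the optional `urls` argument shown earlier.

```python
from typing import Dict


def fields_to_prompt(goal: str, fields: Dict[str, str]) -> str:
    """Build a natural-language /agent prompt from a field-name -> description mapping (hypothetical helper)."""
    parts = [f"{name} ({description})" for name, description in fields.items()]
    return f"{goal}. For each result, extract: " + "; ".join(parts) + "."


prompt = fields_to_prompt(
    "Compare pricing tiers on each page",
    {"tier_name": "name of the plan", "monthly_price": "price per month in USD"},
)
print(prompt)
```

Naming each field explicitly in the prompt keeps the wording close to the schema you will pass alongside it.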

Try it, break it, let us know what doesn't work. Every edge case you find helps us improve.

Available now

Agent is live across all our integrations:

- API – Full control in your applications

- SDKs – Python and Node with Pydantic/Zod schemas

- Playground – Try it immediately

Coming soon

- MCP – Work with Claude, Gemini, OpenAI agents

- n8n – Add Agent to workflow automations

- Zapier – Integrate with thousands of apps

Start building

- Read the /agent documentation

- Experiment in the Playground

- Share your projects on Discord

Gather data wherever it lives on the web. Start building today.
